Posted by Dave Burke, VP of Engineering

In the past year, Android users around the globe have installed apps, built by developers like you, over 65 billion times on Google Play. To help developers continue to build amazing experiences on top of Android, today at Google I/O, we announced a number of new things we’re doing with the platform, including the next Developer Preview of Android N, an extension of Android into virtual reality, an update to Android Studio, and much more!

Image: Android N Developer Preview is available to try on a range of devices

Android N: Performance, Productivity and Security

With Android N, we want to achieve a new level of product excellence for Android, so we’ve carried out some pretty deep surgery on the platform, rewriting and redesigning some fundamental aspects of how the system works. For Android N, we are focused on three key themes: performance, productivity and security. The first Developer Preview introduced a brand new JIT compiler to improve software performance, make app installs faster, and take up less storage. The second N Developer Preview included Vulkan, a new 3D rendering API to help game developers deliver high performance graphics on mobile devices. Both previews also brought useful productivity improvements to Android, including Multi-Window support and Direct Reply.

Image: Multi-Window mode on Android N

Android N also adds some important new features to help keep users safer and more secure. Inspired by how Chromebooks apply updates, we’re introducing seamless updates, so that new Android devices built on N can install system updates in the background. This means that the next time a user powers up such a device, it can automatically and seamlessly switch into the updated system image.
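
To make the idea concrete, here is a minimal sketch of how a background update followed by a switch at the next boot could be modeled. It assumes a two-slot layout (one copy of the system running, one idle copy receiving the update), which this post does not spell out. The class, its methods, and the version strings are all hypothetical and are not part of any Android API.

```java
import java.util.EnumMap;
import java.util.Map;

// Hypothetical model of a two-slot update flow; NOT Android's real updater code.
final class SeamlessUpdateSketch {
    // Assumption: the device keeps two copies of the system image.
    enum Slot {
        A, B;

        Slot other() {
            return this == A ? B : A;
        }
    }

    private final Map<Slot, String> images = new EnumMap<>(Slot.class);
    private Slot active = Slot.A;
    private boolean updatePending = false;

    SeamlessUpdateSketch(String initialVersion) {
        images.put(Slot.A, initialVersion);
        images.put(Slot.B, initialVersion);
    }

    // Write the new image to the idle slot while the active slot keeps running.
    void installInBackground(String newVersion) {
        images.put(active.other(), newVersion);
        updatePending = true;
    }

    // On the next power-up, switch to the freshly written slot if one is waiting.
    void reboot() {
        if (updatePending) {
            active = active.other();
            updatePending = false;
        }
    }

    String runningVersion() {
        return images.get(active);
    }

    Slot activeSlot() {
        return active;
    }

    public static void main(String[] args) {
        // Version names are made up for illustration.
        SeamlessUpdateSketch device = new SeamlessUpdateSketch("N-preview-2");
        device.installInBackground("N-preview-3");
        System.out.println("Before reboot: " + device.runningVersion());
        device.reboot();
        System.out.println("After reboot: " + device.runningVersion());
    }
}
```

The point of the sketch is that the user keeps running the old image until the reboot, so the install itself never interrupts them.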

Today’s release of Android N Developer Preview 3 is our first beta-quality candidate, available to test on your primary phone or tablet. You can opt in to the Android Beta Program at android.com/beta and run Android N on your Nexus 6, 9, 5X, 6P, Nexus Player, Pixel C, and Android One (General Mobile 4G). Because we’re inviting more people to try this beta release, you can expect to see an uptick in usage of your apps on N; if you’ve got an Android app, you should be testing how it works on N and watching for feedback from users.

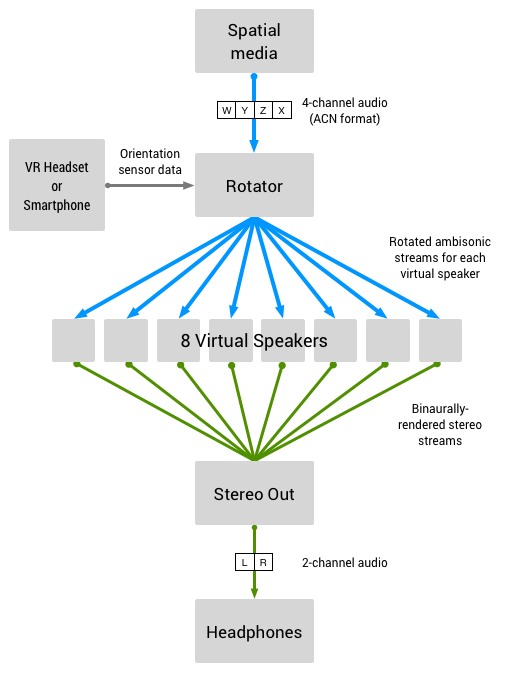

VR Mode in Android

Android was built for today’s multi-screen world; in fact, Android powers your phone, your tablet, and the watch on your wrist, and it even works in your car and in your living room, all the while helping you move seamlessly between devices. As we look to what’s next, we believe your phone can be a really powerful new way to see the world and experience new content virtually, in a more immersive way; but, until this point, high quality mobile VR wasn’t possible across the Android ecosystem. That’s why we’ve worked at all levels of the Android stack in N, from how the operating system reads sensor data to how it sends pixels to the display, to make it purpose-built for high quality mobile VR experiences, with VR Mode in Android.

There are a number of performance enhancements designed for developers, including single buffer rendering and access to an exclusive CPU core for VR apps. Within your apps, you can take advantage of smooth head-tracking and stereo notifications that work for VR. Most importantly, Android N provides for very low latency graphics; in fact, motion-to-photon latency on Nexus 6P running Developer Preview 3 is <20 ms, the speed necessary for users to feel immersed, as if they are actually in another place. We’ll be covering all of the new VR updates tomorrow at 9AM PT in the VR at Google session, livestreamed from Google I/O.
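
To put the 20 ms motion-to-photon figure in context, here is a small sketch that compares a latency budget with the time a display spends on a single frame. The 60 Hz refresh rate is an assumption chosen for illustration (this post does not state a refresh rate), and the class and method names are hypothetical.

```java
// Hypothetical helper for reasoning about a latency budget; not an Android API.
final class LatencyBudgetSketch {
    // Time the display spends on one frame, in milliseconds.
    static double frameTimeMs(double refreshHz) {
        return 1000.0 / refreshHz;
    }

    // How many frame intervals fit inside the given latency budget.
    static double framesWithinBudget(double budgetMs, double refreshHz) {
        return budgetMs / frameTimeMs(refreshHz);
    }

    public static void main(String[] args) {
        double refreshHz = 60.0; // assumed refresh rate, not taken from the post
        double budgetMs = 20.0;  // the motion-to-photon figure quoted above
        System.out.printf("Frame time: %.1f ms%n", frameTimeMs(refreshHz));
        System.out.printf("Frames within budget: %.2f%n",
                framesWithinBudget(budgetMs, refreshHz));
    }
}
```

Under that assumption the budget covers only slightly more than one frame interval, which suggests why the work had to span everything from sensor reads to display output.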

Android Instant Apps: real apps, without the installation

We want to make it easier for users to discover and use your apps. So what if your app was just a tap away? What if users didn’t have to install it at all? Today, we’re introducing Android Instant Apps as part of our effort to evolve the way we think about apps. Whether someone discovers your app from search, social media, messaging or other deep links, they’ll be able to experience a fast and powerful native Android app without needing to stop and install your app first or reauthenticate. Best of all, Android Instant Apps is compatible with all Android devices running Jelly Bean or higher (4.1+) with Google Play services. Android Instant Apps functionality is an upgrade to your existing Android app, not a new, separate app; you can sign up to request early access to the documentation.

Android Wear 2.0: UI changes and standalone apps

This morning at Google I/O, we also announced the most significant Android Wear update since its launch two years ago: Android Wear 2.0. Based on what we’ve learned from users and developers, we’re evolving the platform to improve key watch experiences: watch faces, messaging, and fitness. We’re also making a number of UI changes and updating our design guidelines to make your apps more consistent, intuitive, and beautiful. With Android Wear 2.0, apps can be standalone and have direct network access to the cloud via a Bluetooth, Wi-Fi, or cellular connection. Since your app won’t have to rely on the Data Layer APIs, it can continue to offer full functionality even if the paired phone is far away or turned off. You can read about all of the new features available in today’s preview here.

Android Studio 2.2 Preview: a new layout designer, constraint layout, and much more

Android Studio is the quickest way to get up and running with Android N and all our new platform features. Today at Google I/O, we previewed Android Studio 2.2, another big update to the IDE, designed to help you code faster with smart new tooling features built in. One of the headline features is our rewritten layout designer with the new constraint layout. In addition to helping you get out of XML to do your layouts visually, the new tools help you easily design for Android’s many great devices. Once you’re happy with a layout, we do all the hard work to automatically calculate constraints for you, so your UIs will resize automatically on different screen sizes. Here’s an overview of more of what’s new in 2.2 Preview (we’ll be diving into more detail on this update at 10AM PT tomorrow in “What’s new in Android Development Tools”, livestreamed from Google I/O):

- Speed: New layout designer and constraint layout, Espresso test recording and even faster builds

- Smarts: APK analyzer, Layout inspector, expanded Android code analysis and IntelliJ 2016.1

- Platform Support: Enhanced Jack compiler / Java 8 support, Expanded C++ support with CMake and NDK-Build, Firebase support and enhanced accessibility

Image: New Layout Editor and Constraint Layout in Android Studio 2.2 Preview

This is just a small taste of some of the new updates for Android, announced today at Google I/O. There are more than 50 Android-related sessions over the next three days; if you’re not able to join us in person, many of them will be livestreamed, and all of them will be posted to YouTube after we’re done. We can’t wait to see what you build!