Back in the eighties, when network constraints limited data transfers, people took to the streets and walked their floppy disks where they needed to go. And Sneakernet was born.

In the world of cloud and exponential data growth, the size of the disk and the speed of your sneakers may have changed, but the solution is the same: Sometimes the best way to move data is to ship it on physical media.

Today, we’re excited to introduce Transfer Appliance to help you ingest large amounts of data into Google Cloud Platform (GCP).

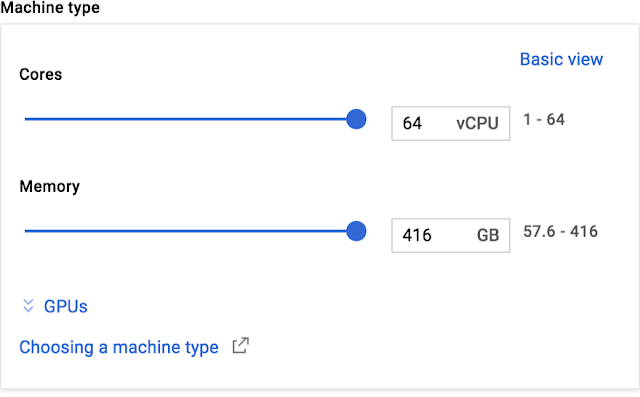

Transfer Appliance offers up to 480TB in 4U or 100TB in 2U of raw data capacity in a single rackmount device.

Like many organizations we talk to, you probably have large amounts of data that you want to use to train machine learning models. You have huge archives and backup libraries taking up expensive space in your data center. Or IoT devices flooding your storage arrays. There’s all this data waiting to get to the cloud, but it’s impeded by expensive, limited bandwidth. With Transfer Appliance, you can finally take advantage of all that GCP has to offer — machine learning, advanced analytics, content serving, archive and disaster recovery — without upgrading your network infrastructure or acquiring third-party data migration tools.

Working with customers, we’ve found that the typical enterprise has many petabytes of data, and available network bandwidth between 100 Mbps and 1 Gbps. Depending on the available bandwidth, transferring 10 PB of that data would take between three and 34 years — much too long.
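To make the arithmetic behind estimates like these concrete, here is a minimal Python sketch that converts a data size and a link speed into years of transfer time. The function name `transfer_time_years` and the `efficiency` parameter are illustrative, and the 0.75 efficiency value is an assumption: the post does not say how its figures were derived. With that assumed derating, the results land close to the range quoted above.

```python
# Rough transfer-time estimate over a network link.
# Decimal units are assumed: 1 PB = 10**15 bytes, 1 Gbps = 10**9 bits/s.

PB = 10**15                       # bytes in a petabyte (decimal)
MBPS = 10**6                      # bits per second in 1 Mbps
GBPS = 10**9                      # bits per second in 1 Gbps
SECONDS_PER_YEAR = 365 * 24 * 3600


def transfer_time_years(data_bytes: float, bandwidth_bps: float,
                        efficiency: float = 1.0) -> float:
    """Return the years needed to move data_bytes over a link.

    efficiency is the fraction of nominal bandwidth actually usable
    (a hypothetical derating factor, not a figure from the post).
    """
    if bandwidth_bps <= 0:
        raise ValueError("bandwidth_bps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    seconds = data_bytes * 8 / (bandwidth_bps * efficiency)
    return seconds / SECONDS_PER_YEAR


if __name__ == "__main__":
    for label, bandwidth in (("100 Mbps", 100 * MBPS), ("1 Gbps", GBPS)):
        ideal = transfer_time_years(10 * PB, bandwidth)
        derated = transfer_time_years(10 * PB, bandwidth, efficiency=0.75)
        print(f"10 PB over {label}: {ideal:.1f} years at full line rate, "
              f"{derated:.1f} years at an assumed 75% efficiency")
```

Real transfers also depend on protocol overhead, contention with other traffic, and retries, so treat the output as an order-of-magnitude guide rather than a prediction.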

Figure: Estimated transfer times for given capacity and bandwidth.

Figure: Compare the transfer times for 1 petabyte of data.

Customers have been testing Transfer Appliance for several months, and love what they see:

"Google Transfer Appliance moves petabytes of environmental and geographic data for Makani so we can find out where the wind is the most windy." — Ruth Marsh, Technical Program Manager at Makani

"Using a service like Google Transfer Appliance meant I could transfer hundreds of terabytes of data in days not weeks. Now we can leverage all that Google Cloud Platform has to offer as we bring narratives to life for our clients." — Tom Taylor, Head of Engineering at The Mill

Transfer Appliance joins the growing family of Google Cloud Data Transfer services. Initially available in the US, the service comes in two configurations: 100TB or 480TB of raw storage capacity, or up to 200TB or 1PB compressed. The 100TB model is priced at $300, plus shipping via FedEx (approximately $500); the 480TB model is priced at $1,800, plus shipping (approximately $900). To learn more, visit the documentation.

We think you’re going to love getting to the cloud in a matter of weeks rather than years. Sign up to reserve a Transfer Appliance today. You can also sign up for a GCP free trial.