CameraX, Android's Jetpack camera library, is getting an exciting update to its Dual Concurrent Camera feature, making it even easier to integrate this feature into your app. This feature allows you to stream from 2 different cameras at the same time. The original version of Dual Concurrent Camera was released in CameraX 1.3.0, and it was already a huge leap in making this feature easier to implement.

Starting with 1.5.0-alpha01, CameraX can also handle the composition of the 2 camera streams. This update is purely additive. It doesn't remove any existing functionality, and it isn't a breaking change to your existing Dual Concurrent Camera code. To have CameraX handle the composition, use the new SingleCameraConfig constructor, which takes an additional CompositionSettings parameter. Since you'll be creating 2 SingleCameraConfigs, use the same constructor for both.

The way you check for concurrent camera support hasn't changed from the prior version of this feature. As a reminder, here is what that code looks like.

```kotlin
import androidx.camera.core.CameraSelector

// Set up primary and secondary camera selectors if supported on device.
var primaryCameraSelector: CameraSelector? = null
var secondaryCameraSelector: CameraSelector? = null

for (cameraInfos in cameraProvider.availableConcurrentCameraInfos) {
    // firstOrNull returns null (instead of throwing) when no camera in this
    // set has the requested lens facing.
    primaryCameraSelector = cameraInfos.firstOrNull {
        it.lensFacing == CameraSelector.LENS_FACING_FRONT
    }?.cameraSelector
    secondaryCameraSelector = cameraInfos.firstOrNull {
        it.lensFacing == CameraSelector.LENS_FACING_BACK
    }?.cameraSelector

    if (primaryCameraSelector == null || secondaryCameraSelector == null) {
        // If either a primary or secondary selector wasn't found, reset both
        // to move on to the next list of CameraInfos.
        primaryCameraSelector = null
        secondaryCameraSelector = null
    } else {
        // If both primary and secondary camera selectors were found, we can
        // conclude the search.
        break
    }
}

if (primaryCameraSelector == null || secondaryCameraSelector == null) {
    // Front and back concurrent camera not available. Handle accordingly.
}
```
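The same search can also be written as a small generic function that returns the first matching pair, or null, which makes the logic easy to exercise off-device. This is a sketch, not CameraX API: `findConcurrentPair`, `Facing`, and `FakeCameraInfo` are hypothetical names introduced here for illustration only.

```kotlin
/**
 * Hypothetical helper (not part of CameraX): returns the first
 * (primary, secondary) pair found within a single set of concurrently
 * usable cameras, or null if no set has a camera matching each predicate.
 */
fun <T> findConcurrentPair(
    concurrentSets: List<List<T>>,
    isPrimary: (T) -> Boolean,
    isSecondary: (T) -> Boolean,
): Pair<T, T>? =
    concurrentSets.firstNotNullOfOrNull { cameras ->
        // Both cameras must come from the same set to be usable together.
        val primary = cameras.firstOrNull(isPrimary)
        val secondary = cameras.firstOrNull(isSecondary)
        if (primary != null && secondary != null) primary to secondary else null
    }

// Hypothetical stand-ins for CameraInfo and lens facing, so the search
// logic can run without a device.
enum class Facing { FRONT, BACK }

data class FakeCameraInfo(val id: String, val facing: Facing)
```

On a device, the same call could take `cameraProvider.availableConcurrentCameraInfos` with predicates such as `{ it.lensFacing == CameraSelector.LENS_FACING_FRONT }` and `{ it.lensFacing == CameraSelector.LENS_FACING_BACK }`, after which the selectors are `pair.first.cameraSelector` and `pair.second.cameraSelector`.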

Here’s the updated code snippet showing how to implement picture-in-picture, with the back camera stream scaled down to fit into the lower-right corner of the full-size front camera stream. In this example, CameraX handles the composition of the camera streams.

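```kotlin
import androidx.camera.core.CompositionSettings
import androidx.camera.core.ConcurrentCamera.SingleCameraConfig

// If 2 concurrent camera selectors were found, create 2 SingleCameraConfigs
// and compose them in a picture-in-picture layout.
// Assumes both selectors from the previous snippet are non-null here, and
// that useCaseGroup and lifecycleOwner are defined elsewhere in your app.
val primary = SingleCameraConfig(
    primaryCameraSelector,
    useCaseGroup,
    CompositionSettings.Builder()
        .setAlpha(1.0f)
        // Full-size primary (front) stream as the background.
        .setOffset(0.0f, 0.0f)
        .setScale(1.0f, 1.0f)
        .build(),
    lifecycleOwner
)
val secondary = SingleCameraConfig(
    secondaryCameraSelector,
    useCaseGroup,
    CompositionSettings.Builder()
        .setAlpha(1.0f)
        // One-third size, inset from the lower-right corner.
        .setOffset(2 / 3f - 0.1f, -2 / 3f + 0.1f)
        .setScale(1 / 3f, 1 / 3f)
        .build(),
    lifecycleOwner
)

// Bind to lifecycle
val concurrentCamera = cameraProvider.bindToLifecycle(listOf(primary, secondary))
```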
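The `2 / 3f - 0.1f` offset in the snippet above can be derived rather than hard-coded. The sketch below assumes that offsets and scales are expressed in normalized device coordinates (each axis spans [-1, 1], the origin is the center of the output, and y points up), so a stream scaled by `s` extends `s` units from its center. Under that assumption, `lowerRightOffset`, a hypothetical helper that is not part of CameraX, reproduces the values used above for a 1/3 scale and a 0.1 margin.

```kotlin
/**
 * Hypothetical helper (not part of CameraX): computes the offset that places
 * a uniformly scaled stream in the lower-right corner of the output.
 *
 * Assumes normalized device coordinates: x and y in [-1, 1], origin at the
 * center, y pointing up. A stream scaled by `scale` then has a half-extent of
 * `scale` on each axis.
 */
fun lowerRightOffset(scale: Float, margin: Float): Pair<Float, Float> {
    // Move the center in from the right edge (x = 1) by one half-extent plus
    // the margin, and likewise up from the bottom edge (y = -1).
    val x = 1f - scale - margin
    val y = -(1f - scale - margin)
    return x to y
}
```

Passing the result to `setOffset(offset.first, offset.second)` along with `setScale(1 / 3f, 1 / 3f)` gives the same layout as the snippet above; a larger `margin` moves the inset further from the corner.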

You are not constrained to a picture-in-picture layout. For instance, you could define a side-by-side layout by setting the offsets and scaling factors accordingly. You want to keep both dimensions scaled by the same amount to avoid a stretched preview. Here’s how that might look.

```kotlin
// If 2 concurrent camera selectors were found, create 2 SingleCameraConfigs
// and compose them in a side-by-side layout.
// Each stream is scaled to half size on both axes (so neither is stretched)
// and centered in the left or right half of the [-1, 1] output.
val primary = SingleCameraConfig(
    primaryCameraSelector,
    useCaseGroup,
    CompositionSettings.Builder()
        .setAlpha(1.0f)
        .setOffset(-0.5f, 0.0f)
        .setScale(0.5f, 0.5f)
        .build(),
    lifecycleOwner
)
val secondary = SingleCameraConfig(
    secondaryCameraSelector,
    useCaseGroup,
    CompositionSettings.Builder()
        .setAlpha(1.0f)
        .setOffset(0.5f, 0.0f)
        .setScale(0.5f, 0.5f)
        .build(),
    lifecycleOwner
)

// Bind to lifecycle
val concurrentCamera = cameraProvider.bindToLifecycle(listOf(primary, secondary))
```
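Because a mistyped offset can silently push a stream off-screen or on top of its neighbor, it can help to check a layout before binding. The helpers below are a hypothetical sketch, not CameraX types: `Placement` simply mirrors the offset and scale you would pass to `CompositionSettings.Builder`, and the bounds math assumes normalized device coordinates ([-1, 1] per axis, origin at the center), in which a stream offset by `o` and scaled by `s` spans `[o - s, o + s]` on that axis.

```kotlin
/** Hypothetical description of one stream's placement (not a CameraX type). */
data class Placement(
    val offsetX: Float,
    val offsetY: Float,
    val scaleX: Float,
    val scaleY: Float,
)

/** Axis-aligned rectangle in normalized device coordinates. */
data class NdcRect(val left: Float, val bottom: Float, val right: Float, val top: Float)

// Assumes NDC: offset o and scale s cover [o - s, o + s] on each axis.
fun Placement.bounds() =
    NdcRect(offsetX - scaleX, offsetY - scaleY, offsetX + scaleX, offsetY + scaleY)

/** True when both axes share the same scale, so the preview is not stretched. */
fun Placement.isUniform(tolerance: Float = 1e-6f) =
    kotlin.math.abs(scaleX - scaleY) <= tolerance

/** True when the stream stays fully inside the [-1, 1] output on both axes. */
fun Placement.fitsInOutput(): Boolean {
    val r = bounds()
    return r.left >= -1f && r.right <= 1f && r.bottom >= -1f && r.top <= 1f
}

/** True when two placements cover any common area (touching edges don't count). */
fun overlaps(a: Placement, b: Placement): Boolean {
    val ra = a.bounds()
    val rb = b.bounds()
    return ra.left < rb.right && rb.left < ra.right &&
        ra.bottom < rb.top && rb.bottom < ra.top
}
```

`isUniform` captures the advice to scale both dimensions by the same amount, while `fitsInOutput` and `overlaps` flag placements that leave the output or cover each other.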

We’re excited to offer this improvement to an already developer-friendly feature. Truly the CameraX way! CompositionSettings in Dual Concurrent Camera is currently in alpha, so if you have feature requests to improve upon it before the API is locked in, please give us feedback in the CameraX Discussion Group. And check out the full CameraX 1.5.0-alpha01 release notes to see what else is new in CameraX.

Posted by Donovan McMurray – Developer Relations Engineer

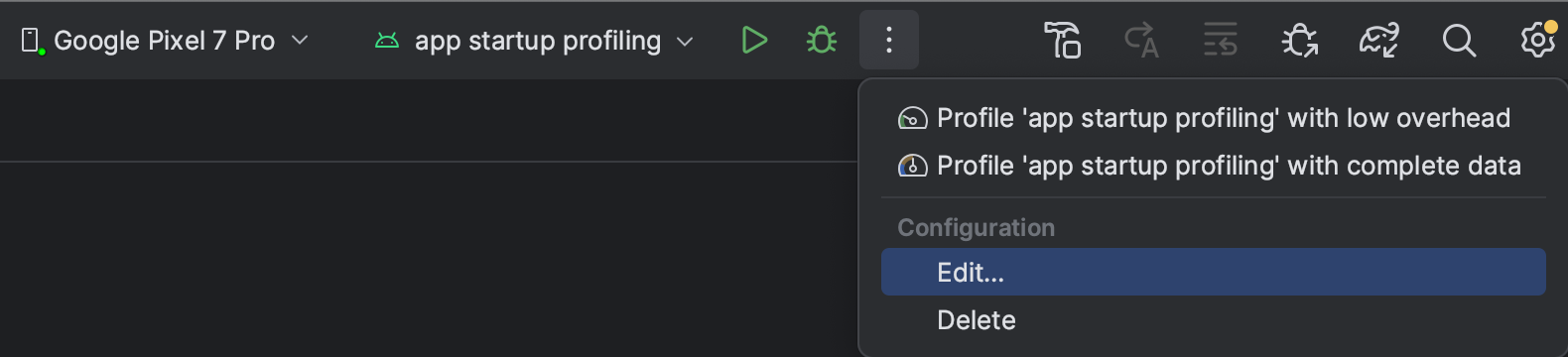

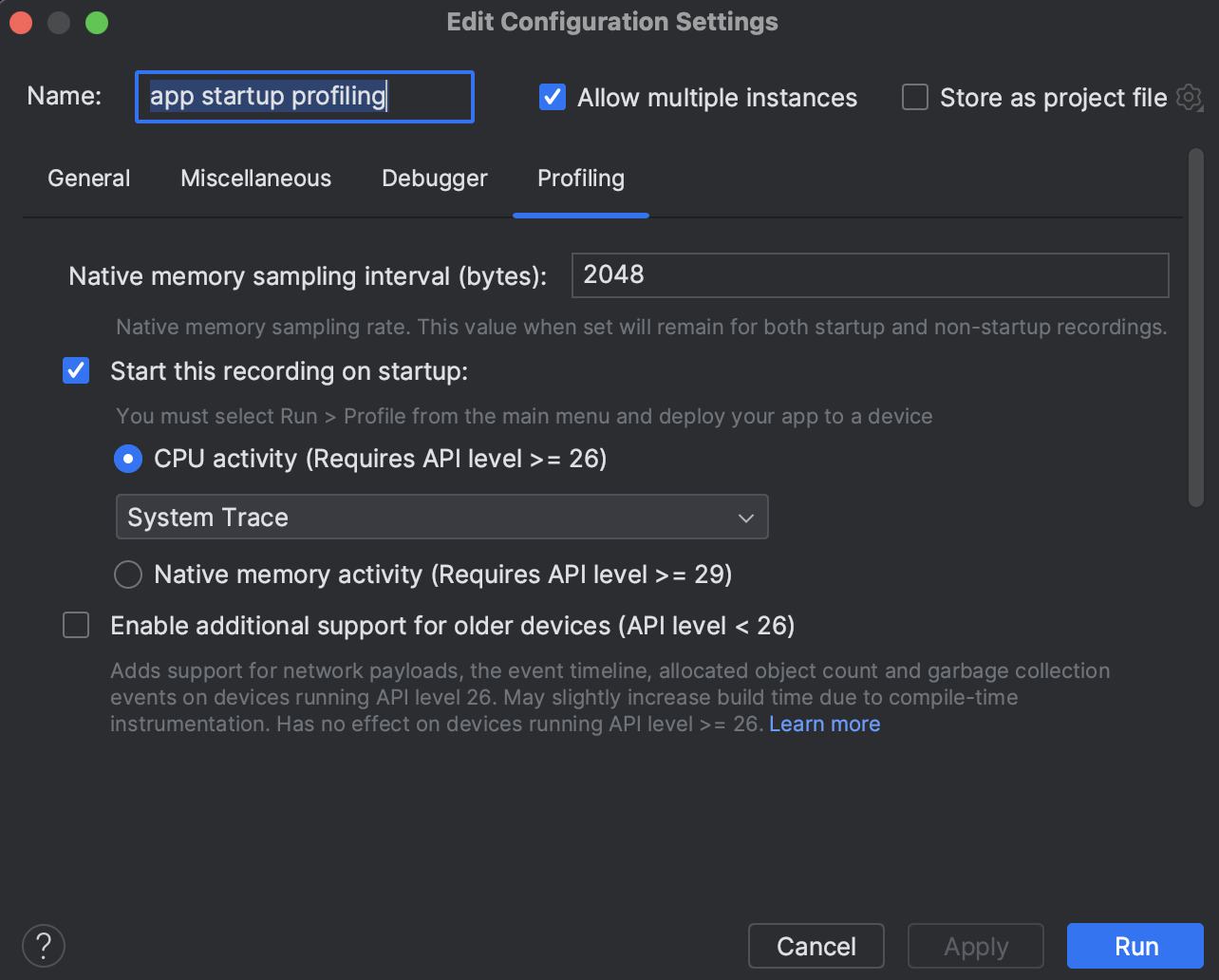

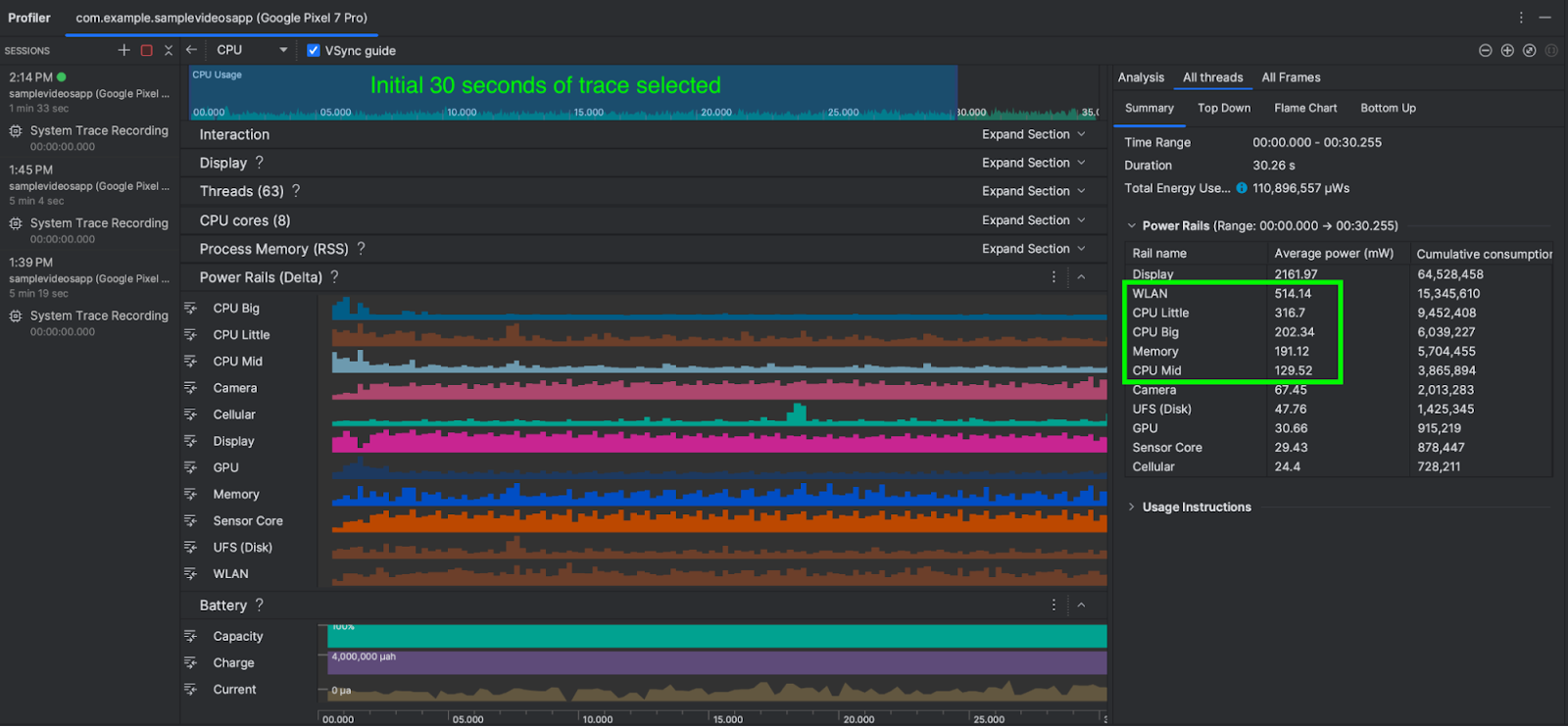
