In the last decade, reinforcement learning (RL) has become one of the most promising research areas in machine learning and has demonstrated great potential for solving sophisticated real-world problems, such as chip placement and resource management, and solving challenging games (e.g., Go, Dota 2, and hide-and-seek). In simplest terms, an RL infrastructure is a loop of data collection and training, where actors explore the environment and collect samples, which are then sent to the learners to train and update the model. Most current RL techniques require many iterations over batches of millions of samples from the environment to learn a target task (e.g., Dota 2 learns from batches of 2 million frames every 2 seconds). As such, an RL infrastructure should not only scale efficiently (e.g., increase the number of actors) and collect an immense number of samples, but also be able to swiftly iterate over these extensive amounts of samples during training.

|

| Overview of an RL system in which an actor sends trajectories (e.g., multiple samples) to a learner. The learner trains a model using the sampled data and pushes the updated model back to the actor (e.g. TF-Agents, IMPALA). |

Today we introduce Menger1, a massive large-scale distributed RL infrastructure with localized inference that scales up to several thousand actors across multiple processing clusters (e.g., Borg cells), reducing the overall training time in the task of chip placement. In this post we describe how we implement Menger using Google TPU accelerators for fast training iterations, and present its performance and scalability on the challenging task of chip placement. Menger reduces the training time by up to 8.6x compared to a baseline implementation.

Menger System Design

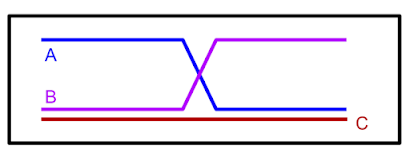

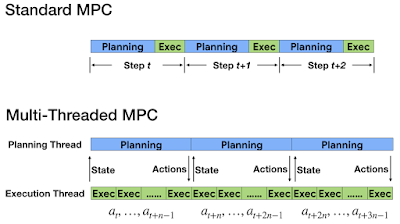

There are various distributed RL systems, such as Acme and SEED RL, each of which focus on optimizing a single particular design point in the space of distributed reinforcement learning systems. For example, while Acme uses local inference on each actor with frequent model retrieval from the learner, SEED RL benefits from a centralized inference design by allocating a portion of TPU cores for performing batched calls. The tradeoffs between these design points are (1) paying the communication cost of sending/receiving observations and actions to/from a centralized inference server or paying the communication cost of model retrieval from a learner and (2) the cost of inference on actors (e.g., CPUs) compared to accelerators (e.g., TPUs/GPUs). Because of the requirements of our target application (e.g., size of observations, actions, and model size), Menger uses local inference in a manner similar to Acme, but pushes the scalability of actors to virtually an unbounded limit. The main challenges to achieving massive scalability and fast training on accelerators include:

- Servicing a large number of read requests from actors to a learner for model retrieval can easily throttle the learner and quickly become a major bottleneck (e.g., significantly increasing the convergence time) as the number of actors increases.

- The TPU performance is often limited by the efficiency of the input pipeline in feeding the training data to the TPU compute cores. As the number of TPU compute cores increases (e.g., TPU Pod), the performance of the input pipeline becomes even more critical for the overall training runtime.

Efficient Model Retrieval

To address the first challenge, we introduce transparent and distributed caching components between the learner and the actors optimized in TensorFlow and backed by Reverb (similar approach used in Dota). The main responsibility of the caching components is to strike a balance between the large number of requests from actors and the learner job. Adding these caching components not only significantly reduces the pressure on the learner to service the read requests, but also further distributes the actors across multiple Borg cells with a marginal communication overhead. In our study, we show that for a 16 MB model with 512 actors, the introduced caching components reduce the average read latency by a factor of ~4.0x leading to faster training iterations, especially for on-policy algorithms such as PPO.

|

| Overview of a distributed RL system with multiple actors placed in different Borg cells. Servicing the frequent model update requests from a massive number of actors across different Borg cells throttles the learner and the communication network between learner and actors, which leads to a significant increase in the overall convergence time. The dashed lines represent gRPC communication between different machines. |

High Throughput Input Pipeline

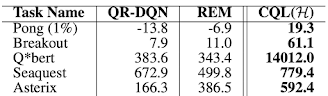

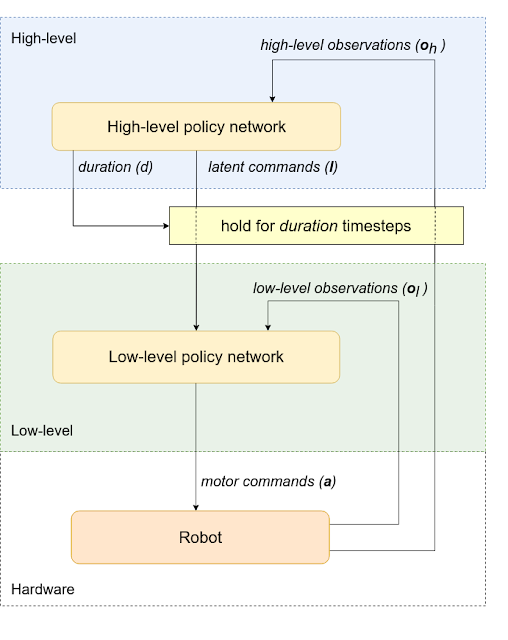

To deliver a high throughput input data pipeline, Menger uses Reverb, a recently open-sourced data storage system designed for machine learning applications that provides an efficient and flexible platform to implement experience replay in a variety of on-policy/off-policy algorithms. However, using a single Reverb replay buffer service does not currently scale well in a distributed RL setting with thousands of actors, and simply becomes inefficient in terms of write throughput from actors.

To better understand the efficiency of the replay buffer in a distributed setting, we evaluate the average write latency for various payload sizes from 16 MB to 512 MB and a number of actors ranging from 16 to 2048. We repeat the experiment when the replay buffer and actors are placed on the same Borg cell. As the number of actors grows the average write latency also increases significantly. Expanding the number of actors from 16 to 2048, the average write latency increases by a factor of ~6.2x and ~18.9x for payload size 16 MB and 512 MB, respectively. This increase in the write latency negatively impacts the data collection time and leads to inefficiency in the overall training time.

|

| The average write latency to a single Reverb replay buffer for various payload sizes (16 MB - 512 MB) and various number of actors (16 to 2048) when the actors and replay buffer are placed on the same Borg cells. |

To mitigate this, we use the sharding capability provided by Reverb to increase the throughput between actors, learner, and replay buffer services. Sharding balances the write load from the large number of actors across multiple replay buffer servers, instead of throttling a single replay buffer server, and also minimizes the average write latency for each replay buffer server (as fewer actors share the same server). This enables Menger to scale efficiently to thousands of actors across multiple Borg cells.

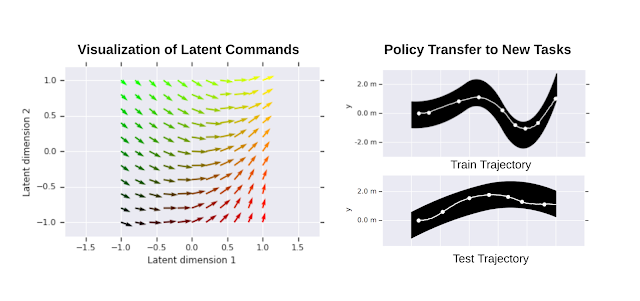

Case Study: Chip Placement

We studied the benefits of Menger in the complex task of chip placement for a large netlist. Using 512 TPU cores, Menger achieves significant improvements in the training time (up to ~8.6x, reducing the training time from ~8.6 hours down to merely one hour in the fastest configuration) compared to a strong baseline. While Menger was optimized for TPUs, that the key factor for this performance gain is the architecture, and we would expect to see similar gains when tailored to use on GPUs.

|

| The improvement in training time using Menger with variable number of TPU cores compared to a baseline in the task of chip placement. |

We believe that Menger infrastructure and its promising results in the intricate task of chip placement demonstrate an innovative path forward to further shorten the chip design cycle and has the potential to not only enable further innovations in the chip design process, but other challenging real-world tasks as well.

Acknowledgments

Most of the work was done by Amir Yazdanbakhsh, Junchaeo Chen, and Yu Zheng. We would like to also thank Robert Ormandi, Ebrahim Songhori, Shen Wang, TF-Agents team, Albin Cassirer, Aviral Kumar, James Laudon, John Wilkes, Joe Jiang, Milad Hashemi, Sat Chatterjee, Piotr Stanczyk, Sabela Ramos, Lasse Espeholt, Marcin Michalski, Sam Fishman, Ruoxin Sang, Azalia Mirhosseini, Anna Goldie, and Eric Johnson for their help and support.

1 A Menger cube is a three-dimensional fractal curve, and the inspiration for the name of this system, given that the proposed infrastructure can virtually scale ad infinitum.↩