In the last two decades, dramatic advances in compute and connectivity have allowed game developers to create works of ever-increasing scope and complexity. Simple linear levels have evolved into photorealistic open worlds, procedural algorithms have enabled games with unprecedented variety, and expanding internet access has transformed games into dynamic online services. Unfortunately, scope and complexity have grown more rapidly than the size of quality assurance teams or the capabilities of traditional automated testing. This poses a challenge to both product quality (seen in delayed releases and post-launch patches) and developer quality of life.

Machine learning (ML) techniques offer a possible solution, as they have demonstrated the potential to profoundly impact game development flows – they can help designers balance their game and empower artists to produce high-quality assets in a fraction of the time traditionally required. Furthermore, they can be used to train challenging opponents that can compete at the highest levels of play. Yet some ML techniques can pose requirements that currently make them impractical for production game teams, including the design of game-specific network architectures, the development of expertise in implementing ML algorithms, or the generation of billions of frames of training data. Conversely, game developers operate in a setting that offers unique advantages to leverage ML techniques, such as direct access to the game source, an abundance of expert demonstrations, and the uniquely interactive nature of video games.

Today, we present an ML-based system that game developers can use to quickly and efficiently train game-testing agents, helping developers find serious bugs quickly while allowing human testers to focus on more complex and intricate problems. The resulting solution requires no ML expertise, works on many of the most popular game genres, and can train an ML policy, which generates game actions from game state, in less than an hour on a single game instance. We have also released an open source library that demonstrates a functional application of these techniques.

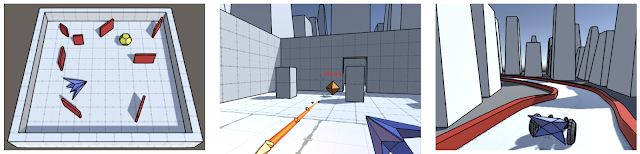

Supported genres include arcade, action/adventure, and racing games.

The Right Tool for the Right Job

The most elemental form of video game testing is to simply play the game. A lot. Many of the most serious bugs (such as crashes or falling out of the world) are easy to detect and fix; the challenge is finding them within the vast state space of a modern game. As such, we decided to focus on training a system that could “just play the game” at scale.

We found that the most effective way to do this was not to train a single, super-effective agent that could play the entire game end to end, but to let developers train an ensemble of game-testing agents, each of which could effectively accomplish a task lasting a few minutes. Game developers refer to these tasks as “gameplay loops”.

These core gameplay behaviors are often expensive to program through traditional means, but are much more efficient to train than a single end-to-end ML model. In practice, commercial games create longer loops by repeating and remixing core gameplay loops, which means that developers can test large stretches of gameplay by combining ML policies with a small amount of simple scripting.

Simulation-centric, Semantic API

One of the most fundamental challenges in applying ML to game development is bridging the chasm between the simulation-centric world of video games and the data-centric world of ML. Rather than asking developers to directly convert the game state into custom, low-level ML features (which would be too labor intensive) or attempting to learn from raw pixels (which would require too much training data), our system provides developers with an idiomatic, game-developer-friendly API that allows them to describe their game in terms of the essential state that a player observes and the semantic actions they can perform. All of this information is expressed via concepts that are familiar to game developers, such as entities, raycasts, 3D positions and rotations, buttons, and joysticks.

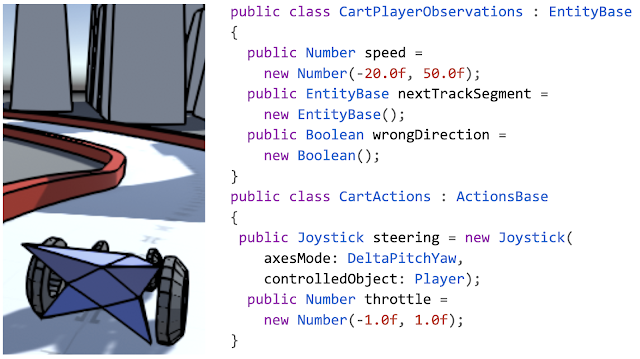

As you can see in the example below, the API allows the specification of observations and actions in just a few lines of code.

Example actions and observations for a racing game.

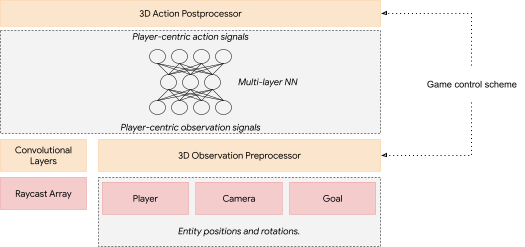
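To make the idea of a semantic description concrete, here is a minimal Python sketch of how a racing game might declare its actions and observations. It is not the API of the released library: every class and field name (`Joystick`, `Button`, `Raycast`, `EntityObservation`, `GameSpec`, `racing_game_spec`) is hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Joystick:
    """Analog action with one value per axis, each in [-1, 1]. Hypothetical type."""
    name: str
    axes: int = 1


@dataclass
class Button:
    """Digital action that is either pressed or released. Hypothetical type."""
    name: str


@dataclass
class Raycast:
    """Distance sensor cast from the player at a fixed angle. Hypothetical type."""
    name: str
    angle_degrees: float
    max_distance: float


@dataclass
class EntityObservation:
    """World-space pose of an entity the player can see. Hypothetical type."""
    name: str
    position: Tuple[float, float, float] = (0.0, 0.0, 0.0)
    rotation_degrees: Tuple[float, float, float] = (0.0, 0.0, 0.0)


@dataclass
class GameSpec:
    """Everything the agent observes and every action it can take."""
    actions: List[object] = field(default_factory=list)
    observations: List[object] = field(default_factory=list)

    def describe(self) -> List[str]:
        """Return one readable line per building block, actions first."""
        lines = [f"action:{type(a).__name__}:{a.name}" for a in self.actions]
        lines += [f"observation:{type(o).__name__}:{o.name}" for o in self.observations]
        return lines


def racing_game_spec() -> GameSpec:
    """Assumed spec for a racing game: three actions, two entities, three feelers."""
    return GameSpec(
        actions=[Joystick("steering"), Joystick("throttle"), Button("handbrake")],
        observations=[
            EntityObservation("car"),
            EntityObservation("next_checkpoint"),
            Raycast("feeler_left", angle_degrees=-30.0, max_distance=50.0),
            Raycast("feeler_center", angle_degrees=0.0, max_distance=50.0),
            Raycast("feeler_right", angle_degrees=30.0, max_distance=50.0),
        ],
    )
```

The point of the sketch is that the developer only names familiar game concepts; nothing in the declaration mentions tensors, feature vectors, or network layers.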

From API to Neural Network

This high-level, semantic API is not just easy to use; it also allows the system to flexibly adapt to the specific game being developed. The specific combination of API building blocks employed by the game developer informs our choice of network architecture, since it provides information about the type of gaming scenario in which the system is deployed. Examples include handling action outputs differently depending on whether they represent a digital button or an analog joystick, and using techniques from image processing to handle observations that result from an agent probing its environment with raycasts (similar to how autonomous vehicles probe their environment with LIDAR).
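The button-versus-joystick distinction can be illustrated with a small Python sketch. It shows one common convention, which is an assumption here and not necessarily what the system does: a digital button output is read as a press probability through a sigmoid and then thresholded, while each analog joystick axis is squashed into [-1, 1] with tanh. The function names are hypothetical.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def button_head(logit: float, threshold: float = 0.5) -> bool:
    """Read a raw network output as a press probability, then threshold it."""
    return sigmoid(logit) >= threshold


def joystick_head(raw_axes: List[float]) -> List[float]:
    """Squash raw network outputs into the joystick range [-1, 1], per axis."""
    return [math.tanh(value) for value in raw_axes]
```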

Our API is sufficiently general to allow modeling of many common control schemes (the configuration of action outputs that control movement) in games, such as first-person games, third-person games with camera-relative controls, racing games, twin-stick shooters, etc. Since 3D movement and aiming are often an integral aspect of gameplay, we create networks that automatically tend towards simple behaviors such as aiming, approach, or avoidance in these games. The system accomplishes this by analyzing the game’s control scheme to create neural network layers that perform custom processing of observations and actions in that game. For example, positions and rotations of objects in the world are automatically translated into directions and distances from the point of view of the AI-controlled game entity. This transformation typically increases the speed of learning and helps the learned network generalize better.
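The world-to-agent translation described above can be sketched in a few lines of Python. This is a simplified 2D illustration under stated assumptions (yaw measured counterclockwise from the +x axis, a hypothetical function name `to_egocentric`), not the system's actual network layer.

```python
import math
from typing import Tuple


def to_egocentric(
    agent_xy: Tuple[float, float],
    agent_yaw_degrees: float,
    target_xy: Tuple[float, float],
) -> Tuple[float, float]:
    """Return (distance, bearing_degrees) of the target as seen by the agent.

    A bearing of 0 means straight ahead; positive values are to the agent's left.
    """
    dx = target_xy[0] - agent_xy[0]
    dy = target_xy[1] - agent_xy[1]
    yaw = math.radians(agent_yaw_degrees)
    # Project the world-space offset onto the agent's forward and left axes.
    forward = dx * math.cos(yaw) + dy * math.sin(yaw)
    left = -dx * math.sin(yaw) + dy * math.cos(yaw)
    distance = math.hypot(forward, left)
    bearing = math.degrees(math.atan2(left, forward))
    return distance, bearing
```

Because the output depends only on the target's offset relative to the agent, the same situation encountered anywhere on the map produces the same input to the network, which is one plausible reason such a transformation helps generalization.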

Learning From The Experts in Real Time

After generating a neural network architecture, the network needs to be trained to play the game using an appropriate choice of learning algorithm.

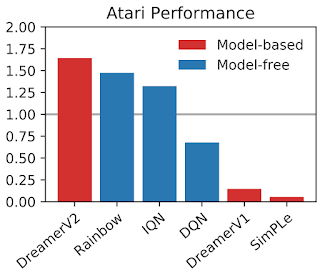

Reinforcement learning (RL), in which an ML policy is trained directly to maximize a reward, may seem like the obvious choice since RL algorithms have been successfully used to train highly competent ML policies for games. However, RL algorithms tend to require more data than a single game instance can produce in a reasonable amount of time, and achieving good results in a new domain often requires hyperparameter tuning and strong ML domain knowledge.

Instead, we found that imitation learning (IL), which trains ML policies by observing experts play the game, works well for our use case. Unlike RL, where the agent needs to discover a good policy on its own, IL only needs to recreate the behavior of a human expert. Since game developers and testers are experts in their own games, they can easily provide demonstrations of how to play the game.

We use an IL approach inspired by the DAgger algorithm, which allows us to take advantage of video games’ most compelling quality – interactivity. Thanks to the reductions in training time and data requirements enabled by our semantic API, training is effectively real-time, giving a developer the ability to fluidly switch between providing gameplay demonstrations and watching the system play. This results in a natural feedback loop, in which a developer iteratively provides corrections to a continuous stream of ML policies.
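The feedback loop can be illustrated with a toy, DAgger-style sketch in Python. Everything here is an assumption made for illustration: the world is a 1D track with the goal at position 0, `expert_policy` stands in for the human holding the controller, and a lookup table stands in for the neural network. It is not the training code of the system.

```python
from typing import Callable, Dict, List, Tuple

State = int
Action = int  # -1 = move left, 0 = stay, +1 = move right
Policy = Callable[[State], Action]


def expert_policy(state: State) -> Action:
    """Stand-in for the human demonstrator: always step toward position 0."""
    if state > 0:
        return -1
    if state < 0:
        return 1
    return 0


def fit_policy(dataset: List[Tuple[State, Action]]) -> Policy:
    """'Train' a lookup-table policy; unseen states fall back to moving right."""
    table: Dict[State, Action] = dict(dataset)
    return lambda state: table.get(state, 1)


def rollout(driver: Policy, start: State, steps: int) -> List[State]:
    """Run `driver` on the toy track and return every state it visits."""
    state, visited = start, []
    for _ in range(steps):
        visited.append(state)
        state = max(-10, min(10, state + driver(state)))
    return visited


def dagger(demo_starts: List[State], practice_starts: List[State],
           iterations: int, steps: int = 12) -> Policy:
    """Alternate between rollouts and retraining on an aggregated dataset."""
    dataset: List[Tuple[State, Action]] = []
    policy = fit_policy(dataset)
    for i in range(iterations):
        # Iteration 0 is a pure demonstration: the expert drives.
        # Afterwards the learner drives and the expert only supplies corrections.
        driver = expert_policy if i == 0 else policy
        starts = demo_starts if i == 0 else practice_starts
        for start in starts:
            for state in rollout(driver, start, steps):
                dataset.append((state, expert_policy(state)))
        policy = fit_policy(dataset)  # retrain on everything gathered so far
    return policy


# One demonstration only: the learner has never seen positions above 3.
cloned = dagger(demo_starts=[3], practice_starts=[6], iterations=1)
# Three further rounds of correction on states the learner itself reaches.
corrected = dagger(demo_starts=[3], practice_starts=[6], iterations=4)
```

In this toy setup the policy trained from a single demonstration drifts away from the goal when started at position 6, while the policy that received corrections on its own mistakes reaches position 0. The key design choice is that corrections are collected on the states the learner actually visits, not only on the states the expert would have visited.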

From the developer’s perspective, providing a demonstration or a correction to faulty behavior is as simple as picking up the controller and starting to play the game. Once they are done, they can put the controller down and watch the ML policy play. The result is a training experience that is real-time, interactive, highly experiential, and, very often, more than a little fun.

ML policy for an FPS game, trained with our system.

Conclusion

We present a system which combines a high-level semantic API with a DAgger-inspired interactive training flow that enables training of useful ML policies for video game testing in a wide variety of genres. We have released an open source library as a functional illustration of our system. No ML expertise is required and training of agents for test applications often takes less than an hour on a single developer machine. We hope that this work will help inspire the development of ML techniques that can be deployed in real-world game-development flows in ways that are accessible, effective, and fun to use.

Acknowledgements

We’d like to thank the core members of the project: Dexter Allen, Leopold Haller, Nathan Martz, Hernan Moraldo, Stewart Miles and Hina Sakazaki. Training algorithms are provided by TF Agents, and on-device inference by TF Lite. Special thanks to our research advisors, Olivier Bachem, Erik Frey, and Toby Pohlen, and to Eugene Brevdo, Jared Duke, Oscar Ramirez and Neal Wu who provided helpful guidance and support.