Finding the balance between graphics quality and performance

Ares: Rise of Guardians is a mobile-to-PC sci-fi MMORPG developed by Second Dive, a Korean game studio known for its action RPG series, and published by Kakao Games. Set in a vast universe with a detailed, futuristic backdrop, Ares features exciting gameplay and beautifully rendered characters, including combatants wearing battle suits. However, these richly detailed graphics meant that some users’ devices struggled to run the game without a drop in performance.

For some users, the device would overheat after just a few minutes of gameplay and enter a thermally throttled state. In this state, the CPU and GPU frequencies are reduced, which lowers the game’s performance and causes the FPS to drop. Once the lower FPS had allowed the device to cool, the FPS would rise again and the cycle would repeat. This FPS fluctuation made the game feel janky.

Adjust the performance in real time with Android Adaptability

To solve this problem, Kakao Games used Android Adaptability and Unity Adaptive Performance to improve the performance and thermal management of their game.

Android Adaptability is a set of tools and libraries for understanding and responding to changing performance, thermal, and user situations in real time. These include the Android Dynamic Performance Framework’s thermal APIs, which provide information about the thermal state of a device, and the PerformanceHint API, which helps Android choose the optimal CPU operating point and core placement. Both APIs work with the Unity Adaptive Performance package to help developers optimize their games.

Android Adaptability and Unity Adaptive Performance work together to adjust the graphics settings of your app or game to match the capabilities of the user’s device. As a result, they can improve performance, reduce thermal throttling and power consumption, and preserve battery life.

Results

After integrating adaptive performance, Ares managed its thermal situation better, which resulted in less throttling. Users were able to enjoy a higher frame rate, and FPS stability increased from 75% to 96%.
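The article does not say how FPS stability was measured. One plausible definition, assumed here purely for illustration, is the share of FPS samples that fall within a tolerance band around the target frame rate. The function name, the 10% tolerance, and the sample values below are all hypothetical and are not Kakao Games' data or method.

```python
def fps_stability(fps_samples, target_fps, tolerance=0.1):
    """Return the fraction of samples within +/- tolerance of target_fps.

    Assumed definition for illustration; the article does not specify one.
    """
    if not fps_samples:
        raise ValueError("need at least one FPS sample")
    low = target_fps * (1 - tolerance)
    high = target_fps * (1 + tolerance)
    stable = sum(1 for fps in fps_samples if low <= fps <= high)
    return stable / len(fps_samples)


# Hypothetical per-second FPS readings with one dip caused by throttling.
samples = [60, 59, 58, 45, 60, 61, 60, 60, 57, 60]
print(f"{fps_stability(samples, target_fps=60):.0%}")  # prints "90%"
```

Under this definition, a run that oscillates in and out of throttling scores lower than one that holds a steady frame rate, even if both have a similar average FPS.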

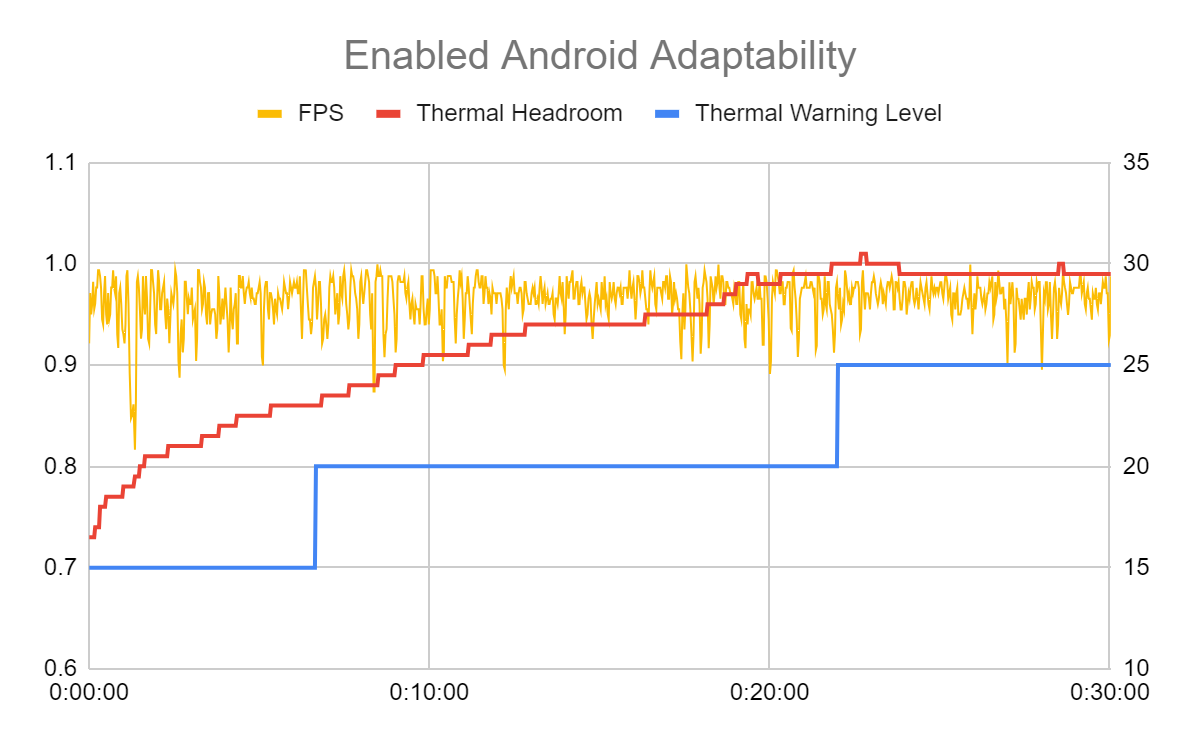

In the charts below, the blue line indicates the thermal warning level. The bottom line (0.7) indicates no warning, the midline (0.8) is throttling imminent, and the upper line (0.9) is throttling. As you can see in the first chart, before implementing Android Adaptability, throttling happened after about 16 minutes of gameplay. In the second chart, you can see that after integration, throttling didn’t occur until around 22 minutes.
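To make the chart encoding concrete, here is a minimal sketch that decodes the three plotted values into warning levels and finds when throttling first appears in a trace. The enum, the function name, and both traces are hypothetical; the traces are only shaped like the charts described above and are not the measured data.

```python
from enum import Enum


class WarningLevel(Enum):
    # Chart values as described in the text.
    NO_WARNING = 0.7
    THROTTLING_IMMINENT = 0.8
    THROTTLING = 0.9


def first_throttling_minute(samples):
    """Return the minute of the first sample at the throttling level, or None.

    samples is a list of (minute, chart_value) pairs.
    """
    for minute, value in samples:
        if WarningLevel(round(value, 1)) is WarningLevel.THROTTLING:
            return minute
    return None


# Hypothetical traces, not the real measurements.
before = [(0, 0.7), (10, 0.8), (16, 0.9), (20, 0.9)]
after = [(0, 0.7), (14, 0.8), (22, 0.9), (25, 0.9)]
print(first_throttling_minute(before))  # prints 16
print(first_throttling_minute(after))   # prints 22
```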

Kakao Games also wanted to reduce device heating, which they knew wasn’t possible while the graphics quality setting stayed continuously high. The best practice is to lower graphical fidelity gradually as device temperature rises, so that the frame rate stays constant and the device reaches thermal equilibrium. Kakao Games therefore created a six-step change sequence with Android Adaptability that offers stable FPS and lower device temperatures. Automatic changes in fidelity are reflected in the in-game graphics quality settings (resolution, texture, shadow, effect, etc.) in the settings menu. Because some users want the highest graphics quality even if their device can’t sustain performance at that level, Kakao Games gave them the option to manually disable Unity Adaptive Performance.
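The idea of stepping fidelity down gradually can be sketched as a small controller. This is not Kakao Games' implementation and not the Unity Adaptive Performance API: the class name, the warning-level strings, and the one-step-per-update policy are assumptions made for illustration. Only the six-step count and the user opt-out come from the text above.

```python
from dataclasses import dataclass

QUALITY_STEPS = 6  # six-step sequence from the article; step 0 = highest fidelity


@dataclass
class QualityController:
    step: int = 0         # current step, 0 (highest quality) to 5 (lowest)
    enabled: bool = True  # False models the user disabling adaptation

    def update(self, warning: str) -> int:
        """Move at most one step per call, based on the thermal warning level."""
        if not self.enabled:
            self.step = 0  # user wants maximum quality regardless of heat
        elif warning in ("throttling_imminent", "throttling"):
            self.step = min(self.step + 1, QUALITY_STEPS - 1)
        elif warning == "no_warning":
            self.step = max(self.step - 1, 0)
        return self.step


controller = QualityController()
for warning in ["no_warning", "throttling_imminent", "throttling_imminent",
                "throttling", "no_warning"]:
    controller.update(warning)
print(controller.step)  # prints 2
```

Changing one step at a time is the point of the design: a single large drop in quality would be more visible to the player, and a large jump back up would be more likely to restart the heat-and-throttle cycle. A real controller would probably also wait some time before raising quality again.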

Get started with Android Adaptability

Android Adaptability and Unity Adaptive Performance are now available to all Android game developers through the Android provider, on most Android devices running API level 30 or higher (thermal APIs) and API level 31 or higher (PerformanceHint API). The Android provider is available from Adaptive Performance version 5.0.0. The thermal APIs are integrated with Adaptive Performance so that developers can easily retrieve device thermal information, and the PerformanceHint API is called automatically on every Update() without any additional work.
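The API-level requirements above can be read as a simple gate, sketched here in Python. The function and feature names are hypothetical. Meeting the API level is a necessary condition only, since the text says "most" devices rather than all.

```python
THERMAL_MIN_API = 30           # thermal APIs, per the paragraph above
PERFORMANCE_HINT_MIN_API = 31  # PerformanceHint API, per the paragraph above


def available_features(api_level: int) -> set[str]:
    """Return the adaptability features an API level can support at all."""
    features = set()
    if api_level >= THERMAL_MIN_API:
        features.add("thermal")
    if api_level >= PERFORMANCE_HINT_MIN_API:
        features.add("performance_hint")
    return features


print(sorted(available_features(29)))  # prints []
print(sorted(available_features(30)))  # prints ['thermal']
print(sorted(available_features(31)))  # prints ['performance_hint', 'thermal']
```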

Learn how Android Adaptability and Unity Adaptive Performance can help you stabilize your game’s FPS and reduce thermal throttling.

Posted by Google for Developers
