Android users have demonstrated an increasing desire to create, personalize, and share video content online, whether to preserve their memories or to make people laugh. As such, media editing is a cornerstone of many engaging Android apps, and historically developers have often relied on external libraries to handle operations such as Trimming and Resizing. While these solutions are powerful, integrating and managing external library dependencies can introduce complexity and lead to challenges with managing performance and quality.

The Jetpack Media3 Transformer APIs offer a native Android solution that streamlines media editing with fast performance, extensive customizability, and broad device compatibility. In this blog post, we’ll walk through some of the most common editing operations with Transformer and discuss its performance.

Getting set up with Transformer

To get started with Transformer, check out our Getting Started documentation for details on how to add the dependency to your project and a basic understanding of the workflow when using Transformer. In a nutshell, you’ll:

- Create one or more MediaItem instances from your video file(s),

- Apply item-specific edits to them by building an EditedMediaItem for each MediaItem,

- Create a Transformer instance configured with settings applicable to the whole exported video,

- And finally, start the export to save your applied edits to a file.

Aside: You can also use a CompositionPlayer to preview your edits before exporting, but this is out of scope for this blog post, as this API is still a work in progress. Please stay tuned for a future post!

Here’s what this looks like in code:

    val mediaItem = MediaItem.Builder().setUri(mediaItemUri).build()
    val editedMediaItem = EditedMediaItem.Builder(mediaItem).build()
    val transformer = Transformer.Builder(context)
        .addListener(/* Add a Transformer.Listener instance here for completion events */)
        .build()
    transformer.start(editedMediaItem, outputFilePath)

Transcoding, Trimming, Muting, and Resizing with the Transformer API

Let’s now take a look at four of the most common single-asset media editing operations, starting with Transcoding.

Transcoding is the process of re-encoding an input file into a specified output format. For this example, we’ll request the output to have video in HEVC (H265) and audio in AAC. Starting with the code above, here are the lines that change:

    val transformer = Transformer.Builder(context)
        .addListener(...)
        .setVideoMimeType(MimeTypes.VIDEO_H265)
        .setAudioMimeType(MimeTypes.AUDIO_AAC)
        .build()

Many of you may already be familiar with FFmpeg, a popular open-source library for processing media files, so we’ll also include FFmpeg commands for each example to serve as a helpful reference. Here’s how you can perform the same transcoding with FFmpeg:

$ ffmpeg -i $inputVideoPath -c:v libx265 -c:a aac $outputFilePath

The next operation we’ll try is Trimming.

Specifically, we’ll set Transformer up to trim the input video from the 3 second mark to the 8 second mark, resulting in a 5 second output video. Starting again from the code in the “Getting set up” section above, here are the lines that change:

    // Configure the trim operation by adding a ClippingConfiguration to
    // the media item
    val clippingConfiguration = MediaItem.ClippingConfiguration.Builder()
        .setStartPositionMs(3000)
        .setEndPositionMs(8000)
        .build()
    val mediaItem = MediaItem.Builder()
        .setUri(mediaItemUri)
        .setClippingConfiguration(clippingConfiguration)
        .build()

    // Transformer also has a trim optimization feature we can enable.
    // This will prioritize Transmuxing over Transcoding where possible.
    // See more about Transmuxing further down in this post.
    val transformer = Transformer.Builder(context)
        .addListener(...)
        .experimentalSetTrimOptimizationEnabled(true)
        .build()

With FFmpeg:

$ ffmpeg -ss 00:00:03 -i $inputVideoPath -t 00:00:05 $outputFilePath
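Note that the two tools describe the same trim differently: ClippingConfiguration takes a start and an end position, while the FFmpeg command takes a seek position (-ss) and a duration (-t). As a rough sketch of that conversion, here is a small helper in plain Kotlin. The names formatFfmpegTimestamp, ffmpegTrimArgs, and FfmpegTrimArgs are made up for this illustration and are not part of Media3 or FFmpeg.

```kotlin
/** Holds the values for FFmpeg's -ss (seek) and -t (duration) options. Hypothetical helper type. */
data class FfmpegTrimArgs(val seek: String, val duration: String)

/** Formats a non-negative millisecond offset as HH:MM:SS.mmm, a time syntax FFmpeg accepts. */
fun formatFfmpegTimestamp(positionMs: Long): String {
    require(positionMs >= 0) { "positionMs must be non-negative" }
    val hours = (positionMs / 3_600_000).toString().padStart(2, '0')
    val minutes = ((positionMs / 60_000) % 60).toString().padStart(2, '0')
    val seconds = ((positionMs / 1_000) % 60).toString().padStart(2, '0')
    val millis = (positionMs % 1_000).toString().padStart(3, '0')
    return "$hours:$minutes:$seconds.$millis"
}

/** Converts a start/end clip in milliseconds into a seek position plus a duration. */
fun ffmpegTrimArgs(startMs: Long, endMs: Long): FfmpegTrimArgs {
    require(endMs > startMs) { "endMs must be after startMs" }
    return FfmpegTrimArgs(
        seek = formatFfmpegTimestamp(startMs),
        duration = formatFfmpegTimestamp(endMs - startMs)
    )
}
```

For the 3000 ms to 8000 ms clip used above, this yields a seek of 00:00:03.000 and a duration of 00:00:05.000, matching the -ss and -t values in the command.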

Next, we can mute the audio in the exported video file.

    val editedMediaItem = EditedMediaItem.Builder(mediaItem)
        .setRemoveAudio(true)
        .build()

The corresponding FFmpeg command:

$ ffmpeg -i $inputVideoPath -c copy -an $outputFilePath

And for our final example, we’ll try resizing the input video by scaling it down to half its original height and width.

    val scaleEffect = ScaleAndRotateTransformation.Builder()
        .setScale(0.5f, 0.5f)
        .build()
    val editedMediaItem = EditedMediaItem.Builder(mediaItem)
        .setEffects(
            Effects(
                /* audio */ emptyList(),
                /* video */ listOf(scaleEffect)
            )
        )
        .build()

An FFmpeg command could look like this:

$ ffmpeg -i $inputVideoPath -filter:v 'scale=w=trunc(iw/4)*2:h=trunc(ih/4)*2' $outputFilePath
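The trunc(iw/4)*2 expression in the FFmpeg command may look odd next to a plain 0.5 scale factor. It halves each dimension and rounds the result down to an even number, which is commonly needed because many encoders reject odd frame sizes when using 4:2:0 chroma subsampling. The sketch below mirrors only that arithmetic in plain Kotlin. FrameSize, halfRoundedDownToEven, and halvedEvenSize are hypothetical names for this illustration, and this says nothing about how Transformer itself rounds dimensions.

```kotlin
/** A frame size in pixels. Hypothetical type used only for this illustration. */
data class FrameSize(val width: Int, val height: Int)

/** Mirrors FFmpeg's trunc(x/4)*2: half of x, rounded down to the nearest even number. */
fun halfRoundedDownToEven(sizePx: Int): Int {
    require(sizePx >= 0) { "sizePx must be non-negative" }
    // Integer division truncates for non-negative values, like trunc() in the filter expression.
    return (sizePx / 4) * 2
}

/** Applies the same rounding to both dimensions of a frame. */
fun halvedEvenSize(input: FrameSize): FrameSize =
    FrameSize(halfRoundedDownToEven(input.width), halfRoundedDownToEven(input.height))
```

A 1280x720 input maps to 640x360 either way, but an 854x480 input maps to 426x240 rather than the odd 427x240 that a naive halving would produce.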

Of course, you can also combine these operations to apply multiple edits on the same video, but hopefully these examples serve to demonstrate that the Transformer APIs make configuring these edits simple.

Transformer API Performance results

Here are some benchmarking measurements for each of the 4 operations taken with the Stopwatch API, running on a Pixel 9 Pro XL device:

(Note that performance for operations like these can depend on a variety of factors, such as the current load the device is under, so the numbers below should be taken as rough estimates.)

Input video format: 10s 720p H264 video with AAC audio

- Transcoding to H265 video and AAC audio: ~1300ms

- Trimming video to 00:03-00:08: ~2300ms

- Muting audio: ~200ms

- Resizing video to half height and width: ~1200ms

Input video format: 25s 360p VP8 video with Vorbis audio

- Transcoding to H265 video and AAC audio: ~3400ms

- Trimming video to 00:03-00:08: ~1700ms

- Muting audio: ~1600ms

- Resizing video to half height and width: ~4800ms

Input video format: 4s 8k H265 video with AAC audio

- Transcoding to H265 video and AAC audio: ~2300ms

- Trimming video to 00:03-00:08: ~1800ms

- Muting audio: ~2000ms

- Resizing video to half height and width: ~3700ms
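One way to compare these numbers across inputs of different lengths is to express them as a multiple of real time, that is, seconds of input media handled per second of wall-clock time. The helper below is a minimal sketch of that arithmetic. realtimeFactor is a name invented for this post, and the results inherit the "rough estimate" caveat above.

```kotlin
/** Returns how many seconds of input media were processed per second of wall-clock time. */
fun realtimeFactor(inputDurationMs: Long, processingTimeMs: Long): Double {
    require(inputDurationMs >= 0) { "inputDurationMs must be non-negative" }
    require(processingTimeMs > 0) { "processingTimeMs must be positive" }
    return inputDurationMs.toDouble() / processingTimeMs
}
```

Applied to the Transcoding rows, realtimeFactor(10_000, 1_300) is about 7.7 for the 720p input, realtimeFactor(25_000, 3_400) is about 7.4 for the 360p input, and realtimeFactor(4_000, 2_300) is about 1.7 for the 8k input.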

One technique Transformer uses to speed up editing operations is prioritizing transmuxing for basic video edits where possible. Transmuxing refers to the process of repackaging video streams without re-encoding, which ensures high-quality output and significantly faster processing times.

When transmuxing is not possible, Transformer falls back to transcoding, a process that involves first decoding video samples into raw data, then re-encoding them for storage in a new container. Here are some of the differences between the two:

Transmuxing

- Transformer’s preferred approach when possible: a quick transformation that preserves elementary streams.

- Only applicable to basic operations, such as rotating, trimming, or container conversion.

- No quality loss or bitrate change.

Transcoding

- Transformer's fallback approach when Transmuxing isn't possible: involves decoding and re-encoding elementary streams.

- More extensive modifications to the input video are possible.

- Loss in quality due to re-encoding, but can achieve a desired bitrate target.
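To make the comparison above concrete, here is a deliberately simplified sketch of the decision it describes: if every requested edit is one of the basic operations, transmuxing is an option, otherwise the export needs transcoding. EditKind, ExportPath, and chooseExportPath are hypothetical names invented for this illustration. This is not Transformer's actual decision logic, which also has to account for factors such as input and output format support.

```kotlin
/** Hypothetical edit categories. The transmuxable flags follow the lists above. */
enum class EditKind(val transmuxable: Boolean) {
    ROTATE(true),
    TRIM(true),
    CONTAINER_CONVERSION(true),
    CHANGE_CODEC(false), // re-encoding into another format is transcoding by definition
    RESIZE(false)        // changing pixel dimensions requires decoding and re-encoding
}

/** The two export strategies discussed in this post. */
enum class ExportPath { TRANSMUX, TRANSCODE }

/** Picks TRANSMUX only when every requested edit can be done without re-encoding. */
fun chooseExportPath(edits: Set<EditKind>): ExportPath =
    if (edits.all { it.transmuxable }) ExportPath.TRANSMUX else ExportPath.TRANSCODE
```

In this model, a trim combined with a rotation stays on the transmuxing path, while adding a resize to the same export moves it to transcoding.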

We are continuously implementing further optimizations, such as the recently introduced experimentalSetTrimOptimizationEnabled setting that we used in the Trimming example above.

A trim is usually performed by re-encoding all the samples in the file, but since encoded media samples are stored chronologically in their container, we can improve efficiency by only re-encoding the part of the group of pictures (GOP) between the start point of the trim and the first keyframe at or after that start point, then stream-copying the rest.

Since we only decode and encode a fixed portion of any file, the encoding latency is roughly constant, regardless of what the input video duration is. For long videos, this improved latency is dramatic. The optimization relies on being able to stitch part of the input file with newly-encoded output, which means that the encoder's output format and the input format must be compatible.

If the optimization fails, Transformer automatically falls back to normal export.
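The idea behind the trim optimization can be sketched in a few lines of plain Kotlin: given the keyframe timestamps of the input, find the first keyframe at or after the trim start, re-encode only up to that keyframe, and stream-copy from there to the trim end. OptimizedTrimPlan and planOptimizedTrim are hypothetical names for this illustration, the keyframe list is assumed to be known up front, and returning null stands in for falling back to a normal export. Transformer's real implementation is more involved.

```kotlin
/** Describes which time ranges (in milliseconds) to re-encode and which to stream-copy. */
data class OptimizedTrimPlan(
    val reencodeStartMs: Long,
    val reencodeEndMs: Long,
    val copyStartMs: Long,
    val copyEndMs: Long
)

/**
 * Plans a trim that re-encodes only the samples between the trim start and the first
 * keyframe at or after it. Returns null when no keyframe falls inside the trim range,
 * in which case the caller would fall back to a normal export.
 */
fun planOptimizedTrim(keyframesMs: List<Long>, trimStartMs: Long, trimEndMs: Long): OptimizedTrimPlan? {
    require(trimStartMs < trimEndMs) { "trimStartMs must be before trimEndMs" }
    val firstKeyframeMs = keyframesMs.sorted()
        .firstOrNull { it >= trimStartMs && it < trimEndMs }
        ?: return null
    return OptimizedTrimPlan(
        reencodeStartMs = trimStartMs,
        reencodeEndMs = firstKeyframeMs, // empty range when the trim starts on a keyframe
        copyStartMs = firstKeyframeMs,
        copyEndMs = trimEndMs
    )
}
```

With keyframes every 2 seconds and the 3 second to 8 second trim from earlier, only the range from 3000 ms to 4000 ms is re-encoded and the remaining 4000 ms to 8000 ms is copied. The re-encoded range is bounded by the keyframe spacing rather than by the video's length, which is why the latency stays roughly constant for long inputs.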

What’s next?

As part of Media3, Transformer is a native solution with low integration complexity, is tested on and ensures compatibility with a wide variety of devices, and is customizable to fit your specific needs.

To dive deeper, you can explore Media3 Transformer documentation, run our sample apps, or learn how to complement your media editing pipeline with Jetpack Media3. We’ve already seen app developers benefit greatly from adopting Transformer, so we encourage you to try it out yourself to streamline your media editing workflows and enhance your app’s performance!

Posted by Nevin Mital – Developer Relations Engineer, and Kristina Simakova – Engineering Manager

Posted by Nevin Mital – Developer Relations Engineer, and Kristina Simakova – Engineering Manager

Posted by Caren Chang – Developer Relations Engineer

Posted by Caren Chang – Developer Relations Engineer

Posted by Nevin Mital - Developer Relations Engineer, Android Media

Posted by Nevin Mital - Developer Relations Engineer, Android Media

Posted by Caren Chang- Android Developer Relations Engineer

Posted by Caren Chang- Android Developer Relations Engineer

Posted by Kristina Simakova – Engineering Manager

Posted by Kristina Simakova – Engineering Manager

Posted by Roxanna Aliabadi Walker – Product Manager

Posted by Roxanna Aliabadi Walker – Product Manager

Posted by

Posted by

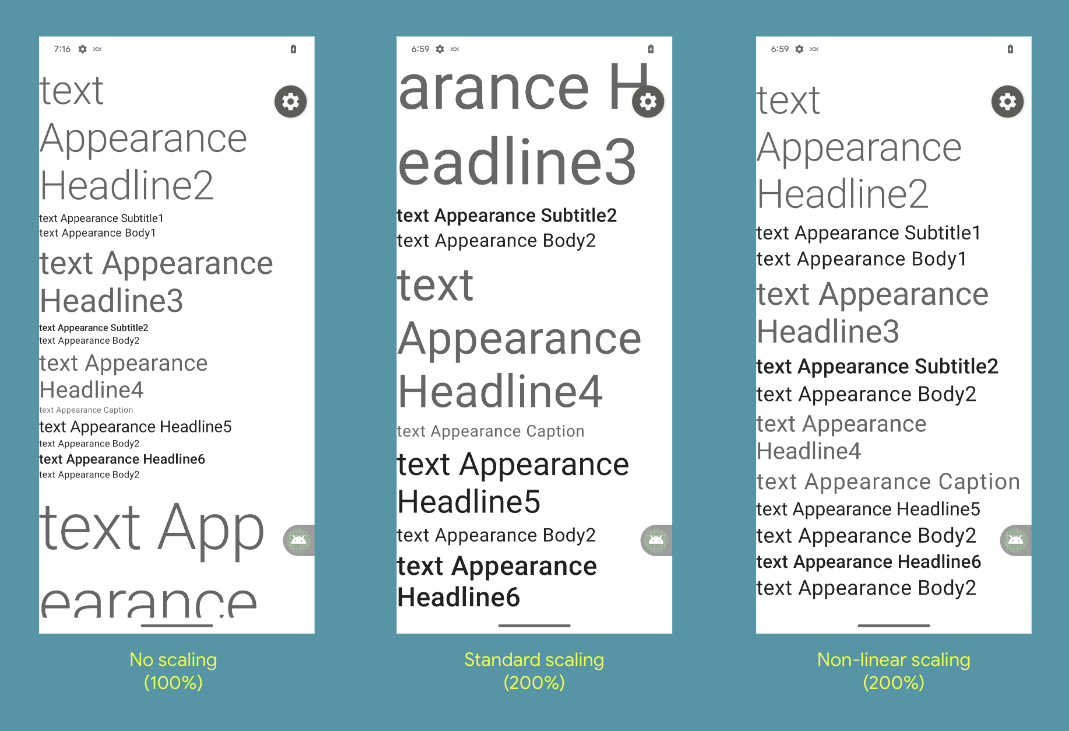

In Android 14, you should test your app UI with the maximum font size using the Font size option within the Accessibility > Display size and text settings. Ensure that the adjusted large text size setting is reflected in the UI, and that it doesn’t cause text to be cut off. Our

In Android 14, you should test your app UI with the maximum font size using the Font size option within the Accessibility > Display size and text settings. Ensure that the adjusted large text size setting is reflected in the UI, and that it doesn’t cause text to be cut off. Our