To make it even easier for users to listen on Android, developers at SoundCloud — an artist-first music platform — turned to Jetpack Glance to create a Liked Tracks widget for their highly-rated app, which boasts 4.6 stars and over 100 million downloads. With a catalog of over 400 million tracks from more than 40 million creators, SoundCloud is dedicated to connecting artists and fans through music, and this latest update to its Android app offers listeners an even more convenient way to enjoy their favorite tracks. Propelled by Glance, the team was able to complete the project in just two weeks, saving precious development time and boosting engagement.

Maximize visibility with user-friendly touchpoints

By showcasing the artwork of their recently liked tracks, the new Liked Tracks widget allows users to jump directly to a specific song or access their full track list right from their home screen. This keeps SoundCloud front and center for listeners, acting as a shortcut to their personal libraries and encouraging them to tune back in.

Liked Tracks isn’t SoundCloud’s first widget. Over a decade ago, SoundCloud developers used RemoteViews to create a Player widget that let users easily control playback and like tracks. After recently updating the Player widget based on design feedback, developers made sure to prioritize a personalized interface for Liked Tracks. The new widget features both light and dark modes, resizes freely to accommodate user preferences, and dynamically adapts its theme to complement the user's wallpaper. Backed by Glance, these design choices ensured the widget isn’t just seamless to use but also serves as an appealing and tailored gateway into the SoundCloud app.
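To illustrate how theming and resizing can be expressed in Glance, here is a minimal sketch. It is not SoundCloud's code: the class name `LikedTracksWidgetSketch`, the helper `headlineFor`, the size breakpoints, and the strings are all assumptions made for illustration. It relies on `GlanceTheme` for the color scheme (which follows light and dark mode and, where the device supports it, wallpaper-based dynamic colors) and on `SizeMode.Responsive` to render a layout per declared size.

```kotlin
import android.content.Context
import androidx.compose.runtime.Composable
import androidx.compose.ui.unit.DpSize
import androidx.compose.ui.unit.dp
import androidx.glance.GlanceId
import androidx.glance.GlanceModifier
import androidx.glance.GlanceTheme
import androidx.glance.LocalSize
import androidx.glance.appwidget.GlanceAppWidget
import androidx.glance.appwidget.SizeMode
import androidx.glance.appwidget.provideContent
import androidx.glance.background
import androidx.glance.layout.Box
import androidx.glance.layout.fillMaxSize
import androidx.glance.layout.padding
import androidx.glance.text.Text
import androidx.glance.text.TextStyle

// Hypothetical helper: picks a longer headline once the widget is wide enough.
// The 250dp breakpoint is an assumption, not a value from the article.
fun headlineFor(widthDp: Float): String =
    if (widthDp >= 250f) "Your liked tracks" else "Likes"

// Hypothetical widget class, for illustration only.
class LikedTracksWidgetSketch : GlanceAppWidget() {

    // Declare the sizes the widget has layouts for; the launcher picks the best fit.
    override val sizeMode: SizeMode = SizeMode.Responsive(
        setOf(DpSize(120.dp, 120.dp), DpSize(250.dp, 120.dp))
    )

    override suspend fun provideGlance(context: Context, id: GlanceId) {
        provideContent {
            // GlanceTheme supplies the color scheme used by the content below.
            GlanceTheme {
                Content()
            }
        }
    }

    @Composable
    private fun Content() {
        // With SizeMode.Responsive, LocalSize is one of the sizes declared above.
        val size = LocalSize.current
        Box(
            modifier = GlanceModifier
                .fillMaxSize()
                .background(GlanceTheme.colors.background)
                .padding(12.dp)
        ) {
            Text(
                text = headlineFor(size.width.value),
                style = TextStyle(color = GlanceTheme.colors.onBackground)
            )
        }
    }
}
```

In a real app, a widget like this would also need a `GlanceAppWidgetReceiver` and a manifest entry, which are omitted here for brevity.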

Accelerate development cycles with Glance

Glance also played a crucial role in streamlining the development of Liked Tracks. For developers already proficient in Compose, Glance’s intuitive design felt familiar, minimizing the learning curve and accelerating the team's onboarding. The platform’s collection of code samples provided a useful starting point, too, helping developers quickly grasp its capabilities and best practices. “Using sample app repositories is a great way to learn. I can check out an entire repository and inspect how the code operates,” said Sigute Kateivaite, lead SoundCloud engineer on the Android team. “It sped up our widget development by a lot.”

The declarative nature of Glance’s UI was especially beneficial to developers. Because they didn’t have to use additional XML files when building, developers could create cleaner, more readable code with less boilerplate. Glance also allowed them to work with modules separately, meaning components could be written and integrated one at a time and reused for later iterations. By isolating components, developers could quickly test modules, identify and resolve issues, and build for different states without duplication, leading to more efficient workflows.
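As a rough sketch of what this component-based approach can look like, the example below builds a widget body from small composables and a single state type, with no XML layout files. The state model `LikedTracksState`, the helper `statusMessage`, and the composables `TrackRow` and `LikedTracksContent` are hypothetical names. `Logo` echoes a component name mentioned later in this post, but its implementation here (plain text rather than artwork) is an assumption.

```kotlin
import androidx.compose.runtime.Composable
import androidx.compose.ui.unit.dp
import androidx.glance.GlanceModifier
import androidx.glance.GlanceTheme
import androidx.glance.layout.Column
import androidx.glance.layout.fillMaxWidth
import androidx.glance.layout.padding
import androidx.glance.text.Text
import androidx.glance.text.TextStyle

// Hypothetical UI state: one type covers every case the widget can display.
sealed interface LikedTracksState {
    object Loading : LikedTracksState
    object Empty : LikedTracksState
    data class Loaded(val titles: List<String>) : LikedTracksState
}

// Pure function, so it can be unit tested without rendering any UI.
fun statusMessage(state: LikedTracksState): String = when (state) {
    LikedTracksState.Loading -> "Loading your likes"
    LikedTracksState.Empty -> "No liked tracks yet"
    is LikedTracksState.Loaded -> "${state.titles.size} liked tracks"
}

// Small reusable component; a text stand-in for a real logo image.
@Composable
fun Logo(modifier: GlanceModifier = GlanceModifier) {
    Text(
        text = "SoundCloud",
        modifier = modifier,
        style = TextStyle(color = GlanceTheme.colors.primary)
    )
}

// Another reusable component: a single row showing a track title.
@Composable
fun TrackRow(title: String) {
    Text(
        text = title,
        modifier = GlanceModifier.padding(vertical = 4.dp),
        style = TextStyle(color = GlanceTheme.colors.onBackground),
        maxLines = 1
    )
}

// Composes the pieces; each state maps to its own branch, without duplicated layout code.
@Composable
fun LikedTracksContent(state: LikedTracksState) {
    Column(modifier = GlanceModifier.fillMaxWidth().padding(12.dp)) {
        Logo()
        when (state) {
            is LikedTracksState.Loaded -> state.titles.take(3).forEach { TrackRow(it) }
            else -> Text(
                text = statusMessage(state),
                style = TextStyle(color = GlanceTheme.colors.onBackground)
            )
        }
    }
}
```

Because each composable takes plain parameters, a component such as `TrackRow` can be reused in another widget or rendered on its own with sample data.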

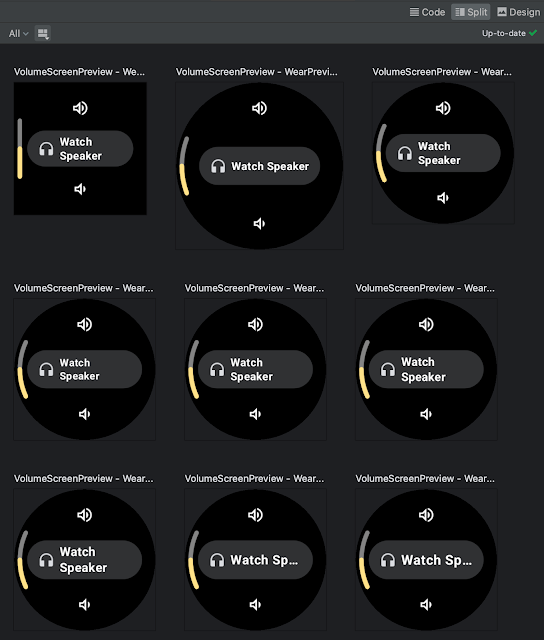

Glance’s design also improved overall code quality. Android Studio’s real-time preview support for Glance let developers build components in isolation, without integrating them into the widget or deploying the full widget to a phone. They could represent various states, view all relevant cases, and review changes to components without having to compile the full app. Put simply, Glance made developers more productive because it allowed them to iterate faster, refining the widget for a more polished final product.
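A preview of an isolated component might look like the sketch below. It assumes the `androidx.glance:glance-preview` and `androidx.glance:glance-appwidget-preview` artifacts are on the classpath and that the Android Studio version in use supports Glance previews. The component `TrackCount`, the helper `trackCountLabel`, and the preview dimensions are hypothetical.

```kotlin
import androidx.compose.runtime.Composable
import androidx.glance.GlanceTheme
import androidx.glance.preview.ExperimentalGlancePreviewApi
import androidx.glance.preview.Preview
import androidx.glance.text.Text
import androidx.glance.text.TextStyle

// Hypothetical pure helper that formats the label for a given number of likes.
fun trackCountLabel(count: Int): String =
    if (count == 0) "No liked tracks yet" else "$count liked tracks"

// Hypothetical component under development.
@Composable
fun TrackCount(count: Int) {
    Text(
        text = trackCountLabel(count),
        style = TextStyle(color = GlanceTheme.colors.onBackground)
    )
}

// One preview per state, rendered in the IDE without deploying the widget.
@OptIn(ExperimentalGlancePreviewApi::class)
@Preview(widthDp = 200, heightDp = 80)
@Composable
fun TrackCountEmptyPreview() {
    GlanceTheme { TrackCount(count = 0) }
}

@OptIn(ExperimentalGlancePreviewApi::class)
@Preview(widthDp = 200, heightDp = 80)
@Composable
fun TrackCountLoadedPreview() {
    GlanceTheme { TrackCount(count = 12) }
}
```

Keeping one preview function per state makes it easy to scan the empty and populated cases side by side while iterating.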

Elevate app widgets with the power of Glance

With effective new workflows and no major development issues, the SoundCloud team applauds Glance for streamlining a successful production. “With the new Liked Tracks widget, rollout has been really stable,” Sigute said. “Development and the testing process went really smoothly.” Early data also shows promising results — active users now interact with the widget to access the app multiple times a day on average.

Looking ahead, the SoundCloud team is eager to use more of Glance, such as adopting canonical layouts, to improve existing widgets and even develop new ones. While the current Liked Tracks widget focuses primarily on image display, the team is interested in including other types of content to further enrich the user experience. Developers also hope to migrate the Player widget over to Glance to access the framework’s robust theming options, simplify resizing processes, and address some long-standing bugs.

Beyond the Liked Tracks and Player features, the team is excited about the potential of using Glance to build a wider range of widgets. The modular, component-based architecture of the Liked Tracks widget, with reusable elements like UserAvatar and Logo, offers a solid foundation for future development, promising to simplify processes from the start.

Get started building custom app widgets with Jetpack Glance

Rapidly develop and deploy widgets that keep your app visible and engaging with Glance.

This blog post is part of our series: Spotlight Week on Widgets, where we provide resources—blog posts, videos, sample code, and more—all designed to help you design and create widgets. You can read more in the overview of Spotlight Week: Widgets, which will be updated throughout the week.
