Tag Archives: hardware

New from Google Nest: The latest Cams and Doorbell are coming

Source: Official Google Australia Blog

Meet the new Nest Hub

Source: Official Google Australia Blog

Contactless Sleep Sensing in Nest Hub

People often turn to technology to manage their health and wellbeing, whether it is to record their daily exercise, measure their heart rate, or increasingly, to understand their sleep patterns. Sleep is foundational to a person’s everyday wellbeing and can be impacted by (and in turn, have an impact on) other aspects of one’s life — mood, energy, diet, productivity, and more.

As part of our ongoing efforts to support people’s health and happiness, today we announced Sleep Sensing in the new Nest Hub, which uses radar-based sleep tracking in addition to an algorithm for cough and snore detection. While not intended for medical purposes¹, Sleep Sensing is an opt-in feature that can help users better understand their nighttime wellness using a contactless bedside setup. Here we describe the technologies behind Sleep Sensing and discuss how we leverage on-device signal processing to enable sleep monitoring (comparable to other clinical- and consumer-grade devices) in a way that protects user privacy.

Soli for Sleep Tracking

Sleep Sensing in Nest Hub demonstrates the first wellness application of Soli, a miniature radar sensor that can be used for gesture sensing at various scales, from a finger tap to movements of a person’s body. In Pixel 4, Soli powers Motion Sense, enabling touchless interactions with the phone to skip songs, snooze alarms, and silence phone calls. We extended this technology and developed an embedded Soli-based algorithm that could be implemented in Nest Hub for sleep tracking.

Soli consists of a millimeter-wave frequency-modulated continuous wave (FMCW) radar transceiver that emits an ultra-low power radio wave and measures the reflected signal from the scene of interest. The frequency spectrum of the reflected signal contains an aggregate representation of the distance and velocity of objects within the scene. This signal can be processed to isolate a specified range of interest, such as a user’s sleeping area, and to detect and characterize a wide range of motions within this region, ranging from large body movements to sub-centimeter respiration.
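As a rough illustration of how an FMCW radar's frequency spectrum encodes distance: for a linear chirp of bandwidth B swept over duration T, a reflector at range R produces a beat frequency of 2RB/(cT). The sketch below applies that textbook relation in both directions. The function names and the chirp parameters in the usage note are illustrative assumptions, not Soli's actual configuration or API.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def beat_frequency_hz(range_m, bandwidth_hz, chirp_s):
    """Beat frequency produced by a reflector at range_m for a linear chirp."""
    return 2.0 * range_m * bandwidth_hz / (SPEED_OF_LIGHT * chirp_s)


def range_from_beat_m(beat_hz, bandwidth_hz, chirp_s):
    """Invert the relation above: recover range from a measured beat frequency."""
    return SPEED_OF_LIGHT * beat_hz * chirp_s / (2.0 * bandwidth_hz)


def range_resolution_m(bandwidth_hz):
    """Smallest range separation two reflectors need to land in different bins."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)
```

For example, with an assumed 1 GHz sweep, `range_resolution_m(1e9)` is about 0.15 m, which is why isolating a sleeping area is done by selecting range bins, while sub-centimeter chest motion has to be recovered from finer changes in the reflected signal rather than from range bins alone.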

In order to make use of this signal for Sleep Sensing, it was necessary to design an algorithm that could determine whether a person is present in the specified sleeping area and, if so, whether the person is asleep or awake. We designed a custom machine-learning (ML) model to efficiently process a continuous stream of 3D radar tensors (summarizing activity over a range of distances, frequencies, and time) and automatically classify each feature into one of three possible states: absent, awake, and asleep.

To train and evaluate the model, we recorded more than a million hours of radar data from thousands of individuals, along with thousands of sleep diaries, reference sensor recordings, and external annotations. We then leveraged the TensorFlow Extended framework to construct a training pipeline to process this data and produce an efficient TensorFlow Lite embedded model. In addition, we created an automatic calibration algorithm that runs during setup to configure the part of the scene on which the classifier will focus. This ensures that the algorithm ignores motion from a person on the other side of the bed or from other areas of the room, such as ceiling fans and swaying curtains.

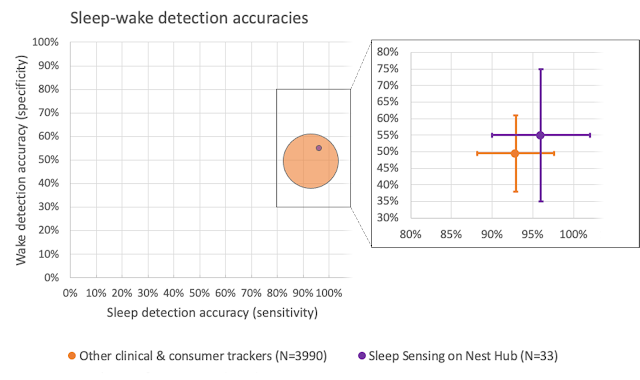

To validate the accuracy of the algorithm, we compared it to the gold standard for sleep-wake determination, the polysomnogram sleep study, in a cohort of 33 “healthy sleepers” (those without significant sleep issues, like sleep apnea or insomnia) across a broad age range (19-78 years of age). Sleep studies are typically conducted in clinical and research laboratories in order to collect various body signals (brain waves, muscle activity, respiratory and heart rate measurements, body movement and position, and snoring), which can then be interpreted by trained sleep experts to determine stages of sleep and identify relevant events. To account for variability in how different scorers apply the American Academy of Sleep Medicine’s staging and scoring rules, our study used two board-certified sleep technologists to independently annotate each night of sleep and establish a definitive ground truth.

We compared our Sleep Sensing algorithm’s outputs to the corresponding ground-truth sleep and wake labels for every 30-second epoch of time to compute standard performance metrics (e.g., sensitivity and specificity). While not a true head-to-head comparison, this study’s results can be compared against previously published studies in similar cohorts with comparable methodologies in order to get a rough estimate of performance. In “Sleep-wake detection with a contactless, bedside radar sleep sensing system”, we share the full details of these validation results, demonstrating sleep-wake estimation equivalent to or, in some cases, better than current clinical and consumer sleep tracking devices.
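The epoch-by-epoch comparison can be sketched as follows. This is a minimal example, assuming the common sleep-research convention that "asleep" is the positive class (so sensitivity measures how well sleep is detected and specificity how well wake is detected); the label strings and the function name are hypothetical, not taken from the study.

```python
def sleep_wake_metrics(predicted, reference):
    """Return (sensitivity, specificity) over paired 30-second epoch labels.

    Each label is "asleep" or "awake"; "asleep" is treated as the positive class.
    """
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must cover the same epochs")
    tp = fp = tn = fn = 0
    for pred, ref in zip(predicted, reference):
        if ref == "asleep":
            if pred == "asleep":
                tp += 1  # sleep epoch correctly detected
            else:
                fn += 1  # sleep epoch scored as wake
        else:
            if pred == "asleep":
                fp += 1  # wake epoch scored as sleep
            else:
                tn += 1  # wake epoch correctly detected
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


reference = ["asleep", "asleep", "asleep", "asleep", "awake", "awake"]
predicted = ["asleep", "asleep", "asleep", "awake", "awake", "asleep"]
sensitivity, specificity = sleep_wake_metrics(predicted, reference)
```

In this toy night, three of four sleep epochs and one of two wake epochs are scored correctly, giving a sensitivity of 0.75 and a specificity of 0.5.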

Understanding Sleep Quality with Audio Sensing

The Soli-based sleep tracking algorithm described above gives users a convenient and reliable way to see how much sleep they are getting and when sleep disruptions occur. However, to understand and improve their sleep, users also need to understand why their sleep is disrupted. To assist with this, Nest Hub uses its array of sensors to track common sleep disturbances, such as light level changes or uncomfortable room temperature. In addition to these, respiratory events like coughing and snoring are also frequent sources of disturbance, but people are often unaware of these events.

As with other audio-processing applications like speech or music recognition, coughing and snoring exhibit distinctive temporal patterns in the audio frequency spectrum, and with sufficient data an ML model can be trained to reliably recognize these patterns while simultaneously ignoring a wide variety of background noises, from a humming fan to passing cars. The model uses entirely on-device audio processing with privacy-preserving analysis, with no raw audio data sent to Google’s servers. A user can then opt to save the outputs of the processing (sound occurrences, such as the number of coughs and snore minutes) in Google Fit, in order to view personal insights and summaries of their night time wellness over time.

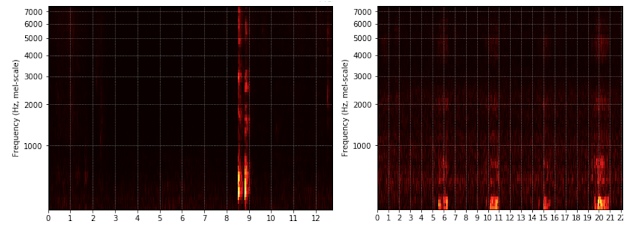

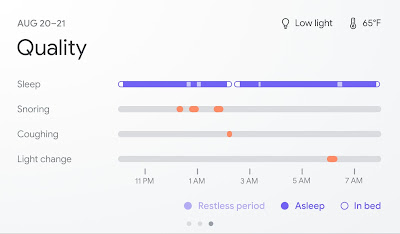

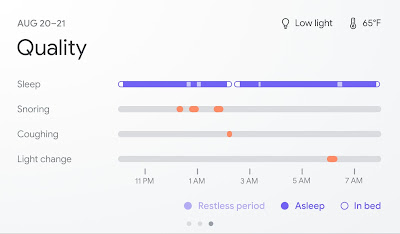

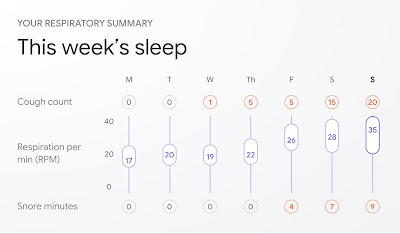

| The Nest Hub displays when snoring and coughing may have disturbed a user’s sleep (top) and can track weekly trends (bottom). |

To train the model, we assembled a large, hand-labeled dataset, drawing examples from the publicly available AudioSet research dataset as well as hundreds of thousands of additional real-world audio clips contributed by thousands of individuals.

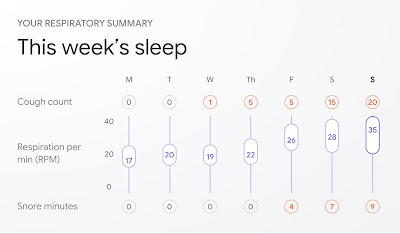

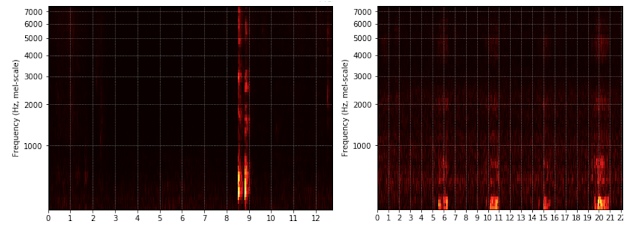

| Log-Mel spectrogram inputs comparing cough (left) and snore (right) audio snippets. |
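For readers unfamiliar with log-Mel features, the sketch below shows the frequency warping behind them, using the widely used formula mel = 2595 · log10(1 + f/700). Whether the Nest Hub model uses this exact variant, and which band count and frequency range it uses, is not stated in the post; the values in the usage note are assumptions for illustration.

```python
import math


def hz_to_mel(freq_hz):
    """Convert frequency in Hz to the mel scale (common 2595/700 variant)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges_hz(num_bands, fmin_hz, fmax_hz):
    """Edges of num_bands triangular filters, evenly spaced on the mel scale.

    Returns num_bands + 2 frequencies in Hz: each filter spans three
    consecutive edges (left, centre, right).
    """
    lo, hi = hz_to_mel(fmin_hz), hz_to_mel(fmax_hz)
    step = (hi - lo) / (num_bands + 1)
    return [mel_to_hz(lo + i * step) for i in range(num_bands + 2)]
```

Calling `mel_band_edges_hz(64, 0.0, 8000.0)` yields bands that are narrow at low frequencies and progressively wider at high ones. A log-Mel spectrogram is then the logarithm of the spectral energy summed within each band, computed frame by frame.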

When a user opts in to cough and snore tracking on their bedside Nest Hub, the device first uses its Soli-based sleep algorithms to detect when a user goes to bed. Once it detects that a user has fallen asleep, it then activates its on-device sound sensing model and begins processing audio. The model works by continuously extracting spectrogram-like features from the audio input and feeding them through a convolutional neural network classifier in order to estimate the probability that coughing or snoring is happening at a given instant in time. These estimates are analyzed over the course of the night to produce a report of the overall cough count and snoring duration and highlight exactly when these events occurred.
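One simple way to turn per-frame probabilities into a nightly summary is sketched below. The post does not describe the actual aggregation logic, so the fixed threshold, the frame duration, and both function names are assumptions made for illustration only.

```python
def count_events(frame_probs, threshold=0.5):
    """Count distinct events, where an event is a run of consecutive frames
    whose probability is at or above the threshold."""
    events = 0
    in_event = False
    for prob in frame_probs:
        above = prob >= threshold
        if above and not in_event:
            events += 1  # rising edge: a new event starts here
        in_event = above
    return events


def active_seconds(frame_probs, frame_s, threshold=0.5):
    """Total time covered by frames at or above the threshold."""
    return sum(1 for prob in frame_probs if prob >= threshold) * frame_s


cough_probs = [0.1, 0.9, 0.8, 0.2, 0.7, 0.1]
snore_probs = [0.6, 0.7, 0.2, 0.9]
cough_count = count_events(cough_probs)              # two separate runs
snore_seconds = active_seconds(snore_probs, 1.0)     # three 1-second frames
```

Counting runs rather than frames suits short, discrete events like coughs, while summing frame time suits sustained events like snoring, which matches the two quantities the report presents (cough count and snoring duration).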

Conclusion

The new Nest Hub, with its underlying Sleep Sensing features, is a first step in empowering users to understand their nighttime wellness using privacy-preserving radar and audio signals. We continue to research additional ways that ambient sensing and the predictive ability of consumer devices could help people better understand their daily health and wellness in a privacy-preserving way.

Acknowledgements

This work involved collaborative efforts from a multidisciplinary team of software engineers, researchers, clinicians, and cross-functional contributors. Special thanks to D. Shin for his significant contributions to this technology and blogpost, and Dr. Logan Schneider, visiting sleep neurologist affiliated with the Stanford/VA Alzheimer’s Center and Stanford Sleep Center, whose clinical expertise and contributions were invaluable to continuously guide this research. In addition to the authors, key contributors to this research from Google Health include Jeffrey Yu, Allen Jiang, Arno Charton, Jake Garrison, Navreet Gill, Sinan Hersek, Yijie Hong, Jonathan Hsu, Andi Janti, Ajay Kannan, Mukil Kesavan, Linda Lei, Kunal Okhandiar, Xiaojun Ping, Jo Schaeffer, Neil Smith, Siddhant Swaroop, Bhavana Koka, Anupam Pathak, Dr. Jim Taylor, and the extended team. Another special thanks to Ken Mixter for his support and contributions to the development and integration of this technology into Nest Hub. Thanks to Mark Malhotra and Shwetak Patel for their ongoing leadership, as well as the Nest, Fit, Soli, and Assistant teams we collaborated with to build and validate Sleep Sensing on Nest Hub.

1 Not intended to diagnose, cure, mitigate, prevent or treat any disease or condition.

Source: Google AI Blog

Haptics with Input: Using Linear Resonant Actuators for Sensing

As wearables and handheld devices decrease in size, haptics become an increasingly vital channel for feedback, be it through silent alerts or a subtle "click" sensation when pressing buttons on a touch screen. Haptic feedback, ubiquitous in nearly all wearable devices and mobile phones, is commonly enabled by a linear resonant actuator (LRA), a small linear motor that leverages resonance to provide a strong haptic signal in a small package. However, the touch and pressure sensing needed to activate the haptic feedback tends to depend on additional, separate hardware, which increases the price, size and complexity of the system.

In “Haptics with Input: Back-EMF in Linear Resonant Actuators to Enable Touch, Pressure and Environmental Awareness”, presented at ACM UIST 2020, we demonstrate that widely available LRAs can sense a wide range of external information, such as touch, tap and pressure, in addition to being able to relay information about contact with the skin, objects and surfaces. We achieve this with off-the-shelf LRAs by multiplexing the actuation with short pulses of custom waveforms that are designed to enable sensing using the back-EMF voltage. We demonstrate the potential of this approach to enable expressive discrete buttons and vibrotactile interfaces and show how the approach could bring rich sensing opportunities to integrated haptics modules in mobile devices, increasing sensing capabilities with fewer components. Our technique is potentially compatible with many existing LRA drivers, as they already employ back-EMF sensing for autotuning of the vibration frequency.

| Different off-the-shelf LRAs that work using this technique. |

Back-EMF Principle in an LRA

Inside the LRA enclosure is a magnet attached to a small mass, both moving freely on a spring. The magnet moves in response to the excitation voltage introduced by the voice coil. The motion of the oscillating mass produces a counter-electromotive force, or back-EMF, which is a voltage proportional to the rate of change of magnetic flux. A greater oscillation speed creates a larger back-EMF voltage, while a stationary mass generates zero back-EMF voltage.

| Anatomy of the LRA. |

Active Back-EMF for Sensing

Touching or making contact with the LRA during vibration changes the velocity of the interior mass, as energy is dissipated into the contact object. This works well with soft materials that deform under pressure, such as the human body. A finger, for example, absorbs different amounts of energy depending on the contact force as it flattens against the LRA. By driving the LRA with small amounts of energy, we can measure this phenomenon using the back-EMF voltage. Because leveraging the back-EMF behavior for sensing is an active process, the key insight that enabled this work was the design of a custom, off-resonance driver waveform that allows continuous sensing while minimizing vibrations, sound and power consumption.

| Touch and pressure sensing on the LRA. |

We measure back-EMF from the floating voltage between the two LRA leads, which requires disconnecting the motor driver briefly to avoid disturbances. While the driver is disconnected, the mass is still oscillating inside the LRA, producing an oscillating back-EMF voltage. Because commercial back-EMF sensing LRA drivers do not provide the raw data, we designed a custom circuit that is able to pick up and amplify small back-EMF voltage. We also generated custom drive pulses that minimize vibrations and energy consumption.
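The physical principle can be illustrated with a toy model: treat the LRA as a damped mass-spring system, let it ring down freely after the driver is disconnected, and take the back-EMF as proportional to the velocity of the mass. Contact with a finger is modeled as extra damping. This is only a sketch of the principle, not the circuit or the off-resonance drive waveform from the paper; the 175 Hz resonance, the damping ratios, and the EMF constant are assumed values.

```python
import math


def ringdown_peak_back_emf(damping_ratio, freq_hz=175.0, x0_m=1e-4,
                           settle_s=0.01, window_s=0.01, dt_s=1e-6,
                           emf_constant=1.0):
    """Peak back-EMF observed in a window after the driver is disconnected.

    Integrates x'' + 2*zeta*omega*x' + omega^2*x = 0 with semi-implicit
    Euler, starting from displacement x0_m at rest, and returns
    emf_constant * max|velocity| over [settle_s, settle_s + window_s).
    """
    omega = 2.0 * math.pi * freq_hz
    x, v = x0_m, 0.0
    peak_velocity = 0.0
    steps = int(round((settle_s + window_s) / dt_s))
    for i in range(steps):
        accel = -2.0 * damping_ratio * omega * v - omega * omega * x
        v += accel * dt_s  # update velocity first (semi-implicit Euler)
        x += v * dt_s      # then position, using the new velocity
        if i * dt_s >= settle_s:
            peak_velocity = max(peak_velocity, abs(v))
    return emf_constant * peak_velocity


free_emf = ringdown_peak_back_emf(damping_ratio=0.01)     # nothing touching
pressed_emf = ringdown_peak_back_emf(damping_ratio=0.05)  # finger pressing
```

In this model the pressed case returns a smaller value than the free case, because energy absorbed by the contact slows the mass and therefore lowers the voltage it induces.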

| Simplified schematic of the LRA driver and the back-EMF measurement circuit for active sensing. |

Applications

The behavior of the LRAs used in mobile phones is the same, whether they are on a table, on a soft surface, or hand held. This may cause problems, as a vibrating phone could slide off a glass table or emit loud and unnecessary vibrating sounds. Ideally, the LRA on a phone would automatically adjust based on its environment. We demonstrate our approach for sensing using the LRA back-EMF technique by wiring directly to a Pixel 4’s LRA, and then classifying whether the phone is held in hand, placed on a soft surface (foam), or placed on a table.

| Sensing phone surroundings. |

We also present a prototype that demonstrates how LRAs could be used as combined input/output devices in portable electronics. We attached two LRAs, one on the left and one on the right side of a phone, to act as buttons that provide tap, touch, and pressure sensing. They are also programmed to provide haptic feedback once a touch is detected.

| Pressure-sensitive side buttons. |

There are a number of wearable tactile aid devices, such as sleeves, vests, and bracelets. To transmit tactile feedback to the skin with consistent force, the tactor has to apply the right pressure; it cannot be too loose or too tight. Currently, the typical way to do so is through manual adjustment, which can be inconsistent and lacks measurable feedback. We show how the LRA back-EMF technique can be used to continuously monitor the fit of a bracelet device and prompt the user if it's too tight, too loose, or just right.

| Fit sensing bracelet. |

Evaluating an LRA as a Sensor

The LRA works well as a pressure sensor, because it has a quadratic response to the force magnitude during touch. Our method works for all five off-the-shelf LRA types that we evaluated. Because the typical current draw is only 4.27 mA, all-day sensing would only reduce the battery life of a Pixel 4 phone from 25 to 24 hours. The power consumption can be greatly reduced by using low-power amplifiers and employing active sensing only when needed, such as when the phone is active and interacting with the user.
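The battery-life figure can be checked with simple arithmetic. The sketch below assumes a battery capacity of roughly 2800 mAh for the Pixel 4, a figure that is not given in the post, and treats the 25-hour baseline as a constant average current draw.

```python
def hours_with_extra_load(capacity_mah, baseline_hours, extra_ma):
    """Battery life after adding a constant extra current draw.

    The baseline average draw is inferred from capacity / baseline_hours.
    """
    baseline_ma = capacity_mah / baseline_hours
    return capacity_mah / (baseline_ma + extra_ma)


# Assumed capacity of about 2800 mAh; 25 h baseline and 4.27 mA from the text.
hours = hours_with_extra_load(capacity_mah=2800.0, baseline_hours=25.0,
                              extra_ma=4.27)
```

Under these assumptions the baseline draw is 112 mA, and adding 4.27 mA gives about 24.1 hours, consistent with the stated drop from 25 to 24 hours.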

| Back-EMF voltage changes when pressure is applied with a finger. |

The challenge with active sensing is to minimize vibrations, so they are not perceptible when touching and do not produce audible sound. We optimize the active sensing to produce only 2 dB of sound and 0.45 m/s² peak-to-peak acceleration, which is just barely perceptible by finger and is quiet, in contrast to the regular 8.49 m/s².

Future Work and Conclusion

In the future, we plan to explore other sensing techniques; measuring the current, for example, could be an alternative approach. Machine learning could also potentially improve the sensing and provide more accurate classification of the complex back-EMF patterns. Our method could be developed further to enable closed-loop feedback with the actuator and sensor, which would allow the actuator to provide the same force, regardless of external conditions.

We believe that this work opens up new opportunities for leveraging existing ubiquitous hardware to provide rich interactions and closed-loop feedback haptic actuators.

Acknowledgments

This work was done by Artem Dementyev, Alex Olwal, and Richard Lyon. Thanks to Mathieu Le Goc and Thad Starner for feedback on the paper.

Source: Google AI Blog

Our best Chromecast yet, now with Google TV

Source: Official Google Australia Blog

Made for music, the new Nest Audio is here

Source: Official Google Australia Blog

Pixel 4a (5G) and Pixel 5 pack 5G speeds and so much more

- Better videos with Cinematic Pan: Pixel 4a with 5G and Pixel 5 come with Cinematic Pan, which gives your videos a professional look with ultrasmooth panning that’s inspired by the equipment Hollywood directors use.

- Night Sight in Portrait Mode: Night Sight currently gives you the ability to capture amazing low-light photos—and even the Milky Way with astrophotography. Now, these phones bring the power of Night Sight into Portrait Mode to capture beautifully blurred backgrounds in Portraits even in extremely low light.

- Portrait Light: Portrait Mode on the Pixel 4a with 5G and Pixel 5 lets you capture beautiful portraits that focus on your subject as the background fades into an artful blur. If the lighting isn’t right, your Pixel can drop in extra light to illuminate your subjects.

- Ultrawide lens for ultra awesome shots: With an ultrawide lens alongside the standard rear camera, you’ll be able to capture the whole scene. And thanks to Google’s software magic, the latest Pixels still get our Super Res Zoom. So whether you’re zooming in or zooming out, you get sharp details and breathtaking images.

- New editor in Google Photos: Even after you’ve captured your portrait, Google Photos can help you add studio-quality light to your portraits of people with Portrait Light, in the new, more helpful Google Photos editor.

Source: Official Google Australia Blog

Made for music, the new Nest Audio is here

This year, we’ve all spent a lot of time exploring things to do at home. Some of us gardened, and others baked. We tried at-home workouts, or took up art projects. But one thing that many—maybe all of us—did? Enjoyed a lot of music at home. I’ve spent so much more time listening to music during quarantine—bossa nova is my go-to soundtrack for doing the dishes and Lil Baby has become one of my favorite artists. But you might even prefer Mohammed Rafi or Ilayaraja.

To help provide a richer soundtrack to your time at home, we’re especially excited to introduce Nest Audio, our latest smart speaker made for music lovers.

A music machine

Nest Audio is 75 percent louder and has 50 percent stronger bass than the original Google Home—measurements of both devices were taken in controlled conditions. With a 19mm tweeter for consistent high frequency coverage and clear vocals and a 75mm mid-woofer that really brings the bass, this smart speaker is built to deliver a rich musical experience.

Nest Audio’s sound is full, clear, and natural. We completed more than 500 hours of tuning to ensure balanced lows, mids and highs so nothing is lacking or overbearing. The bass is significant and the vocals have depth, which makes Nest Audio sound great across genres: classical, R&B, pop and more. The custom-designed tweeter allows each musical detail to come through, and we optimized the grill, fabric and materials so that you can enjoy the audio without distortion.

Our goal was to ensure that Nest Audio stayed faithful to what the artist intended when they were in the recording studio. We minimized the use of compressors to preserve dynamic range, so the auditory contrast in the original production is preserved—the quiet parts are delicate and subtle, and the loud ones are more dramatic and powerful.

Nest Audio also adapts to your home. Our Media EQ feature enables Nest Audio to automatically tune itself to whatever you’re listening to: music, podcasts, audiobooks or even a response from Google Assistant. And Ambient IQ lets Nest Audio also adjust the volume of Assistant, news, podcasts and audiobooks based on the background noise in your home, so you can hear the weather forecast over a noisy vacuum cleaner.

Whole home audio

If you have a Google Home, Nest Mini or even a Nest Hub, you can easily make Nest Audio the center of your whole home sound system. In my living room, I’ve connected two Nest Audio speakers as a stereo pair for left and right channel separation. I also have a Nest Mini in my bedroom and a Nest Hub in the entryway. These devices are grouped so that I can blast the same song on all of them when I have my daily dance party.

With our stream transfer feature, I can move music from one device to the other with just my voice*. I can even transfer music or podcasts from my phone when I walk in the door. Just last month, we launched multi-room control, which allows you to dynamically group multiple cast-enabled Nest devices in real time.

The Google Assistant you love

Google Assistant, available in Hindi and English, helps you tackle your day, enjoy your entertainment and control compatible smart home brands like Philips Hue, TP-Link and more. In fact, people have already set up more than 100 million devices to work with Google Assistant. Plus, if you’re a YouTube Music or Spotify Premium subscriber, you can say, “Ok Google, recommend some music” and Google Assistant will offer a variety of choices from artists and genres that you like as well as others that are similar.

Differentiated by design

Typically, a bigger speaker equals bigger sound, but Nest Audio has a really slim profile—so it fits anywhere in the home. In order to maximize audio output, we custom-designed quality drivers and housed them in an enclosure that helps it squeeze out every bit of sound possible.

Nest Audio will be available in India in two colors: Chalk and Charcoal. Its soft, rounded edges blend in with your home’s decor, and its minimal footprint doesn't take up too much space on your shelf or countertop.

We’re continuing our commitment to sustainability with Nest Audio. It’s covered in the same sustainable fabric that we first introduced with Nest Mini last year, and the enclosure (meaning the fabric, housing, foot, and a few smaller parts) is made from 70 percent recycled plastic.

Nest Audio will be available in India on Flipkart and at other retail outlets later this month. Stay tuned for more information on pricing and offers, which will be announced closer to the sale date.

Posted by Mark Spates, Product Manager, Google Nest

*currently only available in English in India