With Android reaching more devices, from phones to foldables to Chromebooks, building apps that seamlessly adapt to different screen sizes and types has never been more crucial. At this year’s Google I/O, we covered building adaptable apps, increasing user productivity with key inputs like keyboard and stylus, and scaling games across surfaces.

Building adaptive apps

Throughout Google I/O 2024, we’ve talked a lot about how to build adaptive apps. With this shift, some of you may be asking “what makes an app truly adaptive?”

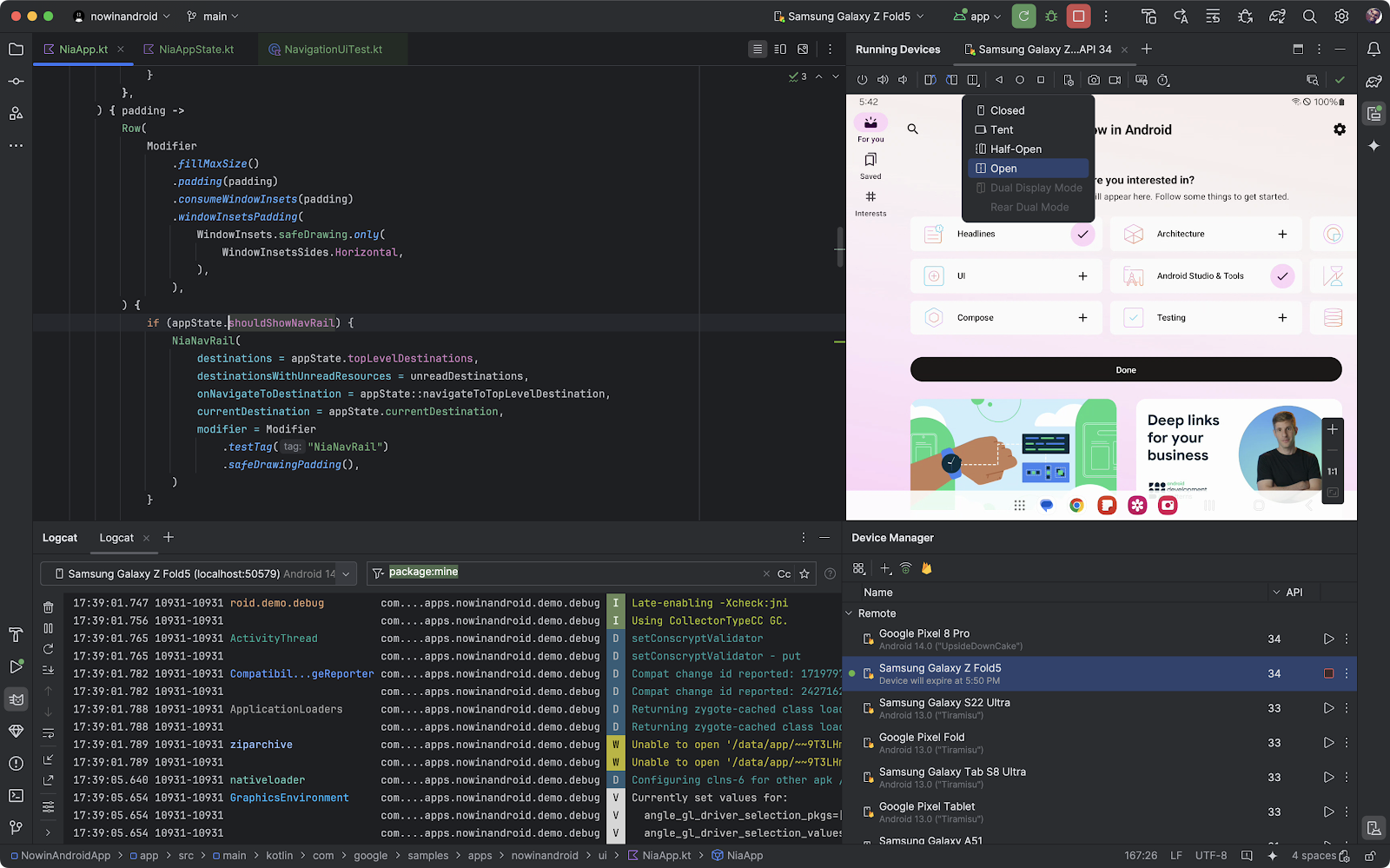

Adaptive apps make full use of the screen they run on, whether that is a phone, a tablet, or a foldable. They adjust their layout in response to conditions such as changes to the window size, device posture, or font size.

Adaptive apps dynamically adjust their layouts by swapping components and showing or hiding content based on the available window size, rather than simply stretching UI elements. With ever-evolving form factors and screen sizes, adapting your app to any window size unlocks the seamless experiences users demand today.

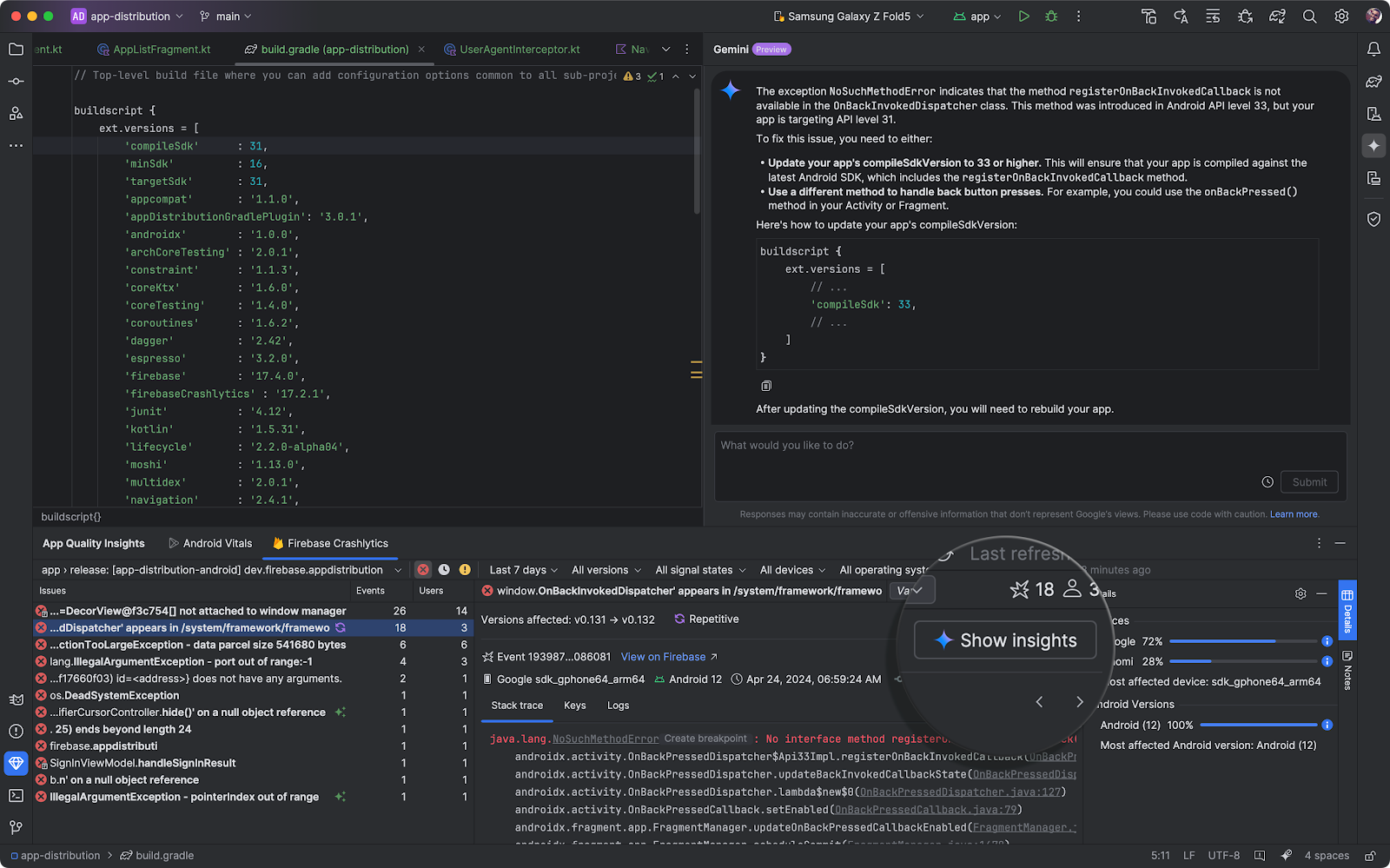

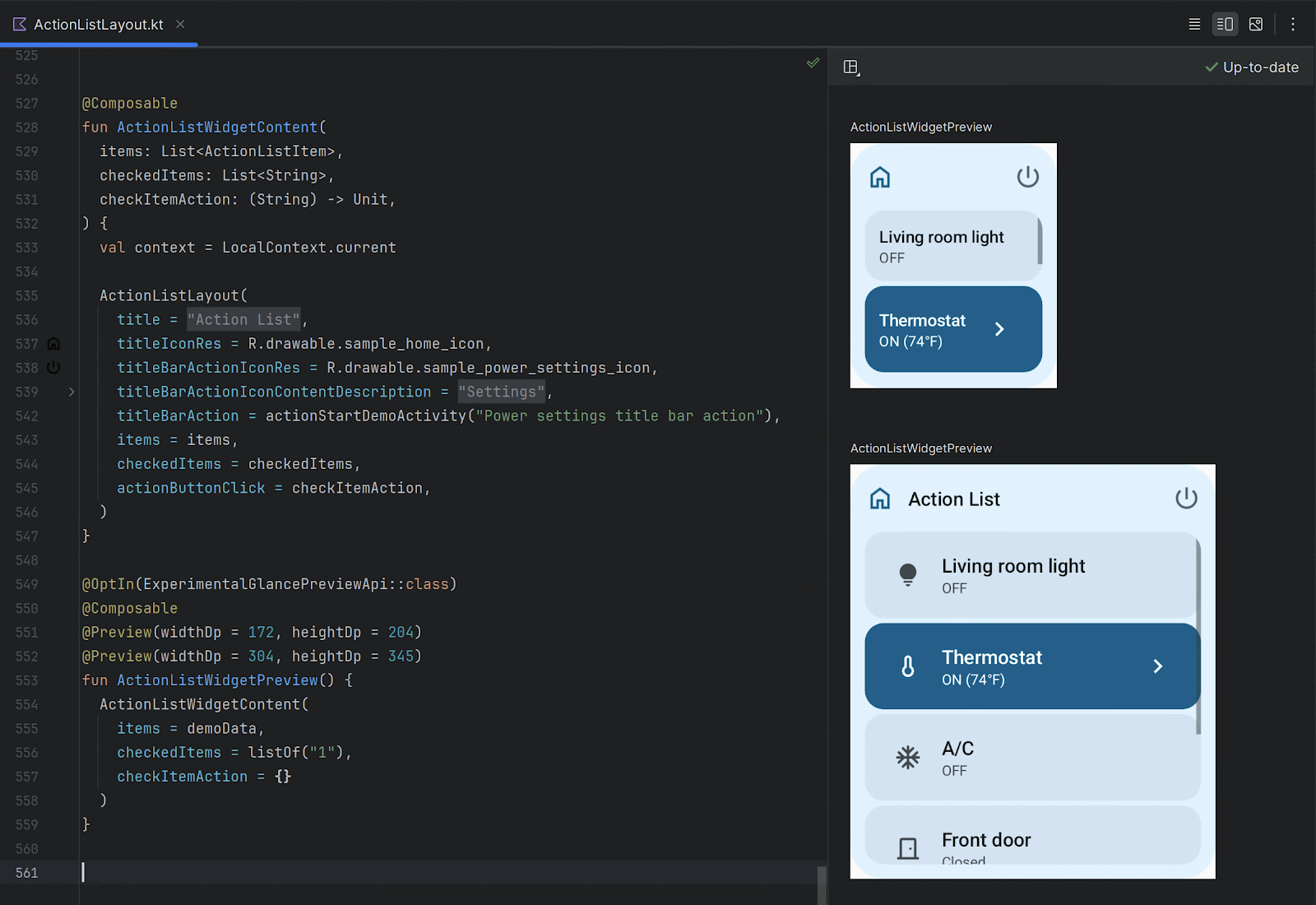

Now that you know what they are, how do you get started building adaptive apps? We strongly recommend using WindowSizeClasses as the opinionated breakpoints for your UI and we’re bringing you a variety of new Compose APIs that make it easier to implement common adaptive layouts.
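To make the breakpoint idea concrete, here is a minimal, framework-free sketch of how a window width in dp maps to a width size class and how that class might drive layout decisions. The 600dp and 840dp thresholds are the documented width breakpoints. The `WidthSizeClass` and `AdaptiveLayoutPolicy` names and the navigation and pane-count mapping are illustrative assumptions, not the Compose or WindowManager API. In a real app you would read the size class from the library instead of computing it yourself.

```java
/** Width buckets following the documented window size class breakpoints. */
enum WidthSizeClass {
    COMPACT, MEDIUM, EXPANDED;

    /** Below 600dp is compact, 600dp up to (but not including) 840dp is medium, 840dp and up is expanded. */
    static WidthSizeClass fromWidthDp(float widthDp) {
        if (widthDp < 0f) {
            throw new IllegalArgumentException("width must be non-negative");
        }
        if (widthDp < 600f) return COMPACT;
        if (widthDp < 840f) return MEDIUM;
        return EXPANDED;
    }
}

/** Hypothetical policy: swap components by size class instead of stretching them. */
final class AdaptiveLayoutPolicy {
    enum Navigation { BOTTOM_BAR, RAIL, DRAWER }

    /** Illustrative mapping only; pick whatever fits your app's design. */
    static Navigation navigationFor(WidthSizeClass sizeClass) {
        switch (sizeClass) {
            case COMPACT: return Navigation.BOTTOM_BAR;
            case MEDIUM:  return Navigation.RAIL;
            default:      return Navigation.DRAWER;
        }
    }

    /** Show list and detail side by side only when the window is expanded. */
    static int paneCountFor(WidthSizeClass sizeClass) {
        return sizeClass == WidthSizeClass.EXPANDED ? 2 : 1;
    }
}
```

Because the decision depends on the current window width rather than the device type, the same logic covers split-screen, freeform windows, and unfolding a foldable.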

Available now in beta, the new Compose adaptive layout libraries help you to make your UI look good across window sizes. From navigation UI to list/detail and supporting pane style layouts, we’re providing composables to make building an adaptive app easier than ever.

Check out these technical sessions to learn more:

Or get started by checking out the new documentation!

Increase user productivity on tablets and foldables

Tablets and foldables are great for consuming content, but even better for creating it. That’s why supporting input devices like the stylus for more productive experiences is especially important for your users.

Improving your app’s stylus experience

Stylus users on Android can remain more productive with new support for handwriting in text fields. You no longer need to put down your stylus when you need to input some text into a text field. Stylus handwriting and gestures will work automatically if you are using standard text components including BasicTextField in Compose (1.7), EditText in Views and text input elements in WebView.

For stylus users, low latency is key to having a responsive inking experience. Reducing latency minimizes the amount of delay between when you move your stylus and when the ink appears on the screen, giving users a more authentic pen-to-paper experience. Based on developer feedback, we’ve introduced new APIs to make low latency easier for apps that use Canvas for rendering.

A great example of these libraries in practice is Infinite Painter, where the team reduced their inking latency by 5x.

Enhancing productivity with keyboard and mouse support

Alongside the stylus, another essential input device is the physical keyboard, which really shines when users need to enter a lot of text, such as long emails, documents, or blog posts. As a developer, making it easy for users to navigate your app with the keyboard can set your app apart.

Users should be able to navigate to all elements in your app with just their keyboard. It’s also important that your app supports frequently used keyboard shortcuts. To help users discover them, consider adding your shortcuts as entries in the system Keyboard Shortcuts Helper.

To improve the experience for keyboard, mouse, trackpad, and stylus users, we also recommend implementing hover states and keyboard focus. All interactive components should have a hover state and should show a visual cue to indicate which component has the keyboard focus.

You can learn more about these improvements in the technical session and in our updated documentation and codelab.

Enhance productivity with pane expansion

Although larger window sizes allow showing multiple panes of content at once, users often want to focus on one specific pane at a time. By following the new guidance for pane expansion, you can give users the choice to see both panes at once or resize them as they desire.

Google Calendar has added pane expansion to their supporting pane layout on expanded width window sizes, allowing users to resize to see more details of an event, or more information in their schedule.

This feature will be supported by activity embedding in Android 15, and is also planned to be supported by the material3-adaptive library.
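As a rough illustration of the behavior described above, the sketch below turns a drag-handle position into two pane widths, keeps each pane at a usable minimum width, and collapses a pane when the handle is dragged close to an edge. Everything here is a hypothetical model for reasoning about the interaction: the `PaneSplit` class, the `fromDrag` method, and the collapse rule are assumptions, not the activity embedding or material3-adaptive API.

```java
/** Hypothetical result of a pane-expansion drag: widths of the two panes in the same unit as the input. */
final class PaneSplit {
    final int firstWidth;
    final int secondWidth;

    PaneSplit(int firstWidth, int secondWidth) {
        this.firstWidth = firstWidth;
        this.secondWidth = secondWidth;
    }

    /**
     * Computes pane widths from the drag handle's x position.
     * Assumed rule: dragging within half a minimum pane width of an edge
     * collapses the pane on that side; otherwise both panes keep at least minPaneWidth.
     */
    static PaneSplit fromDrag(int totalWidth, int dragX, int minPaneWidth) {
        if (totalWidth <= 0 || minPaneWidth < 0) {
            throw new IllegalArgumentException("invalid dimensions");
        }
        // Keep the handle inside the window.
        int x = Math.max(0, Math.min(dragX, totalWidth));

        // Not enough room for two usable panes: show only the pane the user dragged toward.
        if (totalWidth < 2 * minPaneWidth) {
            return x < totalWidth / 2
                    ? new PaneSplit(0, totalWidth)
                    : new PaneSplit(totalWidth, 0);
        }
        // Near an edge: collapse the pane on that side so the other fills the window.
        if (x < minPaneWidth / 2) return new PaneSplit(0, totalWidth);
        if (x > totalWidth - minPaneWidth / 2) return new PaneSplit(totalWidth, 0);

        // Otherwise clamp so neither pane drops below the minimum width.
        int first = Math.max(minPaneWidth, Math.min(x, totalWidth - minPaneWidth));
        return new PaneSplit(first, totalWidth - first);
    }
}
```

For example, with a 1000-wide window and a 300 minimum, a drag to 200 yields a 300/700 split, while a drag to 100 collapses the first pane entirely.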

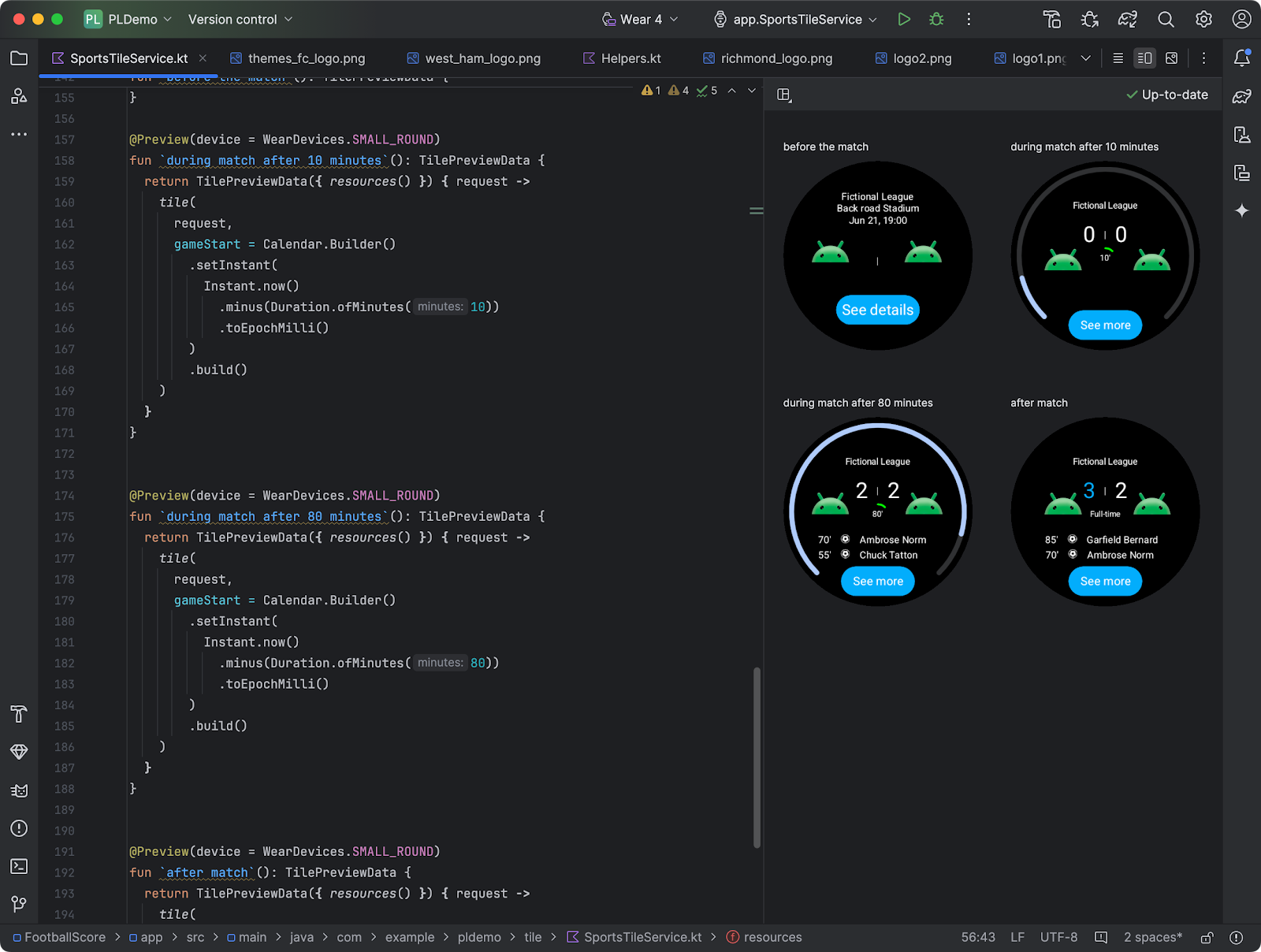

Building great games across surfaces

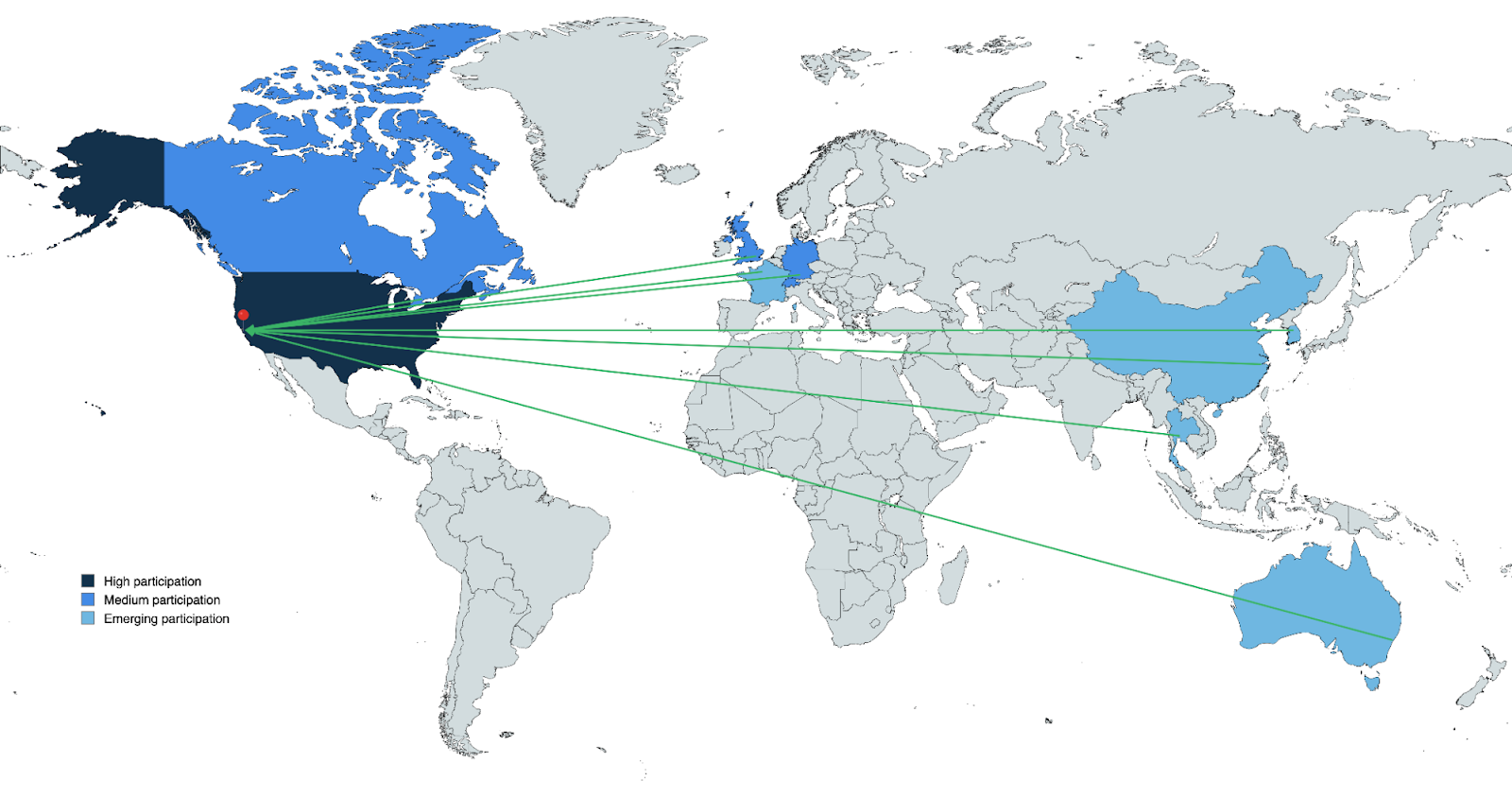

Gamers appreciate premium, immersive experiences, and with Android tablets, foldables, desktops, and Chromebooks, your game can reach more players than ever. Creating meaningful experiences on each of these devices is key to ensuring your game stands out.

During this year’s Google I/O, we are highlighting the best practices for rendering, managing assets, and windowing in resizable contexts to build quality experiences and impress your players across form factors.

With the wide variety of devices and hardware configurations, provide configurable graphics options for your players. And, for the best experience right out of the box, define default graphics options for different devices. Additionally, consider trade-offs like storage size, performance, and compatibility across platforms when deciding which texture compression formats to use.
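One way to combine configurable options with sensible defaults is sketched below: the game picks a default preset from a couple of device characteristics, and an explicit player choice always wins. The `GraphicsDefaults` class, the two inputs, and every threshold are hypothetical placeholders. Real defaults should come from profiling your own game on representative devices.

```java
/** Hypothetical selection of a default graphics preset, with a player override. */
final class GraphicsDefaults {
    enum Preset { LOW, MEDIUM, HIGH }

    /**
     * Picks a default preset. All thresholds are illustrative assumptions:
     * under 4 GB of RAM starts at LOW; under 8 GB, or a very high resolution
     * display (longest side above 2560 px), starts at MEDIUM; otherwise HIGH.
     */
    static Preset defaultFor(long totalRamMb, int longestSidePx) {
        if (totalRamMb < 4096) return Preset.LOW;
        if (totalRamMb < 8192 || longestSidePx > 2560) return Preset.MEDIUM;
        return Preset.HIGH;
    }

    /** The player's saved choice (null if they never changed it) overrides the device default. */
    static Preset effective(Preset playerChoice, long totalRamMb, int longestSidePx) {
        return playerChoice != null ? playerChoice : defaultFor(totalRamMb, longestSidePx);
    }
}
```

Keeping the default and the override separate means you can retune the thresholds later without touching players' saved settings.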

Large screen devices support different window sizes, which can change with multi-window mode, orientation changes, and folding or unfolding. By default, Android provides a compatibility mode, but for the most seamless experience, declare and handle configuration events. Display cutouts, hinges, and even system UI can also occlude your game window, so support edge-to-edge windowing and ensure no key game content is occluded. If you really want to take your game to the next level, consider using the Jetpack WindowManager library to support dynamic layouts on foldable devices.

To provide smoother gameplay and reduce input latency, consider using frame pacing. Also consider enabling wide color gamut support so that vivid colors are rendered properly, while maximizing contrast and brightness on large screen HDR displays to improve realism and immersion for your players.

With the changing mobile landscape and the transition from OpenGL to Vulkan, make sure you handle swapchain recreation after window configuration changes. If you rely on device-specific hardware features, such as host-visible device-local memory, provide fallback implementations for other platforms.
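The memory fallback mentioned above can be sketched independently of any graphics binding. The example below models each memory type as a bitmask of property flags, prefers a type that is both device-local and host-visible, and falls back to a host-visible, host-coherent type when the preferred combination is not available. The flag values match the corresponding Vulkan `VK_MEMORY_PROPERTY_*` bits, but the `MemoryTypeSelector` class and its methods are hypothetical. In real code the flags and the allowed-type mask would come from `vkGetPhysicalDeviceMemoryProperties` and `VkMemoryRequirements`.

```java
/** Hypothetical memory type selection with a fallback, modeled on Vulkan property flags. */
final class MemoryTypeSelector {
    // Values mirror VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT, HOST_VISIBLE_BIT, and HOST_COHERENT_BIT.
    static final int DEVICE_LOCAL = 0x1;
    static final int HOST_VISIBLE = 0x2;
    static final int HOST_COHERENT = 0x4;

    /**
     * Returns the index of the first memory type that is permitted by allowedTypeBits
     * (bit i set means type i is allowed) and has every required flag, or -1 if none match.
     */
    static int find(int[] memoryTypeFlags, int allowedTypeBits, int requiredFlags) {
        for (int i = 0; i < memoryTypeFlags.length; i++) {
            boolean allowed = (allowedTypeBits & (1 << i)) != 0;
            if (allowed && (memoryTypeFlags[i] & requiredFlags) == requiredFlags) {
                return i;
            }
        }
        return -1;
    }

    /** Prefers host-visible device-local memory; otherwise falls back to plain host-visible memory. */
    static int findUploadMemoryType(int[] memoryTypeFlags, int allowedTypeBits) {
        int preferred = find(memoryTypeFlags, allowedTypeBits, DEVICE_LOCAL | HOST_VISIBLE);
        if (preferred >= 0) {
            return preferred;
        }
        // Fallback path: the caller would typically stage data here and copy it to device-local memory.
        return find(memoryTypeFlags, allowedTypeBits, HOST_VISIBLE | HOST_COHERENT);
    }
}
```

A result of -1 means neither option is permitted for the resource, which the caller should treat as an error rather than assume an index.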

Learn more about the rendering best practices and how to level up your game across surfaces by tuning into this technical session and be sure to check out our multiplatform optimization guide.

Get started building adaptable apps from phones to tablets and foldables

You can get started building adaptable apps that look great across tablets, foldables, Chromebooks and more by checking out the “Building adaptive Android apps” technical session or heading to the large screens gallery for content tailored to your specific app type - from productivity apps to games… and more!

Posted by Fahd Imtiaz, Product Manager, Android Developer

