Posted by Breana Tate - Developer Relations Engineer, Android Health

Android Health’s mission is to enable billions of Android users to be healthier through access, storage, and control of their health, fitness, and safety data. To further this mission, we offer two primary APIs for developers, Health Connect and Health Services on Wear OS, which are both used by a growing number of apps on Android and Wear OS.

AI capabilities unlock amazing and unique use cases, but to be ready to deliver the most value to your users at the right time, you need a strong foundation of data. Our updates this year focus on helping you build up this data foundation, with support for more data types, new ways to access data, and additional methods of getting timely data updates when you need them.

Changes to the Google Fit APIs

We recently shared that Google Fit developer services will be transitioning to become a core part of the Android Health platform. As part of this, the Google Fit APIs, including the REST API, will remain available until June 30, 2025.

Health Connect is the recommended solution for storing and sharing health and fitness data on Android phones. Beginning with Android 14, it’s available by default in Settings. On pre-Android 14 devices, it’s available for download from the Play Store. Health Connect lets your app connect with hundreds of apps using a single API integration. To date, over 500 apps have integrated with Health Connect and have unlocked deeper insights for their users. Check out the featured list to see some of the apps that have integrated.

We’re excited to continue supporting the Google Fit Android Recording API functionality through the Recording API on mobile, which allows developers to record steps, and soon distance and calories, in a power-efficient manner. In contrast to the Google Fit Android Recording API, the Recording API on mobile does not store data in the cloud by default, and does not require Google Sign-In. The API is designed to make migrating from the Fit Recording API effortless. Keep an eye on d.android.com/health-and-fitness for upcoming documentation.

Upcoming capabilities from Health Connect

Health Connect will soon add support for background reads and history reads.

Background reads will enable developers to read data from Health Connect while their app is in the background, meaning that you can keep data up-to-date without relying on the user to open your app. This is a departure from current behavior, where apps can only read from Health Connect while the app is in the foreground or running a foreground service.

History reads will give users the option to grant apps access to all historical data in Health Connect, not just the past 30 days.

With both background reads and history reads, users are in control. Both capabilities require developers to declare the respective permissions, and users must approve the permission requests before developers can make use of the data protected by those permissions. Even after granting approval, users have the option of revoking access at any time from within Health Connect settings.
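The access rules described above can be summarized in a small model. This is only a sketch under stated assumptions: `GrantedAccess`, `canReadNow`, and `earliestReadableTime` are hypothetical names that are not part of the Health Connect API, and the 30-day window is simplified to "30 days before now", following the wording in this post.

```kotlin
import java.time.Duration
import java.time.Instant

// Hypothetical model of the access rules described in this post.
// These names are illustrative and are NOT part of the Health Connect API.
data class GrantedAccess(
    val backgroundReads: Boolean = false,
    val historyReads: Boolean = false,
)

// Default look-back window, taken from the text ("the past 30 days").
val DEFAULT_HISTORY_WINDOW: Duration = Duration.ofDays(30)

// Foreground reads are always possible (given normal read permissions);
// background reads additionally need the user-granted background permission.
fun canReadNow(appInForeground: Boolean, access: GrantedAccess): Boolean =
    appInForeground || access.backgroundReads

// Earliest timestamp the app may query: unbounded with history access,
// otherwise limited to the default window ending at `now`.
fun earliestReadableTime(now: Instant, access: GrantedAccess): Instant =
    if (access.historyReads) Instant.MIN else now.minus(DEFAULT_HISTORY_WINDOW)

fun main() {
    val now = Instant.parse("2024-05-15T12:00:00Z")
    val defaults = GrantedAccess()
    val everything = GrantedAccess(backgroundReads = true, historyReads = true)

    println(canReadNow(appInForeground = false, access = defaults))   // prints false
    println(canReadNow(appInForeground = false, access = everything)) // prints true
    println(earliestReadableTime(now, defaults)) // prints 2024-04-15T12:00:00Z
}
```

Because users can revoke either permission at any time, an app built on this model would need to re-check what has been granted before each read instead of caching the result.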

Both features will be released later this year, so stay tuned to learn how to add support to your apps!

Updates to Health Services on Wear OS

Health Services on Wear OS is a set of APIs that makes it simple to create power-efficient health and fitness experiences on Wear OS.

In Wear OS 5, we’re introducing two new features:

- New data types for running

- Support for debounced goals

New Data Types for Running

Starting with Wear OS 5, Health Services will support new data types for running. These data types can help provide additional insights on running form and economy.

The full list of new advanced running metrics is:

- Ground Contact Time

- Stride Length

- Vertical Oscillation

- Vertical Ratio

As with all data types supported by Health Services on Wear OS, be sure to check exercise capabilities so that your app only uses metrics that are supported on the devices running your app, creating a smoother experience for users. This is especially important for Wear OS, as there is a strong ecosystem of devices for consumers to choose from, and they don’t always support the same metrics.

    // Checking if the device supports the RUNNING exercise and confirming the
    // data types that are supported.
    suspend fun getExerciseCapabilities(): ExerciseTypeCapabilities? {
        val capabilities = exerciseClient.getCapabilitiesAsync().await()
        return if (ExerciseType.RUNNING in capabilities.supportedExerciseTypes) {
            capabilities.getExerciseTypeCapabilities(ExerciseType.RUNNING)
        } else {
            null
        }
    }

    . . .

    // Checking whether the data types that we want to use are supported by
    // the RUNNING exercise on this device.
    val dataTypes = setOf(
        DataType.HEART_RATE_BPM_STATS,
        DataType.CALORIES_TOTAL,
        DataType.DISTANCE_TOTAL,
        DataType.GROUND_CONTACT_TIME,
        DataType.VERTICAL_OSCILLATION
    ).intersect(capabilities.supportedDataTypes)

Checking exercise capabilities with Health Services on Wear OS

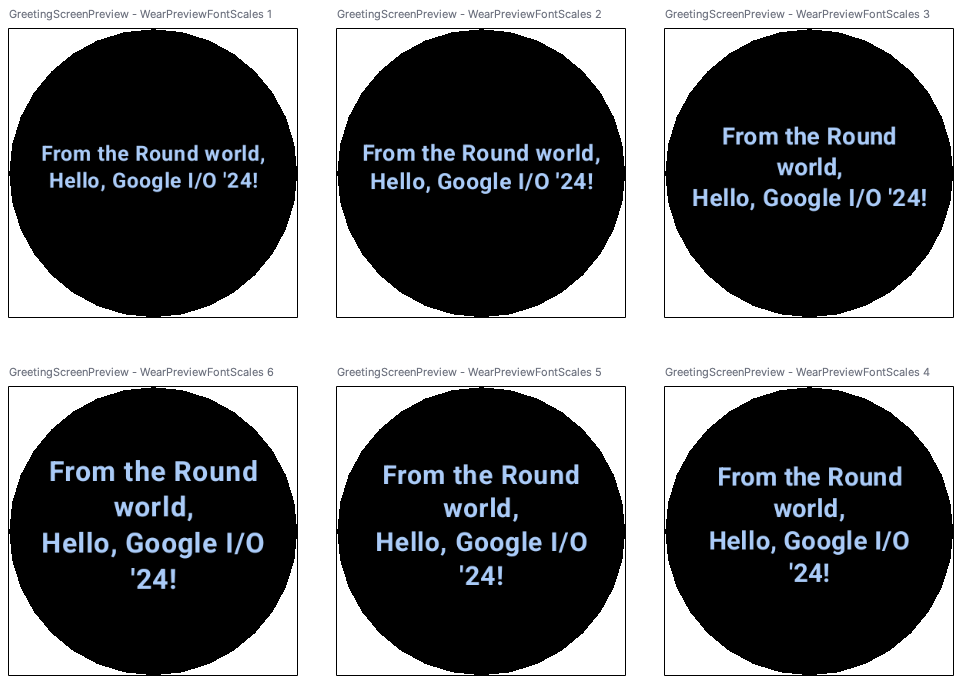

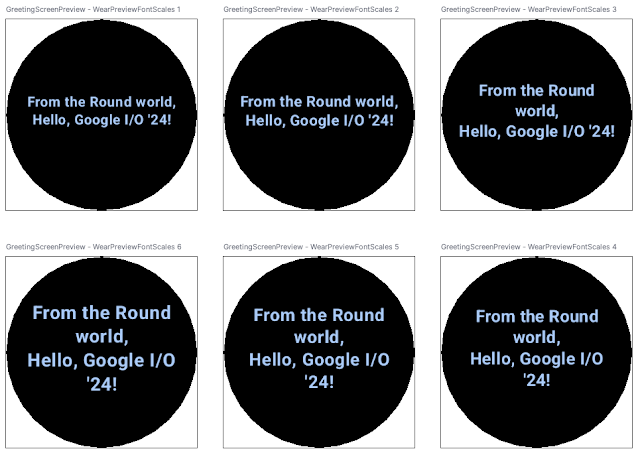

To make this easy, we’ve introduced a sensor panel, available starting in Android Studio Koala Feature Drop, which is currently in Canary. You can use the panel to test your app across a variety of device capabilities, experimenting with situations where metrics like heart rate or distance aren’t available.

The Health Services sensor panel

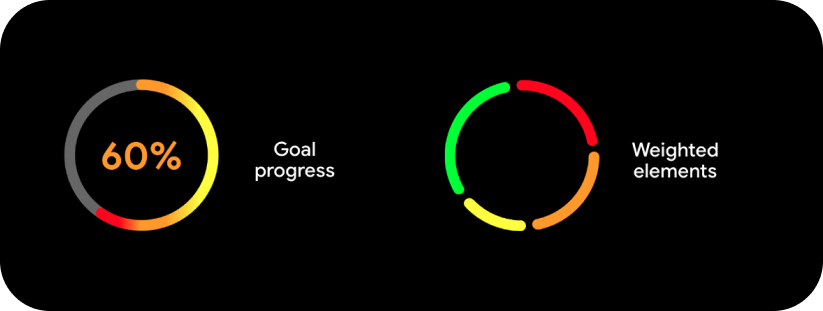

Support for debounced goals

Second, Health Services on Wear OS will soon support debounced goals for instantaneous metrics. These include metrics like heart rate, distance, and speed, for which users want to maintain a specified threshold or range throughout an exercise.

Debounced goals prevent the same event from being emitted multiple times (once every time the condition is true) over a short time period. Instead, events are emitted only if the threshold has been continuously exceeded for a configurable number of seconds. You can also prevent events from being emitted immediately after goal registration.

This support comes from two new settings that help you time goal alerts for instantaneous metrics, duration at threshold and initial delay:

- Duration at threshold is the amount of uninterrupted time the user must stay past the specified threshold before Health Services sends an alert event.

- Initial delay is the amount of time that must pass after goal registration before your app is notified.

Together, these features reduce the number of false positives and repeated alerts surfaced to users if your app lets users set fitness goals or targets.

| | Duration at Threshold | Initial Delay |
|---|---|---|
| Definition | The amount of uninterrupted time the user must stay past the specified threshold before Health Services sends an alert event. | The amount of time that must pass after goal registration before your app is notified. |
| Purpose | Prevent false positives. | Prevent repeatedly notifying the user. |
| Counter starts | As soon as the user crosses the specified threshold | As soon as the monitoring request is set |

The differences between Duration at Threshold and Initial Delay

A common use case for debounced goals involves heart rate zones. Heart rate continuously fluctuates throughout an exercise, especially during cardio-intensive activities. Without support for debouncing, an app might get many alerts in a short period of time, such as each time the user’s heart rate rises above or dips below the target range.

By introducing an initial delay, you can tell Health Services to send a goal alert only after a specified time period has passed; think of this as an adjustment period. By adding a duration at threshold, you can take this customization further and specify how long the user must stay inside (or outside) the specified range before the goal is triggered. In practice, this would be like waiting for the user to be out of their target heart rate range for 15 seconds before your app lets them know to increase or decrease their intensity.
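To make the timing concrete, here is a minimal, self-contained simulation of how the two settings could interact for a heart rate threshold. It is a sketch, not the Health Services API: `Sample`, `DebouncedThresholdMonitor`, and `alertTimes` are hypothetical names. It also assumes the two counters run independently (initial delay from registration, duration at threshold from the moment the threshold is crossed) and that at most one alert fires per uninterrupted run above the threshold.

```kotlin
// Hypothetical, self-contained model of debounced goal timing.
// None of these names come from the Health Services API.
data class Sample(val elapsedSeconds: Int, val heartRateBpm: Double)

class DebouncedThresholdMonitor(
    private val thresholdBpm: Double,
    private val initialDelaySeconds: Int,
    private val durationAtThresholdSeconds: Int,
) {
    // Time at which the current uninterrupted run above the threshold began.
    private var runStartSeconds: Int? = null

    // True once an alert has fired for the current run, to avoid repeats.
    private var alertedForRun = false

    /** Returns true when this sample should trigger an alert. */
    fun onSample(sample: Sample): Boolean {
        if (sample.heartRateBpm <= thresholdBpm) {
            // Dropping back to or under the threshold resets the counter.
            runStartSeconds = null
            alertedForRun = false
            return false
        }
        val runStart = runStartSeconds ?: sample.elapsedSeconds.also { runStartSeconds = it }
        val pastInitialDelay = sample.elapsedSeconds >= initialDelaySeconds
        val heldLongEnough = sample.elapsedSeconds - runStart >= durationAtThresholdSeconds
        if (pastInitialDelay && heldLongEnough && !alertedForRun) {
            alertedForRun = true
            return true
        }
        return false
    }
}

// Feeds samples through the monitor in order and collects the alert times.
fun alertTimes(monitor: DebouncedThresholdMonitor, samples: List<Sample>): List<Int> =
    samples.mapNotNull { s -> if (monitor.onSample(s)) s.elapsedSeconds else null }

fun main() {
    // One made-up heart rate reading every 5 seconds.
    val heartRates = listOf(170, 150, 165, 170, 172, 168, 171, 150, 165, 166, 167, 168, 169)
    val samples = heartRates.mapIndexed { i, bpm -> Sample(i * 5, bpm.toDouble()) }
    val monitor = DebouncedThresholdMonitor(
        thresholdBpm = 160.0,
        initialDelaySeconds = 30,
        durationAtThresholdSeconds = 15,
    )
    println(alertTimes(monitor, samples)) // prints [30, 55]
}
```

Without debouncing, every reading above 160 bpm in this series would count as a match. With a 30-second initial delay and a 15-second duration at threshold, the same data produces only two alerts, one per sustained run above the threshold.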

Check out the technical session, “Building Adaptable Experiences with Android Health” to see this in action!

Your app’s training partner

The Health & Fitness Developer Center is your one-stop shop for building health & fitness apps on Android! Visit the site for documentation, design inspiration, case studies, and more to learn how to build apps on mobile and Wear OS.

We’re excited to see the Health and Fitness experiences you continue to build on Android!
