Posted by Anuj Gosalia

A little over a year ago, we introduced ARCore: a platform for building augmented reality (AR) experiences. Developers have been using it to create thousands of ARCore apps that help people with everything from fixing their dishwashers, to shopping for sunglasses, to mapping the night sky. Since last I/O, we've quadrupled the number of ARCore enabled devices to an estimated 400 million.

Today, at I/O we introduced updates to Augmented Images and Light Estimation - features that let you build more interactive, and realistic experiences. And to make it easier for people to experience AR, we introduced Scene Viewer, a new tool which lets users view 3D objects in AR right from your website.

Augmented Images

To make experiences appear realistic, we need to account for the fact that things in the real world don’t always stay still. That’s why we’re updating Augmented Images — our API that lets people point their camera at 2D images, like posters or packaging, to bring them to life. The updates enable you to track moving images and multiple images simultaneously. This unlocks the ability to create dynamic and interactive experiences like animated playing cards where multiple images move at the same time.

An example of how the Augmented Images API can be used with moving targets by JD.com

Light Estimation

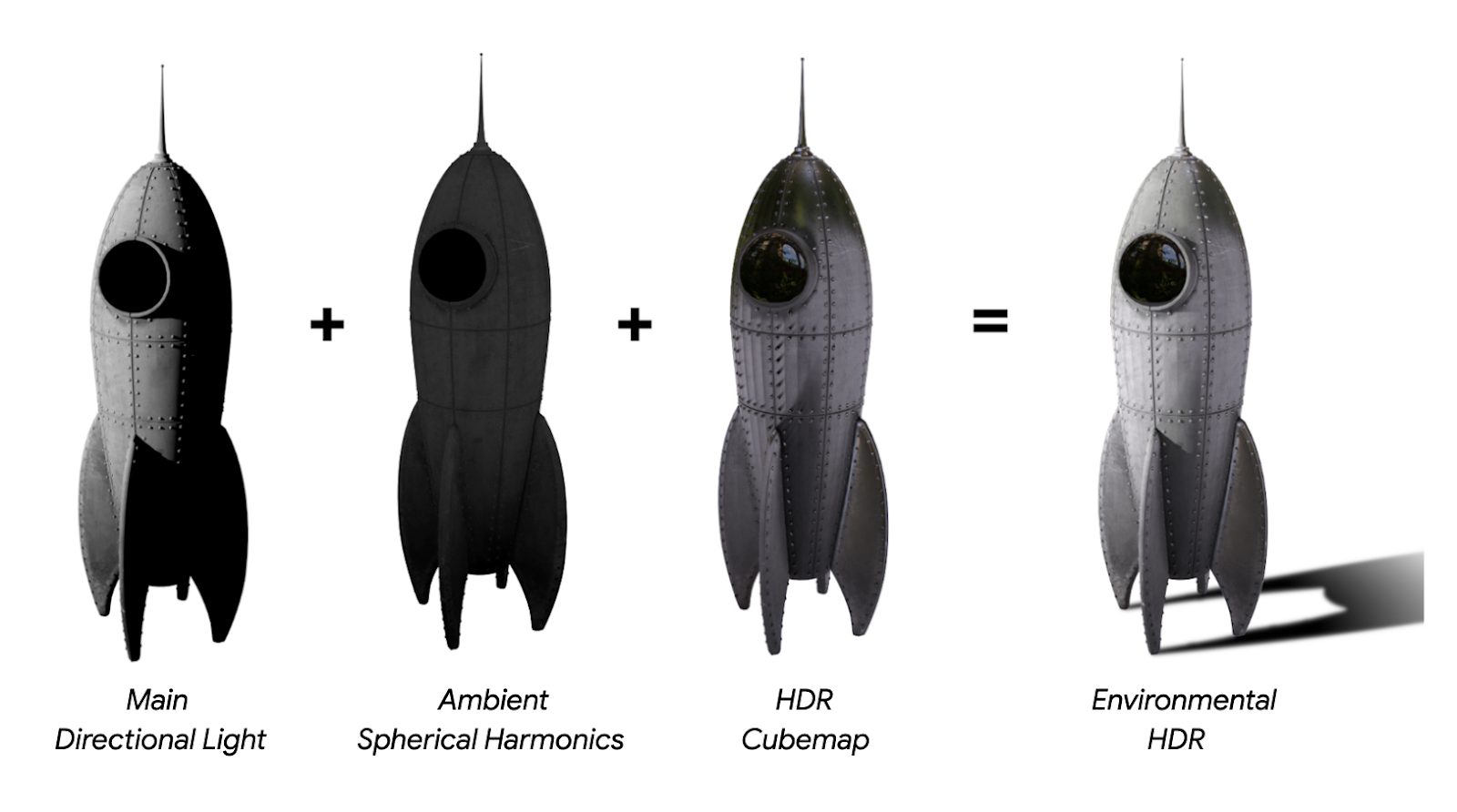

Last year, we introduced the concept of light estimation, which provides a single ambient light intensity to extend real world lighting into a digital scene. In order to provide even more realistic lighting, we’ve added a new mode, Environmental HDR, to our Light Estimation API.

Before and after Environmental HDR is applied to the digital mannequin on the left, featuring 3D printed designs from Julia Koerner

Environmental HDR uses machine learning with a single camera frame to understand high dynamic range illumination in 360°. It takes in available light data, and extends the light into a scene with accurate shadows, highlights, reflections and more. When Environmental HDR is activated, digital objects are lit just like physical objects, so the two blend seamlessly, even when light sources are moving.

Digital mannequin on left and physical mannequin on right

Environmental HDR provides developers with three APIs to replicate real world lighting:

- Main Directional Light: helps with placing shadows in the right direction

- Ambient Spherical Harmonics: helps model ambient illumination from all directions

- HDR Cubemap: provides specular highlights and reflections

Scene Viewer

We want to make it easier for people to jump into AR, so today we’re introducing Scene Viewer, so that AR experience can be launched right from your website without having to download a separate app.

To make your assets accessible via Scene Viewer, first add a glTF 3D asset to your website with the <model-viewer> web component, and then add the “ar” attribute to the <model-viewer> markup. Later this year, experiences in Scene Viewer will begin to surface in your Search results.

<script type="module" src="https://unpkg.com/@google/model-viewer/dist/model-viewer.js"></script>

<script nomodule src="https://unpkg.com/@google/model-viewer/dist/model-viewer-legacy.js"></script>

<model-viewer ar src="examples/assets/YOURMODEL.gltf"

auto-rotate camera-controls alt="TEXT ABOUT YOUR MODEL" background-color="#455A64"></model-viewer>

NASA.gov enables users to view the Curiosity Rover in their space

These are a few ways that improving real world understanding in ARCore can make AR experiences more interactive, realistic, and easier to access. Look for these features to roll out over the next two releases. To learn more and get started, check out the ARCore developer website.