Posted by Hak Matsuda and Dirk Dougherty, Android Developer Relations team

If you develop a performance-intensive 3D game, you’re always looking for ways to give users richer graphics, higher frame rates, and better responsiveness. You also want to conserve the user’s battery and keep the device from getting too warm during play. To help you optimize in all of these areas, consider taking advantage of the hardware scaler that’s available on almost all Android devices in the market today.

How it works and why you should use it

Virtually all modern Android devices use a CPU/GPU chipset that includes a hardware video scaler. Android provides the higher-level integration and makes the scaler available to apps through standard Android APIs, from Java or native (C++) code. To take advantage of the hardware scaler, all you have to do is render to a fixed-size graphics buffer, rather than using the system-provided default buffers, which are sized to the device's full screen resolution.

When you render to a fixed-size buffer, the device hardware does the work of scaling your scene up (or down) to match the device's screen resolution, including making any adjustments to aspect ratio. Typically, you would create a fixed-size buffer that's smaller than the device's full screen resolution, which lets you render more efficiently — especially on today's high-resolution screens.

Using the hardware scaler is more efficient for several reasons. First, hardware scalers are extremely fast and can produce great visual results through multi-tap and other algorithms that reduce artifacts. Second, because your app is rendering to a smaller buffer, the computation load on the GPU is reduced and performance improves. Third, with less computation work to do, the GPU runs cooler and uses less battery. And finally, you can choose what size rendering buffer you want to use, and that buffer can be the same on all devices, regardless of the actual screen resolution.

Optimizing the fill rate

In a mobile GPU, the pixel fill rate is one of the major sources of performance bottlenecks for performance game applications. With newer phones and tablets offering higher and higher screen resolutions, rendering your 2D or 3D graphics on those those devices can significantly reduce your frame rate. The GPU hits its maximum fill rate, and with so many pixels to fill, your frame rate drops.

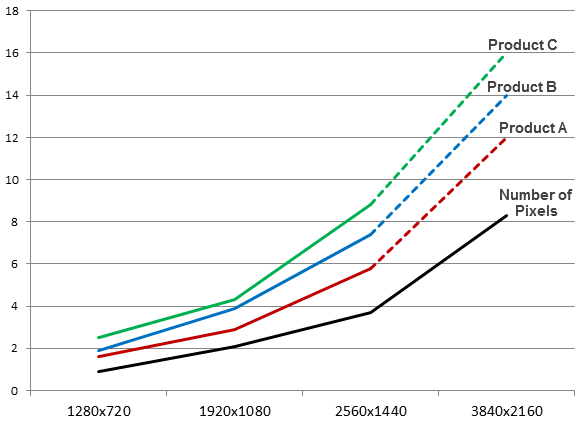

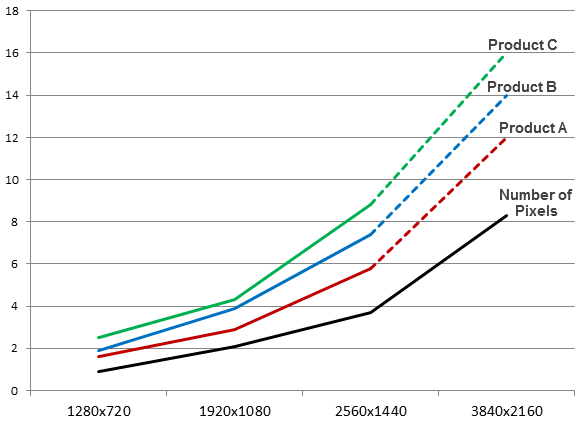

Power consumed in the GPU at different rendering resolutions, across several popular chipsets in use on Android devices. (Data provided by Qualcomm).

To avoid these bottlenecks, you need to reduce the number of pixels that your game is drawing in each frame. There are several techniques for achieving that, such as using depth-prepass optimizations and others, but a really simple and effective way is making use of the hardware scaler.

Instead of rendering to a full-size buffer that could be as large as 2560x1600, your game can instead render to a smaller buffer — for example 1280x720 or 1920x1080 — and let the hardware scaler expand your scene without any additional cost and minimal loss in visual quality.

Reducing power consumption and thermal effects

A performance-intensive game can tend to consume too much battery and generate too much heat. The game’s power consumption and thermal conditions are important to users, and they are important considerations to developers as well.

As shown in the diagram, the power consumed in the device GPU increases significantly as rendering resolution rises. In most cases, any heavy use of power in GPU will end up reducing battery life in the device.

In addition, as CPU/GPU rendering load increases, heat is generated that can make the device uncomfortable to hold. The heat can even trigger CPU/GPU speed adjustments designed to cool the CPU/GPU, and these in turn can throttle the processing power that’s available to your game.

For both minimizing power consumption and thermal effects, using the hardware scaler can be very useful. Because you are rendering to a smaller buffer, the GPU spends less energy rendering and generates less heat.

Accessing the hardware scaler from Android APIs

Android gives you easy access to the hardware scaler through standard APIs, available from your Java code or from your native (C++) code through the Android NDK.

All you need to do is use the APIs to create a fixed-size buffer and render into it. You don’t need to consider the actual size of the device screen, however in cases where you want to preserve the original aspect ratio, you can either match the aspect ratio of the buffer to that of the screen, or you can adjust your rendering into the buffer.

From your Java code, you access the scaler through SurfaceView, introduced in API level 1. Here’s how you would create a fixed-size buffer at 1280x720 resolution:

surfaceView = new GLSurfaceView(this);

surfaceView.getHolder().setFixedSize(1280, 720);

If you want to use the scaler from native code, you can do so through the NativeActivity class, introduced in Android 2.3 (API level 9). Here’s how you would create a fixed-size buffer at 1280x720 resolution using NativeActivity:

int32_t ret = ANativeWindow_setBuffersGeometry(window, 1280, 720, 0);

By specifying a size for the buffer, the hardware scaler is enabled and you benefit in your rendering to the specified window.

Choosing a size for your graphics buffer

If you will use a fixed-size graphics buffer, it's important to choose a size that balances visual quality across targeted devices with performance and efficiency gains.

For most performance 3D games that use the hardware scaler, the recommended size for rendering is 1080p. As illustrated in the diagram, 1080p is a sweet spot that balances a visual quality, frame rate, and power consumption. If you are satisfied with 720p, of course you can use that size for even more efficient operations.

More information

If you’d like to take advantage of the hardware scaler in your app, take a look at the class documentation for SurfaceView or NativeActivity, depending on whether you are rendering through the Android framework or native APIs.

Watch for sample code on using the hardware scaler coming soon!