Successful deep learning models often require significant amounts of computational resources, memory and power to train and run, which presents an obstacle if you want them to perform well on mobile and IoT devices. On-device machine learning allows you to run inference directly on the devices, with the benefits of data privacy and access everywhere, regardless of connectivity. On-device ML systems, such as MobileNets and ProjectionNets, address the resource bottlenecks on mobile devices by optimizing for model efficiency. But what if you wanted to train your own customized, on-device models for your personal mobile application?

Yesterday at Google I/O, we announced ML Kit to make machine learning accessible for all mobile developers. One of the core ML Kit capabilities that will be available soon is an automatic model compression service powered by “Learn2Compress” technology developed by our research team. Learn2Compress enables custom on-device deep learning models in TensorFlow Lite that run efficiently on mobile devices, without developers having to worry about optimizing for memory and speed. We are pleased to make Learn2Compress for image classification available soon through ML Kit. Learn2Compress will be initially available to a small number of developers, and will be offered more broadly in the coming months. You can sign up here if you are interested in using this feature for building your own models.

How it Works

Learn2Compress generalizes the learning framework introduced in previous works like ProjectionNet and incorporates several state-of-the-art techniques for compressing neural network models. It takes as input a large pre-trained TensorFlow model provided by the user, performs training and optimization and automatically generates ready-to-use on-device models that are smaller in size, more memory-efficient, more power-efficient and faster at inference with minimal loss in accuracy.

Learn2Compress for automatically generating on-device ML models.

- Pruning reduces model size by removing the weights or operations that are least useful for predictions (e.g., low-scoring weights). This can be especially effective for on-device models involving sparse inputs or outputs, which can be reduced by up to 2x in size while retaining 97% of the original prediction quality.

- Quantization techniques are particularly effective when applied during training and can improve inference speed by reducing the number of bits used for model weights and activations. For example, using an 8-bit fixed-point representation instead of 32-bit floats can speed up model inference, lower power consumption and further reduce size by 4x.

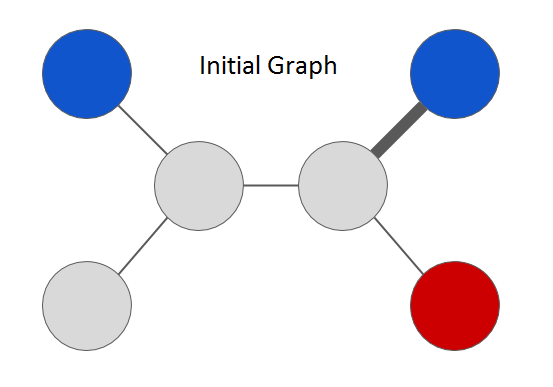

- Joint training and distillation approaches follow a teacher-student learning strategy: we use a larger teacher network (in this case, a user-provided TensorFlow model) to train a compact student network (the on-device model) with minimal loss in accuracy.

The teacher network can be fixed (as in distillation) or jointly optimized with the student, and it can even train multiple student models of different sizes simultaneously. So instead of a single model, Learn2Compress generates multiple on-device models in a single shot, at different sizes and inference speeds, and lets the developer pick the one best suited to their application's needs.
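To make the teacher-student idea concrete, here is a minimal sketch of a distillation-style loss for a fixed teacher, written in plain Python. It is not the Learn2Compress implementation: the function names, the `temperature` and `alpha` parameters, and the equal weighting of the two terms are assumptions chosen for illustration, and the usual temperature-squared scaling of the soft term is omitted for simplicity.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives softer outputs."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(target_probs, predicted_probs, eps=1e-12):
    """Cross-entropy between a target distribution and a predicted distribution."""
    return -sum(t * math.log(p + eps) for t, p in zip(target_probs, predicted_probs))

def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Blend a hard-label loss with a soft loss against the teacher's outputs.

    `temperature` and `alpha` are illustrative hyperparameters (assumptions),
    not values taken from Learn2Compress.
    """
    one_hot = [1.0 if i == true_label else 0.0 for i in range(len(student_logits))]
    # Hard term: how well the student predicts the ground-truth label.
    hard = cross_entropy(one_hot, softmax(student_logits))
    # Soft term: how closely the student matches the teacher's softened outputs.
    soft = cross_entropy(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))
    return alpha * hard + (1.0 - alpha) * soft

# Usage: a student that agrees with the teacher incurs a lower loss
# than one that disagrees.
teacher = [4.0, 1.0, 0.5]
print(distillation_loss([4.0, 1.0, 0.5], teacher, true_label=0))
print(distillation_loss([0.5, 1.0, 4.0], teacher, true_label=0))
```

In a joint-training setup the teacher's parameters would also receive gradients rather than being held fixed; this sketch covers only the fixed-teacher case.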

Joint training and distillation approach to learn compact student models.
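The pruning and quantization techniques listed above can also be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not how Learn2Compress does it: the sketch uses magnitude-based pruning and post-training min/max quantization of a flat weight list, whereas the text notes that quantization works best when applied during training. The helper names `prune_by_magnitude`, `quantize_uint8` and `dequantize_uint8` are hypothetical.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered from least to most important (by absolute value).
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_to_prune]:
        pruned[i] = 0.0
    return pruned

def quantize_uint8(weights):
    """Map float weights onto 256 evenly spaced levels between min and max."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all weights are equal
    codes = [min(255, max(0, round((w - lo) / scale))) for w in weights]
    return codes, scale, lo

def dequantize_uint8(codes, scale, lo):
    """Recover approximate float weights from 8-bit codes."""
    return [lo + c * scale for c in codes]

# Usage: prune half of the weights, then store the rest as 8-bit codes.
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02]
pruned = prune_by_magnitude(weights, sparsity=0.5)
codes, scale, lo = quantize_uint8(pruned)
restored = dequantize_uint8(codes, scale, lo)
print(pruned)
print(codes)
print(max(abs(a - b) for a, b in zip(pruned, restored)))
```

Storing each weight in 8 bits instead of a 32-bit float is where the 4x size reduction comes from (32 / 8 = 4); the price is a rounding error of at most half a quantization step per weight.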

How well does it work?

To demonstrate the effectiveness of Learn2Compress, we used it to build compact on-device models of several state-of-the-art deep networks used in image and natural language tasks, including MobileNets, NASNet, Inception and ProjectionNet. For a given task and dataset, we can generate multiple on-device models at different inference speeds and model sizes.

Accuracy at various sizes for Learn2Compress models and full-sized baseline networks on CIFAR-10 (left) and ImageNet (right) image classification tasks. Student networks used to produce the compressed variants for CIFAR-10 and ImageNet are modeled using NASNet and MobileNet-inspired architectures, respectively.

Computation cost and average prediction latency (on a Pixel phone) for baseline and Learn2Compress models on the CIFAR-10 image classification task. Learn2Compress-optimized models use a NASNet-style network architecture.

We will continue to improve Learn2Compress with future advances in ML and deep learning, and extend it to more use cases beyond image classification. We are excited and looking forward to making this available soon through ML Kit’s compression service on the Cloud. We hope this will make it easy for developers to automatically build and optimize their own on-device ML models so that they can focus on building great apps and cool user experiences involving computer vision, natural language and other machine learning applications.

Acknowledgments

I would like to acknowledge our core contributors Gaurav Menghani, Prabhu Kaliamoorthi and Yicheng Fan along with Wei Chai, Kang Lee, Sheng Xu and Pannag Sanketi. Special thanks to Dave Burke, Brahim Elbouchikhi, Hrishikesh Aradhye, Hugues Vincent and Arun Venkatesan from the Android team; Sachin Kotwani, Wesley Tarle and Pavel Jbanov from the Firebase team; Andrei Broder, Andrew Tomkins, Robin Dua, Patrick McGregor, Gaurav Nemade, the Google Expander team and the TensorFlow team.