Posted by Jon Markoff, Staff Developer Advocate, Android Security

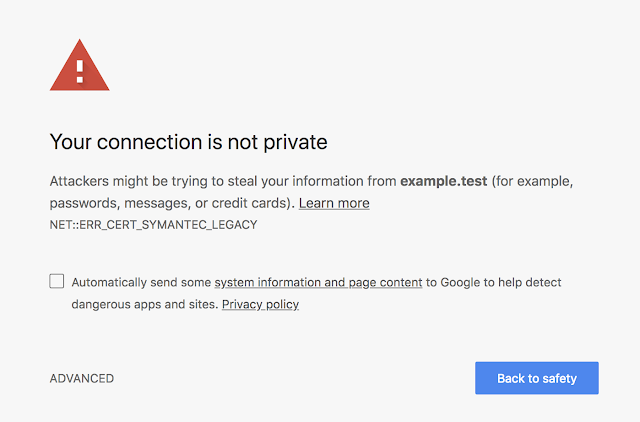

Have you ever tried to encrypt data in your app? As a developer, you want to keep data safe and in the hands of the party intended to use it. But if you’re like most Android developers, you don’t have a dedicated security team to help encrypt your app’s data properly. Searching the web for how to encrypt data often turns up answers that are several years out of date and examples that are incorrect.

The Jetpack Security (JetSec) crypto library provides abstractions for encrypting Files and SharedPreferences objects. The library promotes the use of the AndroidKeyStore while using safe and well-known cryptographic primitives. Using EncryptedFile and EncryptedSharedPreferences allows you to locally protect files that may contain sensitive data, API keys, OAuth tokens, and other types of secrets.

Why would you want to encrypt data in your app? Doesn’t Android, since 5.0, encrypt the contents of the user’s data partition by default? It certainly does, but there are some use cases where you may want an extra level of protection. If your app uses shared storage, you should encrypt the data. Within the app home directory, you should encrypt data if your app handles sensitive information, including but not limited to personally identifiable information (PII), health records, financial details, or enterprise data. When possible, we recommend that you tie this information to biometrics for an extra level of protection.

Jetpack Security is based on Tink, an open-source, cross-platform security project from Google. Tink might be appropriate if you need general encryption, hybrid encryption, or something similar. Jetpack Security data structures are fully compatible with Tink.

Key Generation

Before we jump into encrypting your data, it’s important to understand how your encryption keys will be kept safe. Jetpack Security uses a master key, which encrypts all subkeys that are used for each cryptographic operation. JetSec provides a recommended default master key in the MasterKeys class. This class uses a basic AES256-GCM key which is generated and stored in the AndroidKeyStore. The AndroidKeyStore is a container which stores cryptographic keys in the TEE or StrongBox, making them hard to extract. Subkeys are stored in a configurable SharedPreferences object.
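To make the master key and subkey relationship concrete, here is a minimal sketch of the idea using only the standard javax.crypto classes on a plain JVM. It is not Jetpack Security's implementation: the library delegates keyset handling to Tink and keeps the master key inside the AndroidKeyStore, whereas this sketch holds both keys in memory. The names WrappedKey, newAes256Key, wrapSubkey, and unwrapSubkey are hypothetical and exist only for illustration.

```kotlin
import java.security.SecureRandom
import javax.crypto.Cipher
import javax.crypto.KeyGenerator
import javax.crypto.SecretKey
import javax.crypto.spec.GCMParameterSpec
import javax.crypto.spec.SecretKeySpec

// Hypothetical container: an encrypted subkey plus the nonce used to encrypt it.
class WrappedKey(val iv: ByteArray, val ciphertext: ByteArray)

// Generates an in-memory AES-256 key. Assumption of this sketch: on Android the
// master key would live in the AndroidKeyStore instead of application memory.
fun newAes256Key(): SecretKey =
    KeyGenerator.getInstance("AES").apply { init(256) }.generateKey()

// Encrypts the raw bytes of a subkey under the master key with AES-GCM.
fun wrapSubkey(masterKey: SecretKey, subkey: SecretKey): WrappedKey {
    val iv = ByteArray(12).also { SecureRandom().nextBytes(it) } // fresh 96-bit nonce
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.ENCRYPT_MODE, masterKey, GCMParameterSpec(128, iv))
    return WrappedKey(iv, cipher.doFinal(subkey.encoded))
}

// Recovers the subkey; throws if the wrapped bytes were modified or the
// wrong master key is supplied.
fun unwrapSubkey(masterKey: SecretKey, wrapped: WrappedKey): SecretKey {
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.DECRYPT_MODE, masterKey, GCMParameterSpec(128, wrapped.iv))
    return SecretKeySpec(cipher.doFinal(wrapped.ciphertext), "AES")
}
```

In this sketch a caller would create the master key once, wrap each subkey before persisting it, and unwrap it only when an operation needs it. The wrapped form is the part that could safely sit in something like a SharedPreferences file.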

Primarily, we use the AES256_GCM_SPEC specification in Jetpack Security, which is recommended for general use cases. AES256-GCM is symmetric and generally fast on modern devices.

    val keyAlias = MasterKeys.getOrCreate(MasterKeys.AES256_GCM_SPEC)

For apps that require more configuration, or handle very sensitive data, it’s recommended to build your own KeyGenParameterSpec, choosing the options that make sense for your use case. Time-bound keys with BiometricPrompt can provide an extra level of protection against rooted or compromised devices.

Important options:

- setUserAuthenticationRequired() and setUserAuthenticationValidityDurationSeconds() can be used to create a time-bound key. Time-bound keys require authorization using BiometricPrompt for both encryption and decryption of symmetric keys.
- setUnlockedDeviceRequired() sets a flag that helps ensure the key cannot be accessed while the device is locked. This flag is available on Android Pie and higher.
- setIsStrongBoxBacked() runs crypto operations on a stronger, separate chip. This has a slight performance impact, but is more secure. It’s available on some devices that run Android Pie or higher.

Note: If your app needs to encrypt data in the background, you should not use time-bound keys or require that the device is unlocked, as you will not be able to accomplish this without a user present.

    // Custom Advanced Master Key
    val advancedSpec = KeyGenParameterSpec.Builder(
        "master_key",
        KeyProperties.PURPOSE_ENCRYPT or KeyProperties.PURPOSE_DECRYPT
    ).apply {
        setBlockModes(KeyProperties.BLOCK_MODE_GCM)
        setEncryptionPaddings(KeyProperties.ENCRYPTION_PADDING_NONE)
        setKeySize(256)
        setUserAuthenticationRequired(true)
        setUserAuthenticationValidityDurationSeconds(15) // must be larger than 0
        if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.P) {
            setUnlockedDeviceRequired(true)
            // Key generation fails on devices without StrongBox hardware.
            setIsStrongBoxBacked(true)
        }
    }.build()

    val advancedKeyAlias = MasterKeys.getOrCreate(advancedSpec)

Unlocking time-bound keys

You must use BiometricPrompt to authenticate the user if your key was created with the following options:

- setUserAuthenticationRequired(true)
- setUserAuthenticationValidityDurationSeconds() with a value greater than 0

After the user authenticates, the keys are unlocked for the number of seconds set as the validity duration. The AndroidKeyStore does not have an API to query key settings, so your app must keep track of these settings. You should build your BiometricPrompt instance in the onCreate() method of the activity where you present the dialog to the user.
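One way an app might keep track of the validity window itself is sketched below. KeyUnlockTracker and its members are hypothetical names, not part of Jetpack Security or the Android SDK, and the result is only the app's own estimate: the keystore remains the authority, so key operations should still be prepared to fail if the window has actually expired. The clock is injected so the logic can be exercised without waiting.

```kotlin
// Hypothetical helper: remembers when the user last authenticated and estimates
// whether a key with the given validity window is still unlocked.
class KeyUnlockTracker(
    private val validitySeconds: Int,
    private val nowMillis: () -> Long = { System.currentTimeMillis() }
) {
    init {
        require(validitySeconds > 0) { "validitySeconds must be larger than 0" }
    }

    // Time of the last successful authentication, or null if there was none.
    private var lastAuthMillis: Long? = null

    // Intended to be called from onAuthenticationSucceeded().
    fun markAuthenticated() {
        lastAuthMillis = nowMillis()
    }

    // True when the app should show BiometricPrompt before using the key.
    fun needsAuthentication(): Boolean {
        val last = lastAuthMillis ?: return true
        return nowMillis() - last >= validitySeconds * 1000L
    }
}
```

With the 15-second key from the earlier example, the app would create `KeyUnlockTracker(15)`, check `needsAuthentication()` before each encrypt or decrypt call, and show the prompt only when it returns true. On a device, a monotonic clock such as SystemClock.elapsedRealtime() could be passed in place of the default wall clock.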

BiometricPrompt code to unlock time-bound keys

    // Activity.onCreate (the activity must be a FragmentActivity)
    val promptInfo = BiometricPrompt.PromptInfo.Builder()
        .setTitle("Unlock?")
        .setDescription("Would you like to unlock this key?")
        .setDeviceCredentialAllowed(true)
        .build()

    val biometricPrompt = BiometricPrompt(
        this, // Activity
        ContextCompat.getMainExecutor(this),
        authenticationCallback
    )

    // Property of the same activity
    private val authenticationCallback = object : BiometricPrompt.AuthenticationCallback() {
        override fun onAuthenticationSucceeded(
            result: BiometricPrompt.AuthenticationResult
        ) {
            super.onAuthenticationSucceeded(result)
            // Unlocked -- do work here.
        }

        override fun onAuthenticationError(
            errorCode: Int, errString: CharSequence
        ) {
            super.onAuthenticationError(errorCode, errString)
            // Handle error.
        }
    }

To use:

    biometricPrompt.authenticate(promptInfo)

Encrypt Files

Jetpack Security includes an EncryptedFile class, which removes the challenges of encrypting file data. Similar to File, EncryptedFile provides a FileInputStream object for reading and a FileOutputStream object for writing. Files are encrypted using Streaming AEAD, which follows the OAE2 definition. The data is divided into chunks, and each chunk is encrypted using AES256-GCM in such a way that the chunks cannot be reordered.

    val secretFile = File(filesDir, "super_secret")

    val encryptedFile = EncryptedFile.Builder(
        secretFile,
        applicationContext,
        advancedKeyAlias,
        EncryptedFile.FileEncryptionScheme.AES256_GCM_HKDF_4KB
    )
        .setKeysetAlias("file_key") // optional
        .setKeysetPrefName("secret_shared_prefs") // optional
        .build()

    // openFileOutput() throws an IOException if the file already exists.
    encryptedFile.openFileOutput().use { outputStream ->
        // Write data to your encrypted file
    }

    encryptedFile.openFileInput().use { inputStream ->
        // Read data from your encrypted file
    }
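The two placeholder comments above can be filled in with ordinary stream code, because EncryptedFile hands back standard Java streams. The sketch below uses two hypothetical helpers, writeSecret and readSecret, that accept any OutputStream or InputStream, so they can be tried on a plain JVM as well as with the streams from EncryptedFile. Storing the secret as UTF-8 text is an assumption of the example, not a requirement of the library.

```kotlin
import java.io.InputStream
import java.io.OutputStream

// Hypothetical helper: writes a secret as UTF-8 bytes, then closes the stream.
fun writeSecret(output: OutputStream, secret: String) {
    output.use { it.write(secret.toByteArray(Charsets.UTF_8)) }
}

// Hypothetical helper: reads the whole stream as UTF-8 text, then closes it.
fun readSecret(input: InputStream): String =
    input.use { it.readBytes().toString(Charsets.UTF_8) }
```

In an app, these would be called as `writeSecret(encryptedFile.openFileOutput(), token)` and `readSecret(encryptedFile.openFileInput())`. Reading everything at once suits small secrets; larger files are better processed in pieces as they stream through.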

Encrypt SharedPreferences

If your application needs to save key-value pairs, such as API keys, JetSec provides the EncryptedSharedPreferences class, which uses the same SharedPreferences interface that you’re used to.

Both keys and values are encrypted. Keys are encrypted using AES256-SIV-CMAC, which provides a deterministic ciphertext; values are encrypted with AES256-GCM and are bound to the encrypted key. This scheme allows the key data to be encrypted safely, while still allowing lookups.

    EncryptedSharedPreferences.create(
        "my_secret_prefs",
        advancedKeyAlias,
        applicationContext,
        EncryptedSharedPreferences.PrefKeyEncryptionScheme.AES256_SIV,
        EncryptedSharedPreferences.PrefValueEncryptionScheme.AES256_GCM
    ).edit {
        // Update secret values
    }
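The idea of a value being bound to its key can be illustrated with the associated-data feature of AES-GCM. The sketch below uses only javax.crypto on a plain JVM and is not the library's implementation: encryptValue and decryptValue are hypothetical names, the nonce-then-ciphertext layout is an assumption of the example, and the entry name is passed in plaintext here, whereas the scheme described above binds the value to the encrypted key.

```kotlin
import java.security.SecureRandom
import javax.crypto.AEADBadTagException
import javax.crypto.Cipher
import javax.crypto.SecretKey
import javax.crypto.spec.GCMParameterSpec

// Encrypts a value and binds it to its entry name through GCM associated data.
// Output layout (assumed for this sketch): 12-byte nonce, then ciphertext and tag.
fun encryptValue(key: SecretKey, entryName: String, value: String): ByteArray {
    val iv = ByteArray(12).also { SecureRandom().nextBytes(it) }
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.ENCRYPT_MODE, key, GCMParameterSpec(128, iv))
    cipher.updateAAD(entryName.toByteArray(Charsets.UTF_8))
    return iv + cipher.doFinal(value.toByteArray(Charsets.UTF_8))
}

// Returns the value, or null if authentication fails, for example because the
// blob was stored under a different entry name or its bytes were modified.
fun decryptValue(key: SecretKey, entryName: String, blob: ByteArray): String? {
    val cipher = Cipher.getInstance("AES/GCM/NoPadding")
    cipher.init(Cipher.DECRYPT_MODE, key, GCMParameterSpec(128, blob, 0, 12))
    cipher.updateAAD(entryName.toByteArray(Charsets.UTF_8))
    return try {
        cipher.doFinal(blob, 12, blob.size - 12).toString(Charsets.UTF_8)
    } catch (e: AEADBadTagException) {
        null
    }
}
```

Because the entry name is authenticated along with the ciphertext, an attacker who copies an encrypted value from one entry to another causes decryption to fail. Each call also draws a fresh nonce, so encrypting the same value twice produces different bytes. That is fine for values, but it would make lookups by name impossible, which is why the keys use a deterministic scheme instead.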

More Resources

FileLocker is a sample app on the Android Security GitHub samples page. It’s a great example of how to encrypt files using Jetpack Security.

Happy Encrypting!