Learning good visual and vision-language representations is critical to solving computer vision problems — image retrieval, image classification, video understanding — and can enable the development of tools and products that change people’s daily lives. For example, a good vision-language matching model can help users find the most relevant images given a text description or an image input and help tools such as Google Lens find more fine-grained information about an image.

To learn such representations, current state-of-the-art (SotA) visual and vision-language models rely heavily on curated training datasets that require expert knowledge and extensive labels. For vision applications, representations are mostly learned on large-scale datasets with explicit class labels, such as ImageNet, OpenImages, and JFT-300M. For vision-language applications, popular pre-training datasets, such as Conceptual Captions and Visual Genome Dense Captions, all require non-trivial data collection and cleaning steps, limiting the size of datasets and thus hindering the scale of the trained models. In contrast, natural language processing (NLP) models have achieved SotA performance on GLUE and SuperGLUE benchmarks by utilizing large-scale pre-training on raw text without human labels.

In "Scaling Up Visual and Vision-Language Representation Learning With Noisy Text Supervision", to appear at ICML 2021, we propose bridging this gap with publicly available image alt-text data (written copy that appears in place of an image on a webpage if the image fails to load on a user's screen) in order to train larger, state-of-the-art vision and vision-language models. To that end, we leverage a noisy dataset of over one billion image and alt-text pairs, obtained without the expensive filtering or post-processing steps used for the Conceptual Captions dataset. We show that the scale of our corpus can make up for the noise in the data and leads to SotA representations that achieve strong performance when transferred to classification tasks such as ImageNet and VTAB. The aligned visual and language representations also set new SotA results on the Flickr30K and MS-COCO benchmarks, even when compared with more sophisticated cross-attention models, and enable zero-shot image classification and cross-modality search with complex text and text + image queries.

Creating the Dataset

Alt-texts usually provide a description of what the image is about, but the dataset is “noisy” because some text may be partly or wholly unrelated to its paired image.

Figure: Example image-text pairs randomly sampled from the training dataset of ALIGN. One clearly noisy text label is marked in italics.

In this work, we follow the methodology used to construct the Conceptual Captions dataset to obtain a version of raw English alt-text data (image and alt-text pairs). While the Conceptual Captions dataset was cleaned by heavy filtering and post-processing, this work scales up visual and vision-language representation learning by relaxing most of the cleaning steps in the original work. Instead, we apply only minimal frequency-based filtering. The result is a much larger but noisier dataset of 1.8B image-text pairs.

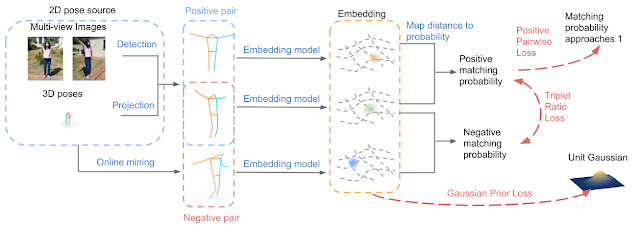

ALIGN: A Large-scale ImaGe and Noisy-Text Embedding

To make it easy to build larger and more powerful models, we employ a simple dual-encoder architecture that learns to align the visual and language representations of image-text pairs. The image and text encoders are learned via a contrastive loss (formulated as a normalized softmax) that pushes the embeddings of matched image-text pairs together while pushing those of non-matched image-text pairs (within the same batch) apart. The large-scale dataset makes it possible for us to scale up the model size to be as large as EfficientNet-L2 (image encoder) and BERT-large (text encoder) trained from scratch. The learned representation can be used for downstream visual and vision-language tasks.

Figure: ImageNet figure credit: Krizhevsky et al. 2012. VTAB figure credit: Zhai et al. 2019.
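
The contrastive objective described above can be illustrated with a minimal NumPy sketch. It is not the ALIGN training code: the function names are hypothetical, the fixed `temperature` value is a placeholder chosen for illustration, and summing the image-to-text and text-to-image terms is an assumption about how the two directions are combined.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    # Scale each row to unit length so dot products become cosine similarities.
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), eps)

def log_softmax(logits, axis):
    # Numerically stable log-softmax along the given axis.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(image_emb, text_emb, temperature=0.05):
    """In-batch normalized-softmax loss for a dual encoder.

    Row i of image_emb and row i of text_emb form a matched pair; every
    other row in the batch serves as a negative. The temperature value
    is a hypothetical placeholder.
    """
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature           # shape: (batch, batch)
    diag = np.arange(logits.shape[0])
    # Image-to-text: each image should pick its own text among all texts.
    i2t = -log_softmax(logits, axis=1)[diag, diag].mean()
    # Text-to-image: each text should pick its own image among all images.
    t2i = -log_softmax(logits, axis=0)[diag, diag].mean()
    return i2t + t2i
```

In this sketch the loss shrinks as matched pairs become more similar to each other than to the other items in the batch, which is the "push together, push apart" behavior the paragraph describes.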

The resulting representation can be used for vision-only or vision-language task transfer. Without any fine-tuning, ALIGN powers cross-modal search: image-to-text search, text-to-image search, and even search with joint image+text queries. Examples appear in the image search sections below.

Evaluating Retrieval and Representation

The learned ALIGN model with BERT-Large and EfficientNet-L2 as text and image encoder backbones achieves SotA performance on multiple image-text retrieval tasks (Flickr30K and MS-COCO) in both zero-shot and fine-tuned settings, as shown below.

| Setting | Model | Flickr30K (1K test set) R@1, image → text | Flickr30K (1K test set) R@1, text → image | MS-COCO (5K test set) R@1, image → text | MS-COCO (5K test set) R@1, text → image |
| --- | --- | --- | --- | --- | --- |
| Zero-shot | ImageBERT | 70.7 | 54.3 | 44.0 | 32.3 |
| Zero-shot | UNITER | 83.6 | 68.7 | - | - |
| Zero-shot | CLIP | 88.0 | 68.7 | 58.4 | 37.8 |
| Zero-shot | ALIGN | 88.6 | 75.7 | 58.6 | 45.6 |
| Fine-tuned | GPO | 88.7 | 76.1 | 68.1 | 52.7 |
| Fine-tuned | UNITER | 87.3 | 75.6 | 65.7 | 52.9 |
| Fine-tuned | ERNIE-ViL | 88.1 | 76.7 | - | - |
| Fine-tuned | VILLA | 87.9 | 76.3 | - | - |
| Fine-tuned | Oscar | - | - | 73.5 | 57.5 |
| Fine-tuned | ALIGN | 95.3 | 84.9 | 77.0 | 59.9 |

Table: Image-text retrieval results (recall@1) on Flickr30K and MS-COCO datasets (both zero-shot and fine-tuned). ALIGN significantly outperforms existing methods, including the cross-modality attention models that are too expensive for large-scale retrieval applications.
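
For readers unfamiliar with the metric, recall@1 is the fraction of queries whose single top-ranked result is a correct match. The sketch below shows one way to compute it from embeddings. The function name and the boolean `relevant` matrix are assumptions made for illustration, and this is not the evaluation code behind the numbers above.

```python
import numpy as np

def recall_at_1(query_emb, gallery_emb, relevant):
    """Fraction of queries whose top-1 retrieved item is a correct match.

    query_emb:   (num_queries, dim) embeddings of the queries.
    gallery_emb: (num_items, dim) embeddings of the candidates.
    relevant:    (num_queries, num_items) boolean matrix (hypothetical
                 input format); relevant[i, j] is True when item j is a
                 correct match for query i. A query may have several
                 correct matches, e.g. multiple captions per image.
    """
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    g = gallery_emb / np.linalg.norm(gallery_emb, axis=1, keepdims=True)
    # Cosine similarity between every query and every candidate.
    top1 = (q @ g.T).argmax(axis=1)
    hits = relevant[np.arange(len(top1)), top1]
    return float(hits.mean())
```

For image → text retrieval the queries are image embeddings and the gallery holds caption embeddings; for text → image the roles are swapped.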

ALIGN is also a strong image representation model. As shown below, with frozen features ALIGN slightly outperforms CLIP and achieves a SotA result of 85.5% top-1 accuracy on ImageNet. With fine-tuning, ALIGN achieves higher accuracy than most generalist models, such as BiT and ViT, and is outperformed only by Meta Pseudo Labels, which requires deeper interaction between ImageNet training and large-scale unlabeled data.

| Model (backbone) | Acc@1 w/ frozen features | Acc@1 | Acc@5 |
| --- | --- | --- | --- |
| WSL (ResNeXt-101 32x48d) | 83.6 | 85.4 | 97.6 |
| CLIP (ViT-L/14) | 85.4 | - | - |
| BiT (ResNet152 x 4) | - | 87.54 | 98.46 |
| NoisyStudent (EfficientNet-L2) | - | 88.4 | 98.7 |
| ViT (ViT-H/14) | - | 88.55 | - |
| Meta-Pseudo-Labels (EfficientNet-L2) | - | 90.2 | 98.8 |
| ALIGN (EfficientNet-L2) | 85.5 | 88.64 | 98.67 |

Table: ImageNet classification results comparison with supervised training (fine-tuning).

Zero-Shot Image Classification

Traditionally, image classification treats each class as an independent ID, and the classification layers have to be trained with at least a few shots of labeled data per class. However, class names are also natural language phrases, so we can naturally extend the image-text retrieval capability of ALIGN to image classification without any training data.
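
A minimal sketch of this idea follows, assuming the image and class-name prompt embeddings have already been produced by the two encoders. The function names are hypothetical, and averaging several normalized prompt embeddings per class is one common way to ensemble prompts, shown here as an assumption rather than as the exact recipe used for ALIGN.

```python
import numpy as np

def _normalize(x):
    # Scale vectors along the last axis to unit length.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def build_class_embeddings(prompt_emb):
    """Collapse several prompt embeddings per class into one vector.

    prompt_emb: (num_classes, num_prompts, dim) text embeddings, one per
    prompt template (e.g. "a photo of a {class name}") and class.
    Averaging normalized prompts is an assumed ensembling scheme.
    """
    return _normalize(_normalize(prompt_emb).mean(axis=1))

def zero_shot_classify(image_emb, class_emb):
    """Assign each image to the class whose text embedding is closest.

    image_emb: (num_images, dim); class_emb: (num_classes, dim), unit norm.
    Returns an array of predicted class indices.
    """
    scores = _normalize(image_emb) @ class_emb.T   # cosine similarities
    return scores.argmax(axis=1)
```

No classification layer is trained here: adding a new class only requires embedding its name with the text encoder.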

On the ImageNet validation dataset, ALIGN achieves 76.4% top-1 zero-shot accuracy and shows great robustness in different variants of ImageNet with distribution shifts, similar to the concurrent work CLIP. We also use the same text prompt engineering and ensembling as in CLIP.

| Model | ImageNet | ImageNet-R | ImageNet-A | ImageNet-V2 |
| --- | --- | --- | --- | --- |
| CLIP | 76.2 | 88.9 | 77.2 | 70.1 |
| ALIGN | 76.4 | 92.2 | 75.8 | 70.1 |

Table: Top-1 accuracy of zero-shot classification on ImageNet and its variants.

Application in Image Search

To illustrate the quantitative results above, we build a simple image retrieval system with the embeddings trained by ALIGN and show the top-1 text-to-image retrieval results for a handful of text queries from a 160M image pool. ALIGN can retrieve precise images given detailed descriptions of a scene, or fine-grained or instance-level concepts like landmarks and artworks. These examples demonstrate that the ALIGN model can align images and texts with similar semantics, and that ALIGN can generalize to novel complex concepts.

Figure: Image retrieval with fine-grained text queries using ALIGN's embeddings.

Multimodal (Image+Text) Query for Image Search

A surprising property of word vectors is that word analogies can often be solved with vector arithmetic. A common example is "king – man + woman = queen". Such linear relationships between image and text embeddings also emerge in ALIGN.

Specifically, given a query image and a text string, we add their ALIGN embeddings together and use the sum to retrieve relevant images by cosine similarity, as shown below. These examples not only demonstrate the compositionality of ALIGN embeddings across the vision and language domains, but also show the feasibility of searching with a multimodal query. For instance, one could now look for the "Australia" or "Madagascar" equivalent of pandas, or turn a pair of black shoes into identical-looking beige shoes. It is also possible to remove objects or attributes from a scene by performing subtraction in the embedding space, as shown below.

Figure: Image retrieval with image + text queries. By adding or subtracting the text query embedding, ALIGN retrieves relevant images.
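
The embedding arithmetic described in this section can be sketched as follows. The function name, the `sign` parameter, and the choice to normalize each embedding before combining them are assumptions made for illustration; the text above only states that the embeddings are added (or subtracted) and that retrieval uses cosine similarity.

```python
import numpy as np

def _normalize(x):
    # Scale vectors along the last axis to unit length.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def multimodal_search(image_query_emb, text_query_emb, index_emb,
                      sign=1.0, top_k=5):
    """Retrieve images with a combined image + text (or image - text) query.

    image_query_emb: (dim,) embedding of the query image.
    text_query_emb:  (dim,) embedding of the query text.
    index_emb:       (num_images, dim) embeddings of the searchable images.
    sign:            +1.0 adds the text concept, -1.0 removes it
                     (hypothetical parameter).
    Returns the indices of the top_k images by cosine similarity.
    """
    # Normalizing before combining is an assumption, so that neither
    # modality dominates the query purely through its vector length.
    query = _normalize(_normalize(image_query_emb)
                       + sign * _normalize(text_query_emb))
    scores = _normalize(index_emb) @ query
    return np.argsort(-scores)[:top_k]
```

With `sign=1.0` the query moves toward the text concept, as in the beige shoes example, and with `sign=-1.0` it moves away from it, which corresponds to removing an object or attribute from the scene.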

Social Impact and Future Work

While this work shows promising results from a methodology perspective with a simple data collection method, additional analysis of the data and the resulting model is necessary before the model can be used responsibly in practice. For instance, consideration should be given to the potential for harmful text in alt-texts to reinforce such harms. With regard to fairness, data balancing efforts may be required to prevent web data from reinforcing stereotypes. Additional testing and training around sensitive religious or cultural items should be undertaken to understand and mitigate the impact of possibly mislabeled data.

Further analysis should also be undertaken to ensure that the demographic distribution of humans and related cultural items, such as clothing, food, and art, does not cause skewed model performance. Analysis and balancing would be required if such models are to be used in production.

Conclusion

We have presented a simple method of leveraging large-scale noisy image-text data to scale up visual and vision-language representation learning. The resulting model, ALIGN, is capable of cross-modal retrieval and significantly outperforms previous SotA models. In visual-only downstream tasks, ALIGN is also comparable to or outperforms SotA models trained with large-scale labeled data.

Acknowledgement

We would like to thank our co-authors in Google Research: Ye Xia, Yi-Ting Chen, Zarana Parekh, Hieu Pham, Quoc V. Le, Yunhsuan Sung, Zhen Li, Tom Duerig. This work was also done with invaluable help from other colleagues from Google. We would like to thank Jan Dlabal and Zhe Li for continuous support in training infrastructure, Simon Kornblith for building the zero-shot & robustness model evaluation on ImageNet variants, Xiaohua Zhai for help on conducting VTAB evaluation, Mingxing Tan and Max Moroz for suggestions on EfficientNet training, Aleksei Timofeev for the early idea of multimodal query retrieval, Aaron Michelony and Kaushal Patel for their early work on data generation, and Sergey Ioffe, Jason Baldridge and Krishna Srinivasan for the insightful feedback and discussion.