Posted by Lillian Chen – Global Brand and Content Marketing Manager, Google Accelerator Programs

Located in Boca Raton, Carbon Limit aims to decarbonize the industry and take part in saving, protecting, and healing the environment. Cofounder Tim Sperry explains that for him and his cofounders Oro Padron and Christina Stavridi, the mission is personal. “I’ve lost family members [to polluted air]. Oro has his own story, Christina has her own story, and our other core team member Angel just had kids. All of us have our own connection to our mission. And with that, we've developed a really strong company culture,” he says.

Today, Carbon Limit is evolving to create sustainable solutions for the built environment. Their flagship product, CaptureCrete, is an additive that gives concrete the ability to capture and store CO2 directly from the air.

Carbon Limit’s initial prototype (a portable shipping container fitted with solar panels, filtered media, and intake fans) was a direct air capture system. Because that business model depended on tax credits and carbon credits, the team decided to pivot. “We took our original technology, which was always meant to capture CO2 to store in concrete as a permanent storage solution to CO2 in the air, and turned that into concrete technology,” explains Tim. “We’re lowering the carbon footprint of concrete projects and problems, and providing the ability to generate valuable carbon credits. It actually pays to use our technology: you’re quantifiably lowering the carbon footprint and improving the environment, and you can make money from these carbon credits.”

How Carbon Limit uses AI

Combating climate change is a race against time, as cofounder and CMO Oro explains: “We are in an industry that moves at a pace that when technology catches up, sometimes it’s too late.”

“We have found that AI actually is not eliminating, it is creating—it is letting our own people discover things about themselves and possibilities that they didn’t know about,” says Oro. “We embrace AI because we are embracing the future, and we strive to be pioneers.”

Artificial intelligence also brings transparency to a space that can be crowded with unreliable data. “We’re developing tools, specifically the digital MRV, which stands for measurement, reporting, and verification of carbon credits,” says Tim. “There is bad press that there’s a lot of fake or unverified carbon credits being sold, generated, or created.” AI provides real-time, real-world data on the carbon credits, making them visible and quantifiable. Carbon Limit is generating carbon credits with hard tech, bringing trust into tech.

How Carbon Limit uses Google technology

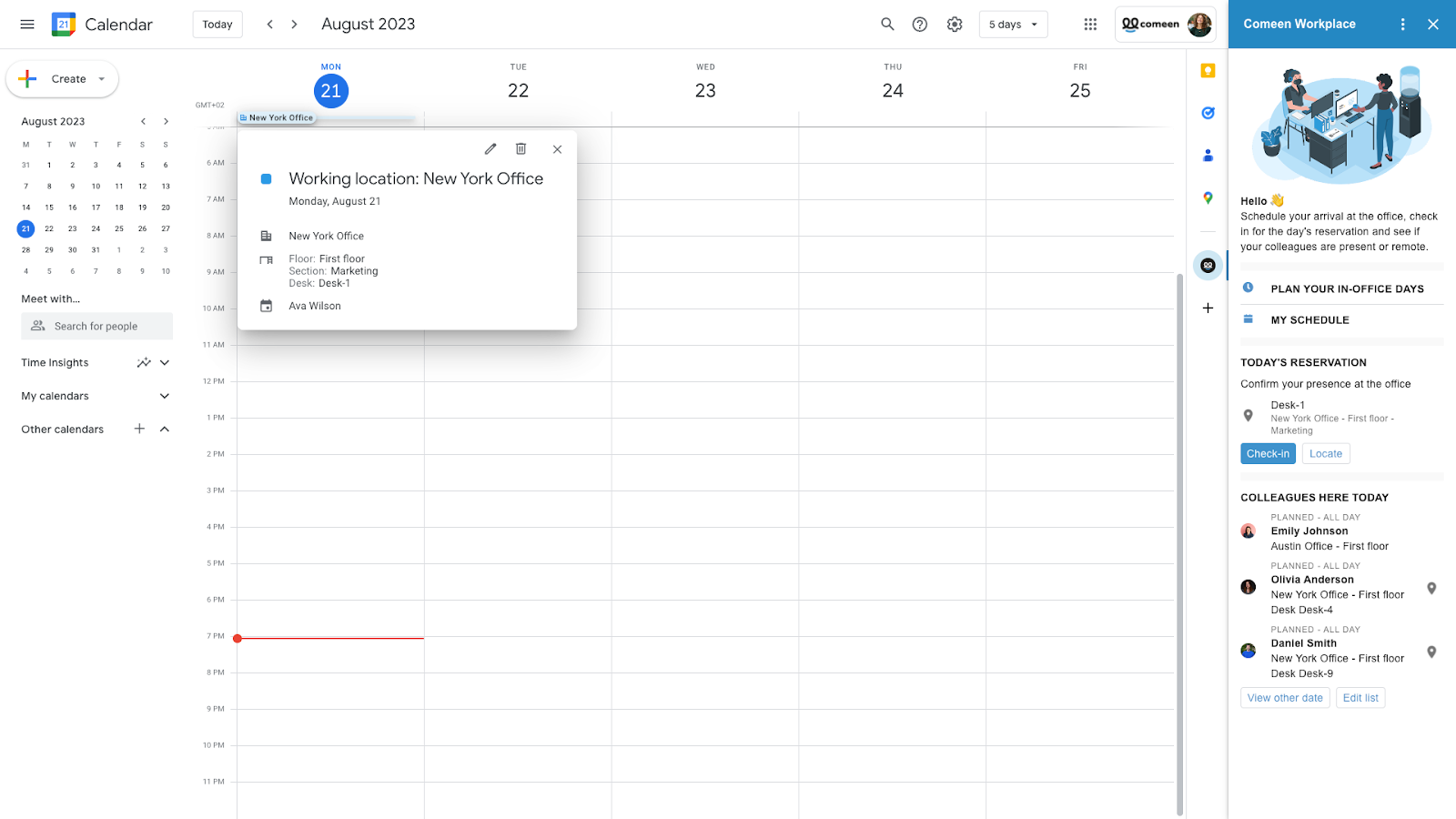

Carbon Limit is a team of developers, programmers, and data scientists working across multiple operating systems, so they needed a centralized system for collaborating. “Google Workspace has allowed us to build our own CRMs with Google Sheets and Google Docs, which we’ve found to be the easiest way to onboard quickly. Google has been an amazing tool for us to communicate internally.” Christina adds, “We have a small but diverse team with ages that vary. Not every single team member is used to using the same tools, so the way Oro has onboarded the team and utilized these tools in a customizable way where they’re easily adoptable and used by every single team member to optimize our work has been super beneficial.”

Additionally, the Carbon Limit team uses Google data when working with their CO2-related training data, and trains their models in Google Colab. “We have some models that were made in Python, but utilizing Google Cloud has helped us predict models faster,” says Oro.

Participating in Google for Startups Accelerator: Climate Change

Before starting the Google for Startups Accelerator: Climate Change program, the Carbon Limit team had considered integrating artificial intelligence (AI) and machine learning (ML) into their process but wanted to be sure it was the right decision. With Google mentorship and support, they committed fully to AI and ML algorithms. “Accelerator: Climate Change helped us realize exactly what we needed to do,” says Oro.

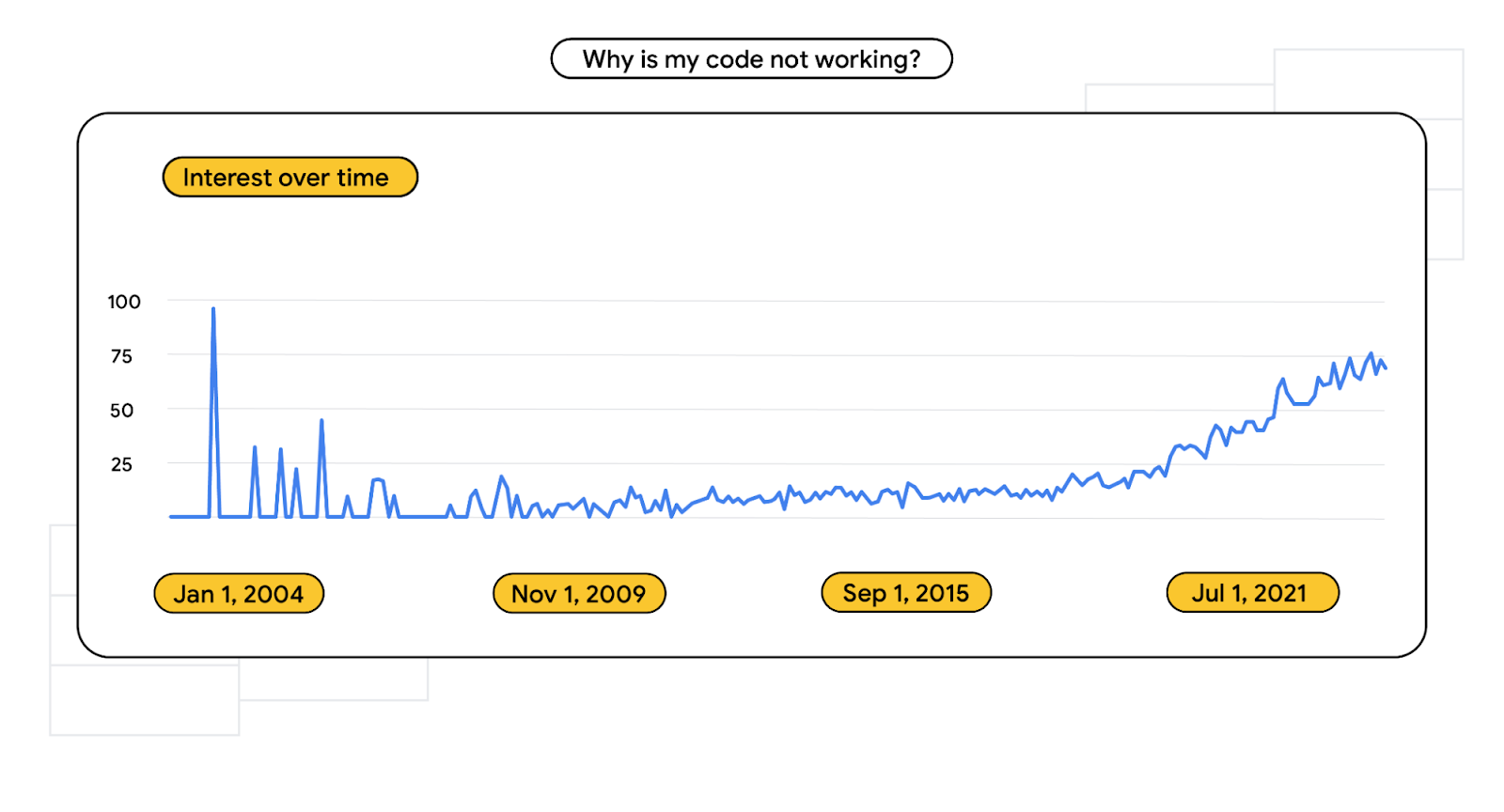

Participating in the program also gave Carbon Limit access to resources that helped enhance their SEO. “We learned how to increment our backlinks and how to improve performance, which has been extremely helpful to put us on the map. Our whole backbone has been built thanks to Google Workspace,” says Oro.

“The Google for Startups Accelerator program gave us valuable resources and guidance on what we can do, how we can do it, and what not to do,” says Tim. “The mentorship and learning from people who developed the technology, use the technology, and work with it every day was invaluable for us.” Christina adds, “The mentors also helped us refine our pitch when communicating our solution on different platforms. That was very useful to understand how to speak to different customers and investors.”

The program also led to a new client for Carbon Limit: Google. “That was critical because with Google as an early adopter, that helped us build a significant amount of credibility and validation,” Tim tells us.

What’s next for Carbon Limit

Looking ahead, Carbon Limit will be launching a new technology that can be used in data centers to mitigate electricity use as well as reduce and remove CO2 pollution.

“We went from a carbon capture solution to sustainable solutions because we wanted to go even bigger,” says Tim. “We want to inspire others to do what we’re doing and help create more awareness and a more environmentally friendly world.”

Tim shares, “I love what I do. I love to be able to invent something that didn’t exist. But more importantly, it helps protect my family, my loved ones, future generations, and the environment. And I get to do it with this amazing group of people at Carbon Limit.”

Learn about how to get involved in Google accelerator programs here.

Posted by Brian Craft, Satish Shreenivasa, Huikun Zhang, Manisha Arora and Paul Cubre – gTech Data Science Team

Posted by Bradford Lee – Product Marketing Manager, Augmented Reality, and Ahsan Ashraf – Product Marketing Manager, Google Maps Platform

Posted by Google for Developers

Posted by Yariv Adan, Director of Cloud Conversational AI and Pati Jurek, Google for startups Accelerator Regional Lead

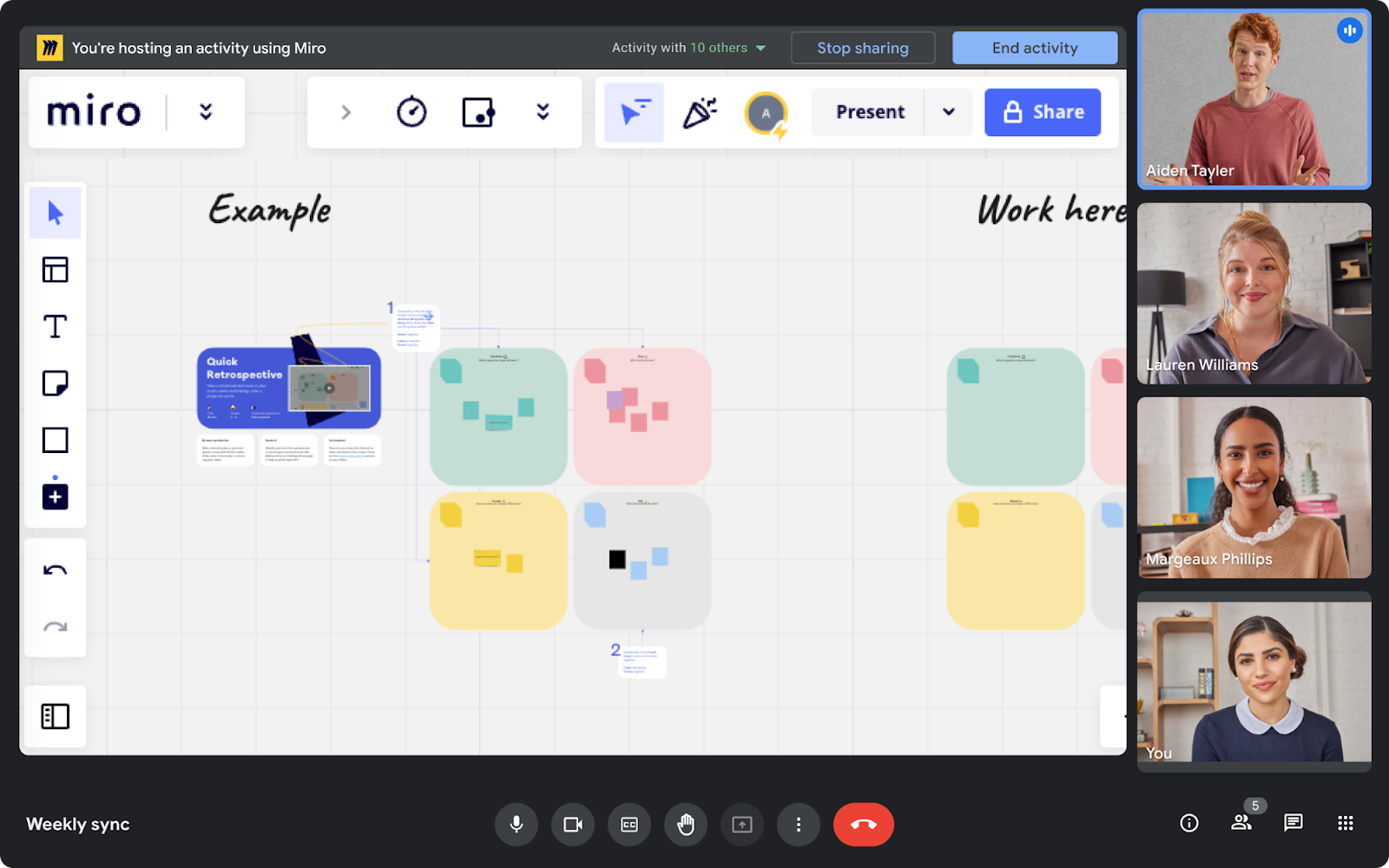

Posted by Chanel Greco, Developer Advocate
