Posted by Jessica Dene Earley-Cha, Developer Relations Engineer

Like many other people who use their smartphone to make their lives easier, I’m way more likely to use an app that adapts to my behavior and is customized to fit me. Android apps can already support some personalization: for example, long-pressing an app icon brings up a list of common user journeys. When I long-press my Audible app (an online audiobook and podcast service), it gives me a shortcut to the book I’m currently listening to; right now that is Daring Greatly by Brené Brown.

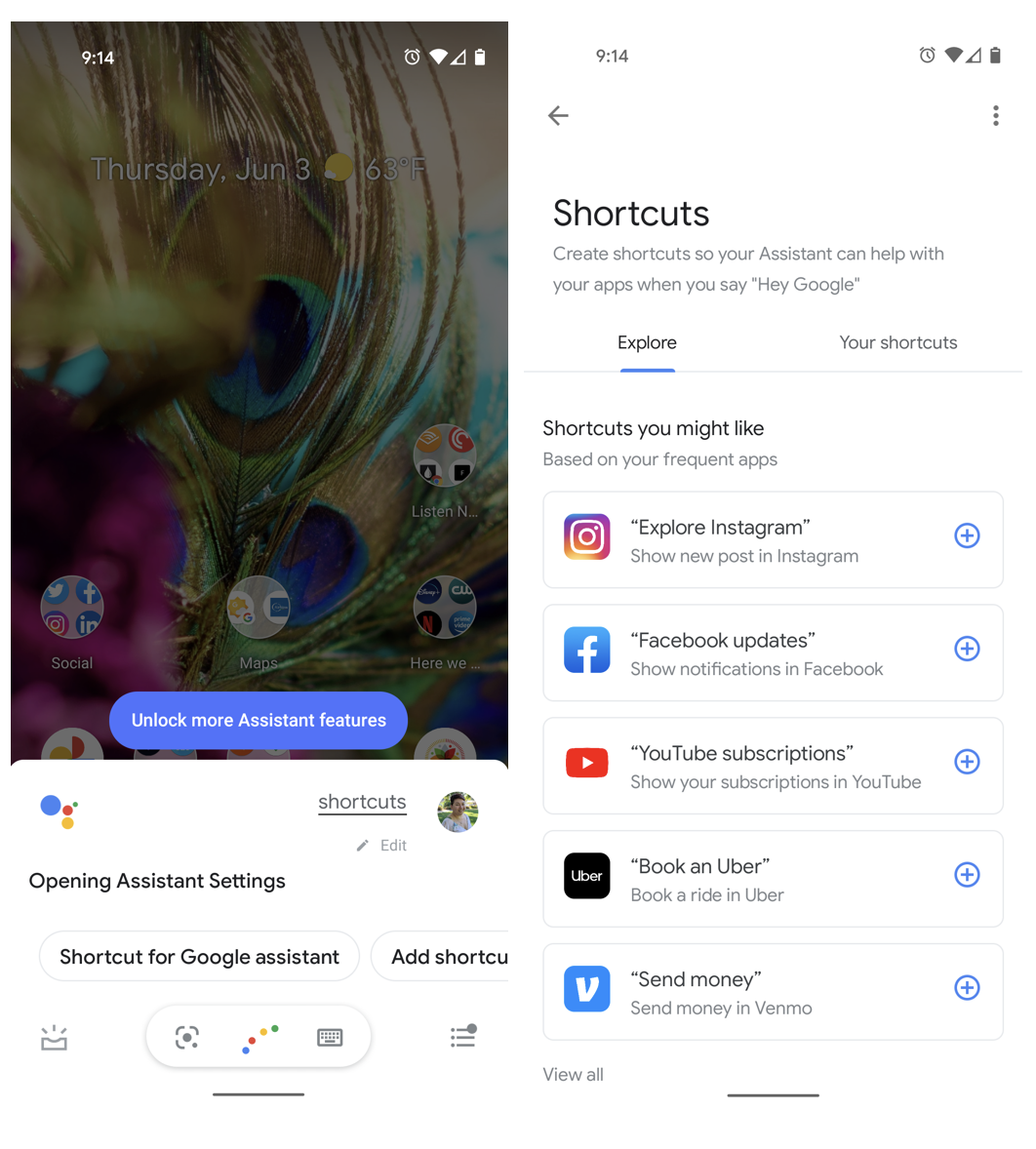

Now, imagine if these shortcuts could also be triggered by a voice command – and, when relevant to the user, show up in Google Assistant for easy use.

Wouldn't that be lovely?

Dynamic shortcuts on a mobile device

Well, now you can do that with App Actions by pushing dynamic shortcuts to the Google Assistant. Let’s go over what Shortcuts are, what happens when you push dynamic shortcuts to Google Assistant, and how to do just that!

Android Shortcuts

As an Android developer, you're most likely familiar with shortcuts. Shortcuts give your users the ability to jump into a specific part of your app. For cases where the destination in your app is based on individual user behavior, you can use a dynamic shortcut to jump to a specific thing the user was previously working with. For example, let’s consider a ToDo app, where users can create and maintain their ToDo lists. Since the items in a ToDo list are unique to each user, you can use dynamic shortcuts so that each user's shortcuts are based on the items in their own list.

Below is a snippet of an Android dynamic shortcut for the fictional ToDo app.

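    import androidx.core.content.pm.ShortcutInfoCompat
    import androidx.core.content.pm.ShortcutManagerCompat
    import androidx.core.graphics.drawable.IconCompat

    // `intent` is the Intent that opens this task in the app.
    val shortcut = ShortcutInfoCompat.Builder(context, task.id)
        .setShortLabel(task.title)
        .setLongLabel(task.title)
        .setIcon(IconCompat.createWithResource(context, R.drawable.icon_active_task))
        .setIntent(intent)
        .build()

    // Publish the shortcut, or update it if one with this ID already exists.
    ShortcutManagerCompat.pushDynamicShortcut(context, shortcut)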

Dynamic Shortcuts for App Actions

If you're pushing dynamic shortcuts, it's a short hop to make those same shortcuts available for use by Google Assistant. You can do that by adding the Google Shortcuts Integration library and a few lines of code.
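Adding the library is a dependency change in your module's build file. Here is a minimal sketch using the Gradle Kotlin DSL; the version numbers are assumptions for illustration, so check the AndroidX release notes for the current ones.

```kotlin
// build.gradle.kts (module level)
dependencies {
    // Provides ShortcutInfoCompat and ShortcutManagerCompat.
    implementation("androidx.core:core:1.6.0")
    // Google Shortcuts Integration library; versions here are placeholders.
    implementation("androidx.core:core-google-shortcuts:1.0.1")
}
```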

To extend a dynamic shortcut to Google Assistant through App Actions, two Jetpack modules need to be added, and the dynamic shortcut needs to include a call to addCapabilityBinding.

    val shortcut = ShortcutInfoCompat.Builder(context, task.id)
        .setShortLabel(task.title)
        .setLongLabel(task.title)
        .setIcon(IconCompat.createWithResource(context, R.drawable.icon_active_task))
        // Bind the shortcut to the GET_THING capability, using the task title as the thing.name value.
        .addCapabilityBinding("actions.intent.GET_THING", "thing.name", listOf(task.title))
        .setIntent(intent)
        .build()

    ShortcutManagerCompat.pushDynamicShortcut(context, shortcut)

The addCapabilityBinding method binds the dynamic shortcut to a capability. Capabilities are the declared ways a user can launch your app to a requested section. If you don’t already have App Actions implemented, you’ll need to add capabilities to your shortcuts.xml file. Each capability expresses a relevant feature of your app and contains a Built-In Intent (BII). BIIs are language models for voice commands that Assistant already understands, and linking a BII to a shortcut allows Assistant to use the shortcut as the fulfillment for a matching command. In other words, capabilities tell Assistant what to listen for and how to launch the app.

In the example above, addCapabilityBinding binds the dynamic shortcut to the actions.intent.GET_THING BII. When a user asks for one of the items in their ToDo app, Assistant processes the request and triggers the capability with the GET_THING BII that is declared in the app’s shortcuts.xml.

    <shortcuts xmlns:android="http://schemas.android.com/apk/res/android">
        <capability android:name="actions.intent.GET_THING">
            <intent
                android:action="android.intent.action.VIEW"
                android:targetPackage="YOUR_UNIQUE_APPLICATION_ID"
                android:targetClass="YOUR_TARGET_CLASS">
                <!-- Eg. name = the ToDo item -->
                <parameter
                    android:name="thing.name"
                    android:key="name"/>
            </intent>
        </capability>
    </shortcuts>
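When the capability fires, the target class still has to turn the requested name into an actual ToDo item. The sketch below shows one way to do that lookup, assuming the value arrives as a string extra under the key declared by android:key ("name"). The Task class, the EXTRA_NAME constant, and findTaskByName are hypothetical names introduced for illustration; they are not part of the App Actions API.

```kotlin
// Hypothetical model for a ToDo item; the article's `task` object is assumed to look similar.
data class Task(val id: String, val title: String)

// Matches android:key="name" in the shortcuts.xml capability above.
const val EXTRA_NAME = "name"

/**
 * Returns the task whose title matches the requested name,
 * ignoring case and surrounding whitespace, or null when nothing matches.
 */
fun findTaskByName(tasks: List<Task>, name: String?): Task? {
    val wanted = name?.trim().orEmpty()
    if (wanted.isEmpty()) return null
    return tasks.firstOrNull { it.title.trim().equals(wanted, ignoreCase = true) }
}
```

In an Activity, the name would typically be read with intent.getStringExtra(EXTRA_NAME) and passed to findTaskByName along with the user's tasks. A null result is a reasonable cue to fall back to the full list instead of a single item.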

So in summary, the process to add dynamic shortcuts looks like this:

1. Configure App Actions by adding two Jetpack modules (the ShortcutManagerCompat library and the Google Shortcuts Integration library). Then associate the shortcut with a Built-In Intent (BII) in your shortcuts.xml file. Finally, push the dynamic shortcut from your app.

2. Two major things happen when you push your dynamic shortcuts to Assistant:

- Users can open dynamic shortcuts through Google Assistant, fast-tracking them to your content.

- During contextually relevant times, Assistant can proactively suggest your Android dynamic shortcuts to users, displaying them on Assistant-enabled surfaces.

Not too bad. I don’t know about you, but I like to test out new functionality in a small app first. You're in luck! We recently launched a codelab that walks you through this whole process.

Looking for more resources to help improve your understanding of App Actions? We have a new learning pathway that walks you through the product, including the dynamic shortcuts that you just read about. Let us know what you think!

Thanks for reading! To share your thoughts or questions, join us on Reddit at r/GoogleAssistantDev.

Follow @ActionsOnGoogle on Twitter for more of our team's updates, and tweet using #AppActions to share what you’re working on. Can’t wait to see what you build!