Today, we are thrilled to announce the stable release of Android Studio Ladybug 🐞 Feature Drop (2024.2.2)!

Accelerate your productivity with Gemini in Android Studio, Animation Preview support for Wear OS Tiles, App Links Assistant and much more. All of these new features are designed to help you build high-quality Android apps faster.

Read on to learn more about all the updates, quality improvements, and new features across your key workflows in Android Studio Ladybug Feature Drop, and download the latest stable version today to try them out!

Gemini in Android Studio

Gemini Code Transforms

Gemini Code Transforms can help you modify, optimize, or add code to your app with AI assistance. Simply right-click in your code editor and select "Gemini > Generate code" or highlight code and select "Gemini > Transform selected code." You can also use the keyboard shortcut Ctrl+\ (⌘+\ on macOS) to bring up the Gemini prompt. Describe the changes you want to make to your code, and Gemini will suggest a code diff, allowing you to easily review and accept only the suggestions you want.

With Gemini Code Transforms, you can simplify complex code, perform specific code transformations, or even generate new functions. You can also refine the suggestions, iterating with Gemini until the code does what you want. It's an AI coding assistant right in your editor, helping you write better code more efficiently.
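As an illustration, here is the kind of "simplify this code" request you might make. Both functions below are hypothetical and hand-written to show a before/after pair; they are not actual Gemini output, which will vary with your prompt and your code.

```kotlin
// Hypothetical "before" version: manual index loop with a mutable accumulator.
fun totalOfEvenSquaresVerbose(numbers: List<Int>): Int {
    var total = 0
    for (i in 0 until numbers.size) {
        val n = numbers[i]
        if (n % 2 == 0) {
            total = total + n * n
        }
    }
    return total
}

// A possible "after" version, the kind of simplification you might accept
// from a suggested diff: same behavior, expressed with collection operations.
fun totalOfEvenSquares(numbers: List<Int>): Int =
    numbers.filter { it % 2 == 0 }.sumOf { it * it }
```

Whatever the suggested diff looks like, it is worth confirming (for example with a unit test) that the transformed code behaves exactly like the original before accepting it.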

Rename

Gemini in Android Studio enhances your workflow with intelligent assistance for common tasks. When renaming a single variable, class, or method from the code editor, the "Refactor > Rename" action uses Gemini to suggest contextually appropriate names, making it smoother and more efficient to refactor names as you’re coding in the editor.

Rethink

For larger renaming refactors, Gemini can "Rethink variable names" across your whole file. This feature analyzes your code and suggests more intuitive and descriptive names for variables and methods, improving readability and maintainability.
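To picture what a file-wide rename pass can improve, consider the hypothetical function below, shown with terse names and then with the kind of descriptive names such a pass might propose. The names and the discount logic are illustrative assumptions, not suggestions produced by Gemini.

```kotlin
// Hypothetical "before": terse names that hide the intent of each value.
fun calc(a: Double, b: Int): Double {
    val x = a * b
    val y = if (b >= 10) x * 0.1 else 0.0
    return x - y
}

// Hypothetical "after": the same logic with descriptive identifiers.
// Only the names differ; the arithmetic is unchanged.
fun calculateOrderTotal(unitPrice: Double, quantity: Int): Double {
    val subtotal = unitPrice * quantity
    val bulkDiscount = if (quantity >= 10) subtotal * 0.1 else 0.0
    return subtotal - bulkDiscount
}
```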

Commit Message

Gemini now assists with commit messages. When committing changes to version control, it analyzes your code modifications and suggests a detailed commit message.

Generate Documentation

Gemini in Android Studio makes documenting your code easier than ever. To generate clear and concise documentation, select a code snippet, right-click in the editor and choose "Gemini > Document Function" (or "Document Class" or "Document Property", depending on the context). Gemini will generate a draft that you can then refine and perfect before accepting the changes. This streamlined process helps you create informative documentation quickly and efficiently.
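For a sense of the result, below is a KDoc comment of the kind a "Document Function" draft might contain. It was written by hand for a hypothetical `averageOrNull` function; Gemini's actual draft depends on your code, and you should still review it before accepting.

```kotlin
/**
 * Returns the arithmetic mean of [values], or `null` if [values] is empty.
 *
 * @param values the numbers to average.
 * @return the mean of [values], or `null` when there is nothing to average.
 */
fun averageOrNull(values: List<Double>): Double? =
    if (values.isEmpty()) null else values.sum() / values.size
```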

Debug

Animation Preview support for Wear OS Tiles

Animation Preview support for Wear OS Tiles helps you visualize and debug tile animations with ease. It provides a real-time view of your animations, allowing you to preview them, control playback with options like play, pause, and speed adjustment, and inspect key properties such as initial/end states and animation curves. You can even dynamically modify animation code and instantly observe the results within the inspector, streamlining the debugging and refinement process.

Wear Health Services

The Wear Health Services feature in Android Studio simplifies the process of testing health and fitness apps by enabling Wear Health Services within the emulator. You can now easily customize various parameters for a given exercise such as heart rate, distance, and speed without needing a physical device or performing the activity itself. This streamlines the development and testing workflow, allowing for faster iteration and more efficient debugging of health-related features.

Optimize

App Links Assistant

App Links Assistant simplifies implementing app links by generating a valid JSON file that fixes broken deep links for your app. You can review the generated file and then upload it to your website, resolving issues quickly. This eliminates the need to write the JSON file by hand, saving you time and effort. The tool also lets you compare your existing JSON file with a newly generated one to easily identify any discrepancies.
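For reference, the file involved is a Digital Asset Links statement list, typically hosted at `https://<your-domain>/.well-known/assetlinks.json`. The sketch below builds such a statement in plain Kotlin and compares fingerprint sets, loosely mirroring the "compare existing and generated files" workflow. The function names, package name, and fingerprints are hypothetical placeholders, and this is not how Android Studio implements the feature.

```kotlin
// Hypothetical helper: renders a Digital Asset Links statement list for one app.
// The JSON shape (relation / target / namespace / package_name /
// sha256_cert_fingerprints) follows the format used for Android App Links.
fun buildAssetLinksJson(packageName: String, fingerprints: List<String>): String {
    val fingerprintArray = fingerprints.joinToString(", ") { "\"$it\"" }
    return """
        [{
          "relation": ["delegate_permission/common.handle_all_urls"],
          "target": {
            "namespace": "android_app",
            "package_name": "$packageName",
            "sha256_cert_fingerprints": [$fingerprintArray]
          }
        }]
    """.trimIndent()
}

// Hypothetical comparison result: fingerprints the app expects but the hosted
// file lacks, and fingerprints the hosted file lists that the app does not expect.
data class FingerprintDiff(val missing: Set<String>, val unexpected: Set<String>)

fun diffFingerprints(hosted: Set<String>, expected: Set<String>): FingerprintDiff =
    FingerprintDiff(missing = expected - hosted, unexpected = hosted - expected)
```

A missing fingerprint is a common cause of failed link verification, for example when the file lists only a debug certificate and omits the release signing key.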

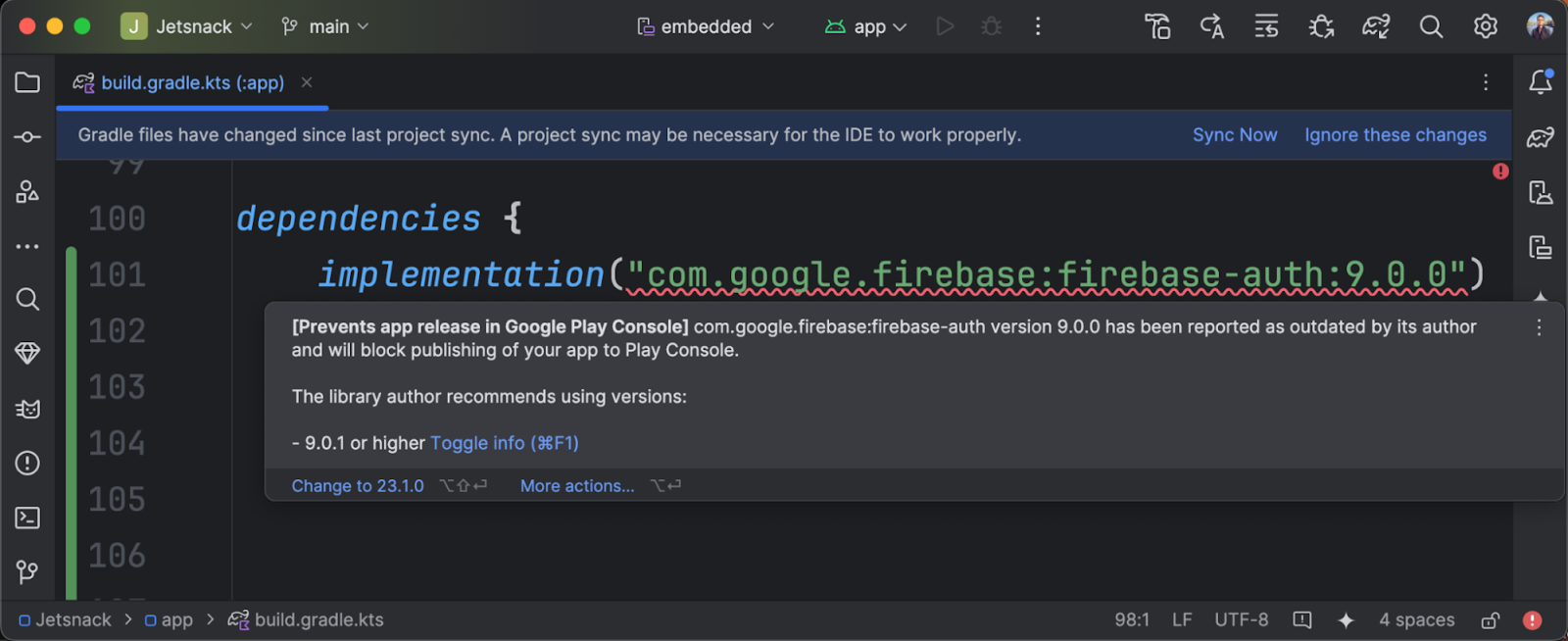

Google Play SDK Insights Integration

Android Studio now provides enhanced lint warnings for public SDKs from the Google Play SDK Index and the Google Play SDK Console, helping you identify and address potential issues. These warnings alert you if an SDK is outdated, violates Google Play policies, or has known security vulnerabilities. Furthermore, Android Studio provides helpful quick fixes and recommended version ranges whenever possible, making it easier to update your dependencies and keeping your app more secure and compliant.
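To make the idea of a recommended version range concrete, here is a minimal sketch of checking whether a dependency version meets a minimum version. The `parseVersion` and `isAtLeast` helpers and the plain dot-separated numeric version format are assumptions for illustration; Android Studio's lint checks rely on SDK Index data, not on this code.

```kotlin
// Hypothetical helper: parses a plain "major.minor.patch" version string.
// Pre-release suffixes such as "-alpha01" are not handled in this sketch.
fun parseVersion(version: String): List<Int> =
    version.split(".").map { it.toInt() }

// Returns true when [version] is greater than or equal to [minimum],
// comparing numeric components left to right and treating missing ones as 0.
fun isAtLeast(version: String, minimum: String): Boolean {
    val v = parseVersion(version)
    val m = parseVersion(minimum)
    for (i in 0 until maxOf(v.size, m.size)) {
        val a = v.getOrElse(i) { 0 }
        val b = m.getOrElse(i) { 0 }
        if (a != b) return a > b
    }
    return true
}
```

Comparing components numerically matters here: a plain string comparison would wrongly rank "2.9.1" above "2.10.0".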

Quality improvements

Beyond new features, we also continued to improve the overall quality and stability of Android Studio. In fact, the Android Studio team addressed over 770 bugs during the Ladybug Feature Drop development cycle.

IntelliJ platform update

Android Studio Ladybug Feature Drop (2024.2.2) includes the IntelliJ 2024.2 platform release, which has many new features such as more intuitive full line code completion suggestions, a preview in the Search Everywhere dialog and improved log management for the Java** and Kotlin programming languages.

See the full IntelliJ 2024.2 release notes.

Summary

To recap, Android Studio Ladybug Feature Drop includes the following enhancements and features:

Gemini in Android Studio

- Gemini Code Transforms

- Rename

- Rethink

- Commit Message

- Generate Documentation

Debug

- Animation Preview support for Wear OS Tiles

- Wear Health Services

Optimize

- App Links Assistant

- Google Play SDK Insights Integration

Quality Improvements

- 770+ bugs addressed

IntelliJ Platform Update

- More intuitive full line code completion suggestions

- Preview in the Search Everywhere dialog

- Improved log management for the Java and Kotlin programming languages

Getting Started

Ready for next-level Android development? Download Android Studio Ladybug Feature Drop and unlock these cutting-edge features today. As always, your feedback is important to us – check known issues, report bugs, suggest improvements, and be part of our vibrant community on LinkedIn, Medium, YouTube, or X. Let's build the future of Android apps together!

**Java is a trademark or registered trademark of Oracle and/or its affiliates.
