Much of the world’s information is stored in the form of tables, which can be found on the web or in databases and documents. These might include anything from technical specifications of consumer products to financial and country development statistics, sports results and much more. Currently, one needs to manually look at these tables to find the answer to a question or rely on a service that gives answers to specific questions (e.g., about sports results). This information would be much more accessible and useful if it could be queried through natural language.

For example, the following figure shows a table with a number of questions that people might want to ask. The answer to these questions might be found in one or more cells of the table (“Which wrestler had the most number of reigns?”), or might require aggregating multiple table cells (“How many world champions are there with only one reign?”).

Figure: A table and questions with the expected answers. Answers can be selected (#1, #4) or computed (#2, #3).

A common approach to this problem is semantic parsing, in which a natural language question is translated into a SQL-like query, such as “select count(*) where column("No. of reigns") == 1;”, and then executed to produce the answer. This approach often requires substantial engineering to generate syntactically and semantically valid queries, and it is difficult to scale to arbitrary questions rather than questions about very specific tables (such as sports results).

In “TAPAS: Weakly Supervised Table Parsing via Pre-training”, accepted at ACL 2020, we take a different approach that extends the BERT architecture to encode the question jointly with the tabular data structure, resulting in a model that can point directly to the answer. Instead of a model that works only for a single style of table, this approach yields a model that can be applied to tables from a wide range of domains. After pre-training on millions of Wikipedia tables, we show that our approach exhibits competitive accuracy on three academic table question-answering (QA) datasets. Additionally, to facilitate more exciting research in this area, we have open-sourced the code for training and testing the models, as well as the models pre-trained on Wikipedia tables, available at our GitHub repo.
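For contrast with the pointing approach, the execution step of the query-based pipeline can be sketched in a few lines of Python. This is only an illustration: the table rows are made up, and `count_where` is a hypothetical helper standing in for a real query engine. The hard part, translating an arbitrary question into such a query, is not shown.

```python
# Toy table. The rows are invented for illustration and are not the
# table from the figure.
TABLE = [
    {"Name": "Wrestler A", "No. of reigns": 1},
    {"Name": "Wrestler B", "No. of reigns": 3},
    {"Name": "Wrestler C", "No. of reigns": 1},
    {"Name": "Wrestler D", "No. of reigns": 2},
]


def count_where(table, column, value):
    """Execute: select count(*) where column(<column>) == <value>."""
    return sum(1 for row in table if row[column] == value)


# "How many world champions are there with only one reign?"
answer = count_where(TABLE, "No. of reigns", 1)
print(answer)
```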

How to Process a Question

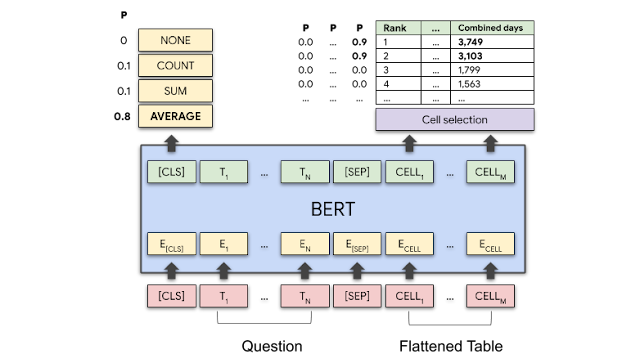

To process a question such as “Average time as champion for top 2 wrestlers?”, our model jointly encodes the question as well as the table content row by row using a BERT model that is extended with special embeddings to encode the table structure.

The key addition to the transformer-based BERT model is the set of extra embeddings used to encode the structured input. We rely on learned embeddings for the column index, the row index and one special rank index, which indicates the order of elements in numerical columns. The figure illustrates how the question is encoded together with the small table shown on the left, and how all of these embeddings are added together at the input and fed into the transformer layers. Each cell token has a special embedding that indicates its row, column and numeric rank within the column.
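The structural indices described above can be sketched as follows. This is a minimal illustration, not the released implementation: whitespace tokenization, the convention that question tokens and non-numeric cells get index 0, and the randomly initialised lookup tables (stand-ins for learned embeddings) are all assumptions made for the example.

```python
import random


def rank_ids(values):
    """Rank numeric cells within one column: 1 = smallest, 0 = not numeric."""
    numeric = sorted({v for v in values if isinstance(v, (int, float))})
    order = {v: i + 1 for i, v in enumerate(numeric)}
    return [order.get(v, 0) for v in values]


def encode(question, header, rows):
    """Flatten question and table into (token, row, column, rank) tuples."""
    tokens = [(tok, 0, 0, 0) for tok in question.split()]
    for c, name in enumerate(header, start=1):
        tokens += [(tok, 0, c, 0) for tok in name.split()]
    ranks = [rank_ids([row[c] for row in rows]) for c in range(len(header))]
    for r, row in enumerate(rows, start=1):
        for c, cell in enumerate(row, start=1):
            rank = ranks[c - 1][r - 1]
            tokens += [(tok, r, c, rank) for tok in str(cell).split()]
    return tokens


def embed(tokens, dim=4, seed=0):
    """Sum token, row, column and rank vectors for every input position."""
    rng = random.Random(seed)
    tables = [{}, {}, {}, {}]  # one lookup table per feature
    vectors = []
    for features in tokens:
        parts = []
        for table, key in zip(tables, features):
            if key not in table:
                table[key] = [rng.uniform(-1, 1) for _ in range(dim)]
            parts.append(table[key])
        vectors.append([sum(xs) for xs in zip(*parts)])
    return vectors


# Hypothetical two-row table; names and numbers are invented.
tokens = encode("top 2 wrestlers ?", ["Name", "Days"],
                [["Wrestler A", 120], ["Wrestler B", 45]])
vectors = embed(tokens)
for tok in tokens:
    print(tok)
```

Because the structural vectors are simply added to the token vector, the transformer layers themselves need no changes; the table layout is carried entirely by the input representation.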

Using a method similar to how BERT is trained on text, we pre-trained our model on 6.2 million table-text pairs extracted from the English Wikipedia. During pre-training, the model learns to restore words in both table and text that have been replaced with a mask. We find that the model can do this with relatively high accuracy (71.4% of the masked tokens are restored correctly for tables unseen during training).
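The masking objective can be illustrated with a small sketch. The 15% masking rate is borrowed from the original BERT setup and is an assumption here, as are the helper names `mask_tokens` and `restore_accuracy`; the sketch masks individual tokens independently and does not model any further masking strategy.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Replace a random subset of tokens with MASK and remember the originals."""
    rng = random.Random(seed)
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = tok  # position -> token the model must restore
        else:
            masked.append(tok)
    return masked, targets


def restore_accuracy(predictions, targets):
    """Fraction of masked positions whose original token was predicted."""
    if not targets:
        return 0.0
    correct = sum(predictions.get(i) == tok for i, tok in targets.items())
    return correct / len(targets)


# Text and flattened table tokens share one sequence, so masks can fall on either.
sequence = "list of champions [SEP] Name Days Wrestler A 120".split()
masked, targets = mask_tokens(sequence, mask_prob=0.3, seed=1)
print(masked)
```

The accuracy reported above corresponds to `restore_accuracy` evaluated on the model's predictions for tables it has not seen during training.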

Learning from Answers Only

During fine-tuning, the model learns how to answer questions from a table. This is done through training with either strong or weak supervision. In the case of strong supervision, for a given table and question, one must provide the cells to select and the aggregation operation to apply (e.g., sum or count), a time-consuming and laborious process. More commonly, one trains using weak supervision, where only the correct answer (e.g., 3426 for the question in the example above) is provided. In this case, the model attempts to find an aggregation operation and cells that produce an answer close to the correct answer. This is done by computing the expectation over all the possible aggregation decisions and comparing it with the true result. The weak supervision scenario is beneficial because it allows non-experts to provide the data needed to train the model and takes less time than strong supervision.
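The expectation over aggregation decisions can be sketched as follows. This is an illustration under stated assumptions rather than the training code: the three operators, the "soft" versions of count, sum and average computed from per-cell selection probabilities, and the Huber loss used for the comparison are choices made for this example, and the cell values are invented so that the average of the two selected cells equals 3426.

```python
def expected_answer(cell_probs, cell_values, op_probs):
    """Expected result over aggregation operators, given soft cell selection."""
    soft_count = sum(cell_probs)
    soft_sum = sum(p * v for p, v in zip(cell_probs, cell_values))
    soft_avg = soft_sum / soft_count if soft_count > 0 else 0.0
    results = {"COUNT": soft_count, "SUM": soft_sum, "AVERAGE": soft_avg}
    return sum(op_probs[op] * results[op] for op in results)


def huber_loss(prediction, target, delta=1.0):
    """Quadratic near zero, linear for large errors (assumed loss choice)."""
    err = abs(prediction - target)
    if err <= delta:
        return 0.5 * err * err
    return delta * (err - 0.5 * delta)


# Invented column of numeric cells; the model selects the first two.
values = [3749, 3103, 1000]
cell_probs = [1.0, 1.0, 0.0]
op_probs = {"COUNT": 0.0, "SUM": 0.0, "AVERAGE": 1.0}

prediction = expected_answer(cell_probs, values, op_probs)
print(prediction, huber_loss(prediction, 3426))
```

Since every step is a weighted sum or a ratio of weighted sums, the expected answer is differentiable with respect to both the cell and operator probabilities, which is what allows training from the answer alone.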

Results

We applied our model to three datasets, SQA, WikiTableQuestions (WTQ) and WikiSQL, and compared it to the performance of the top three state-of-the-art (SOTA) models for parsing tabular data. The comparison models included Min et al. (2019) for WikiSQL, Wang et al. (2019) for WTQ and our own previous work for SQA (Mueller et al., 2019). For all datasets, we report the answer accuracy on the test sets for the weakly supervised training setup. For SQA and WikiSQL we used our base model pre-trained on Wikipedia, while for WTQ we found it beneficial to additionally pre-train on the SQA data. Our best models outperform the previous SOTA for SQA by more than 12 points and the previous SOTA for WTQ by more than 4 points, and perform similarly to the best model published on WikiSQL.

Test answer accuracy for the weakly-supervised setup on three academic TableQA datasets.

This work was carried out by Jonathan Herzig, Paweł Krzysztof Nowak, Thomas Müller, Francesco Piccinno and Julian Martin Eisenschlos in the Google AI language group in Zurich. We would like to thank Yasemin Altun, Srini Narayanan, Slav Petrov, William Cohen, Massimo Nicosia, Syrine Krichene, and Jordan Boyd-Graber for useful comments and suggestions.