Google Cloud uses the open-source KVM hypervisor that has been validated by scores of researchers as the foundation of Google Compute Engine and Google Container Engine, and invests in additional security hardening and protection based on our research and testing experience. Then we contribute back our changes to the KVM project, benefiting the overall open-source community.

Below are the main ways we harden KVM to help improve the safety and security of your applications.

- Proactive vulnerability search: There are multiple layers of security and isolation built into Google’s KVM (Kernel-based Virtual Machine), and we’re always working to strengthen them. Google’s cloud security staff includes some of the world’s foremost experts in KVM security, and has uncovered multiple vulnerabilities in the KVM, Xen and VMware hypervisors over the years. The Google team has historically found and fixed nine vulnerabilities in KVM. During the same time period, the open source community discovered zero vulnerabilities in KVM that impacted Google Cloud Platform (GCP).

- Reduced attack surface area: Google has helped to improve KVM security by removing unused components (e.g., a legacy mouse driver and interrupt controllers) and limiting the set of emulated instructions. This presents a reduced attack and patch surface area for potential adversaries to exploit. We also modify the remaining components for enhanced security.

- Non-QEMU implementation: Google does not use QEMU, the user-space virtual machine monitor and hardware emulator. Instead, we wrote our own user-space virtual machine monitor that has the following security advantages over QEMU:

Simple host and guest architecture support matrix. QEMU supports a large matrix of host and guest architectures, along with different modes and devices that significantly increase complexity. Because we support a single architecture and a relatively small number of devices, our emulator is much simpler. We don’t currently support cross-architecture host/guest combinations, which helps avoid additional complexity and potential exploits.

Strong emphasis on simplicity and testability. Google’s virtual machine monitor is composed of individual components, each designed to be simple and testable. Unit testing leads to fewer bugs in complex systems. QEMU code lacks unit tests and has many interdependencies that would make unit testing extremely difficult.

No history of security problems. QEMU, by contrast, has a long track record of security bugs, such as VENOM, and it's unclear what vulnerabilities may still be lurking in the code.

- Boot and jobs communication: The code provenance processes that we implement help ensure that machines boot to a known good state. Each KVM host generates a peer-to-peer cryptographic key sharing system that it shares with jobs running on that host, helping to make sure that all communication between jobs running on the host is explicitly authenticated and authorized.

- Code provenance: We run a custom binary and configuration verification system that was developed and integrated with our development processes to track what source code is running in KVM, how it was built, how it was configured and how it was deployed. We verify code integrity at every level, from the boot loader, to KVM, to the customers’ guest VMs.

- Rapid and graceful vulnerability response: We've defined strict internal SLAs and processes to patch KVM in the event of a critical security vulnerability. However, in the three years since we released Compute Engine in beta, our KVM implementation has required zero critical security patches. Non-KVM vulnerabilities are rapidly patched through Google's internal infrastructure to help maximize security protection and meet all applicable compliance requirements, and are typically resolved without impact to customers. We notify customers of updates as required by contractual and legal obligations.

- Carefully controlled releases: We have stringent rollout policies and processes for KVM updates driven by compliance requirements and Google Cloud security controls. Only a small team of Google employees has access to the KVM build system and release management control.
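
The code provenance item above describes verifying integrity at every level, from the boot loader to KVM to guest VMs. As a rough illustration only, that idea can be sketched as checking each stage against a list of expected digests. The stage names, the manifest format and the `verify_chain` helper are all hypothetical and are not a description of Google's actual verification system.

```python
import hashlib
import hmac


def digest(blob: bytes) -> str:
    """Return the SHA-256 hex digest of a binary image."""
    return hashlib.sha256(blob).hexdigest()


def verify_chain(stages, manifest):
    """Check each stage, in boot order, against its expected digest.

    stages:   list of (name, image_bytes) pairs (hypothetical layout)
    manifest: dict mapping name -> expected hex digest (hypothetical)
    Returns the name of the first stage that fails, or None if all pass.
    """
    for name, image in stages:
        expected = manifest.get(name)
        # A stage missing from the manifest is treated as a failure.
        if expected is None:
            return name
        # Constant-time comparison of the two hex strings.
        if not hmac.compare_digest(digest(image), expected):
            return name
    return None


# Placeholder byte strings standing in for real binaries.
stages = [
    ("bootloader", b"bootloader-image"),
    ("kvm", b"kvm-image"),
    ("guest", b"guest-image"),
]
manifest = {name: digest(image) for name, image in stages}

# Same stages with a modified middle image.
tampered = [stages[0], ("kvm", b"kvm-image-modified"), stages[2]]

print(verify_chain(stages, manifest))    # None: every stage matches
print(verify_chain(tampered, manifest))  # name of the first failing stage
```

Stopping at the first mismatch mirrors the goal of booting only to a known good state: a later stage is never trusted if an earlier one failed verification.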

There’s a lot more to learn about KVM security at Google. Click the links below for more information.

- KVM Security

- KVM Security Improvements by Andrew Honig

- Performant Security Hardening of KVM by Steve Rutherford

And of course, KVM is just one infrastructure component used to build Google Cloud. We take security very seriously, and hope you’ll entrust your workloads to us.

FAQ

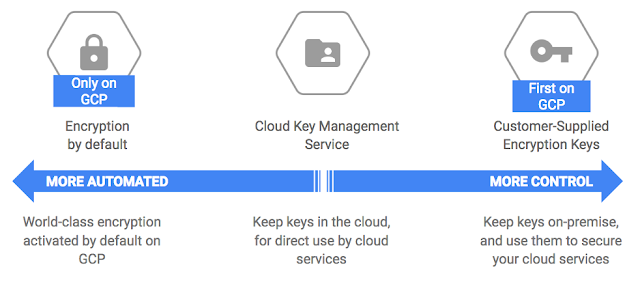

Should I worry about side channel attacks?

We rarely see side channel attacks attempted. Side channel attacks are based on information gained from the physical implementation of a cryptosystem (such as timing and memory access patterns), rather than on brute force or theoretical weaknesses in the algorithms. A large shared infrastructure the size of Compute Engine makes them very impractical for attackers to attempt. To mount this kind of attack, the target VM and the attacker VM have to be co-located on the same physical host, and for any practical attack the attacker has to have some ability to induce execution of the cryptosystem being targeted. One common use for side channel attacks is against cryptographic keys. Side channel attacks that leak information are usually addressed quickly by cryptographic library developers. To help protect against them, we recommend that Google Cloud customers ensure that their cryptographic libraries are supported and always up-to-date.

What about Venom?

Venom affects QEMU. Compute Engine and Container Engine are unaffected because neither uses QEMU.

What about Rowhammer?

The Google Project Zero team led the way in discovering practical Rowhammer attacks against client platforms. Google production machines use double refresh rate to reduce errors, and ECC RAM that detects and corrects Rowhammer-induced errors. 1-bit errors are automatically corrected, and 2-bit errors are detected and cause any potentially offending guest VMs to be terminated. Alerts are generated for any projects that cause an unusual number of Rowhammer errors. Undetectable 3-bit errors are theoretically possible, but extremely improbable. A Rowhammer attack would cause a very large number of alerts for 2-bit and 3-bit errors and would be detected.
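
The error-handling policy described above (correct 1-bit errors, terminate on 2-bit errors, alert on unusual error counts) can be sketched as a small decision function. This is a simplified model for illustration: the action names, the `ALERT_THRESHOLD` value and the per-project counting are assumptions, not Google's actual implementation.

```python
from collections import Counter

# Hypothetical threshold: error events per project before an alert fires.
ALERT_THRESHOLD = 3


def handle_ecc_event(flipped_bits: int) -> str:
    """Map the number of flipped bits in one ECC-protected word to an action."""
    if flipped_bits <= 0:
        return "ok"
    if flipped_bits == 1:
        return "corrected"       # single-bit errors are fixed automatically
    if flipped_bits == 2:
        return "terminate_vm"    # detected but not correctable
    return "possibly_undetected"  # 3-bit errors may escape detection


def projects_to_alert(events, threshold=ALERT_THRESHOLD):
    """Return projects whose count of error events reaches the threshold.

    events: iterable of (project_name, flipped_bits) pairs (hypothetical).
    """
    counts = Counter(project for project, bits in events if bits > 0)
    return sorted(p for p, n in counts.items() if n >= threshold)


events = [
    ("proj-a", 1),
    ("proj-b", 2),
    ("proj-b", 1),
    ("proj-b", 2),
    ("proj-a", 0),
]

for project, bits in events:
    print(project, handle_ecc_event(bits))
print(projects_to_alert(events))
```

In this model a hammering guest would show up in `projects_to_alert` well before it could rely on a rare multi-bit error, which is the detection argument the paragraph above makes.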

A recent paper describes a way to mount a Rowhammer attack using KSM (Kernel Samepage Merging) together with KVM. KSM, the Linux implementation of memory de-duplication, uses a kernel thread that periodically scans memory to find pages with the same contents, mapped from multiple VMs, that are candidates for merging. Memory “de-duping” with KSM can help an attacker locate the area to “hammer” (the physical transistors underlying those bits of data) and target the identical bits on someone else’s VM running on the same physical host. Compute Engine and Container Engine are not vulnerable to this kind of attack, since they do not use KSM. However, if a similar attack is attempted via a different mechanism, we have mitigations in place to detect it.
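
To make the de-duplication step concrete, here is a minimal sketch of how a scanner might find pages with identical contents across VMs. It is a toy model: the real KSM implementation works on kernel memory with its own data structures, and the `find_merge_candidates` helper and the VM names are hypothetical.

```python
from collections import defaultdict

PAGE_SIZE = 4096  # bytes per page in this toy model


def find_merge_candidates(vm_pages):
    """Group pages by content and keep groups that span more than one VM.

    vm_pages: dict mapping VM name -> list of page contents (bytes).
    Returns a list of groups, each a list of (vm_name, page_index) pairs.
    """
    by_content = defaultdict(list)
    for vm, pages in vm_pages.items():
        for index, page in enumerate(pages):
            by_content[page].append((vm, index))

    candidates = []
    for owners in by_content.values():
        # Only content shared by at least two different VMs is of interest.
        if len({vm for vm, _ in owners}) > 1:
            candidates.append(owners)
    return candidates


vm_pages = {
    "vm-a": [b"\x00" * PAGE_SIZE, b"A" * PAGE_SIZE],
    "vm-b": [b"A" * PAGE_SIZE, b"B" * PAGE_SIZE],
}

print(find_merge_candidates(vm_pages))
```

Once such pages are merged into one physical page, two VMs share the same underlying memory cells, which is what makes the merged page an attractive Rowhammer target.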

What is Google doing to reduce the impact of KVM vulnerabilities?

We have evaluated the sources of vulnerabilities discovered to date within KVM. Most of the vulnerabilities have been in code that lives in the kernel for historical reasons, but that can now be moved out without a significant performance impact when running modern operating systems on modern hardware. We’re working on relocating this in-kernel emulation functionality outside of the kernel.

How does the Google security team identify KVM vulnerabilities in their early stage?

We have built an extensive set of proprietary fuzzing tools for KVM. We also do a thorough code review looking specifically for security issues each time we adopt a new feature or version of KVM. As a result, we've found many vulnerabilities in KVM over the past three years. About half of our discoveries come from code review and about half come from our fuzzers.
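
Google's fuzzing tools are proprietary, so the following is only a generic sketch of the technique: feed random inputs to a target and record the ones that fail unexpectedly. The `toy_decode` target, its deliberate bug and the `fuzz` helper are all hypothetical and have nothing to do with KVM's real code.

```python
import random


def toy_decode(data: bytes) -> int:
    """Hypothetical decoder with a deliberate bug: it trusts the first
    byte as an offset and indexes without a bounds check."""
    if not data:
        raise ValueError("empty input")  # graceful, expected rejection
    offset = data[0]
    return data[offset]  # bug: raises IndexError when offset >= len(data)


def safe_decode(data: bytes) -> int:
    """Fixed version: out-of-range offsets are rejected explicitly."""
    if not data:
        raise ValueError("empty input")
    offset = data[0]
    if offset >= len(data):
        raise ValueError("offset out of range")
    return data[offset]


def fuzz(target, iterations=1000, seed=0, max_len=8):
    """Call target with random byte strings and collect unexpected failures."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    crashes = []
    for _ in range(iterations):
        length = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(length))
        try:
            target(data)
        except ValueError:
            pass  # a clean rejection is not a finding
        except Exception as exc:
            crashes.append((data, type(exc).__name__))
    return crashes


buggy_crashes = fuzz(toy_decode)
fixed_crashes = fuzz(safe_decode)
print(len(buggy_crashes) > 0, len(fixed_crashes))
```

Each recorded input is a reproducible test case. Running the same fuzzer against the fixed decoder shows how a fuzzer doubles as a regression check once a bug is patched.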