Managing infrastructure usually involves a web interface or issuing commands in the terminal. These work great for individuals and small teams, but managing infrastructure in this way can be troublesome for larger teams with complex requirements. As more organizations migrate to the cloud, CIOs want hybrid and multi-cloud solutions. Infrastructure as code is one way to manage this complexity.

The open-source tool Terraform, in particular, can help you create, change and upgrade infrastructure at scale more safely and predictably. Created by HashiCorp, Terraform codifies APIs into declarative configuration files that can be shared among team members, edited, reviewed and versioned in the same way that software developers handle application code.

Here's a sample Terraform configuration for creating an instance on Google Cloud Platform (GCP):

    resource "google_compute_instance" "blog" {
      name         = "default"
      machine_type = "n1-standard-1"
      zone         = "us-central1-a"

      disk {
        image = "debian-cloud/debian-8"
      }

      disk {
        type    = "local-ssd"
        scratch = true
      }

      network_interface {
        network = "default"
      }
    }

Because this is a text file, it can be treated the same as application code and manipulated with the same techniques that developers have had for years, including linting, testing, continuous integration, continuous deployment, collaboration, code review, change requests, change tracking, automation and more. This is a big improvement over managing infrastructure with wikis and shell scripts!

Terraform separates the infrastructure planning phase from the execution phase. The terraform plan command performs a dry-run that shows you what will happen. The terraform apply command makes the changes to real infrastructure.

$ terraform plan

+ google_compute_instance.default

can_ip_forward: "false"

create_timeout: "4"

disk.#: "2"

disk.0.auto_delete: "true"

disk.0.disk_encryption_key_sha256: ""

disk.0.image: "debian-cloud/debian-8"

disk.1.auto_delete: "true"

disk.1.disk_encryption_key_sha256: ""

disk.1.scratch: "true"

disk.1.type: "local-ssd"

machine_type: "n1-standard-1"

metadata_fingerprint: ""

name: "default"

self_link: ""

tags_fingerprint: ""

zone: "us-central1-a"

$ terraform apply

google_compute_instance.default: Creating...

can_ip_forward: "" => "false"

create_timeout: "" => "4"

disk.#: "" => "2"

disk.0.auto_delete: "" => "true"

disk.0.disk_encryption_key_sha256: "" => ""

disk.0.image: "" => "debian-cloud/debian-8"

disk.1.auto_delete: "" => "true"

disk.1.disk_encryption_key_sha256: "" => ""

disk.1.scratch: "" => "true"

disk.1.type: "" => "local-ssd"

machine_type: "" => "n1-standard-1"

metadata_fingerprint: "" => ""

name: "" => "default"

network_interface.#: "" => "1"

network_interface.0.address: "" => ""

network_interface.0.name: "" => ""

network_interface.0.network: "" => "default"

self_link: "" => ""

tags_fingerprint: "" => ""

zone: "" => "us-central1-a"

google_compute_instance.default: Still creating... (10s elapsed)

google_compute_instance.default: Still creating... (20s elapsed)

google_compute_instance.default: Creation complete (ID: default)

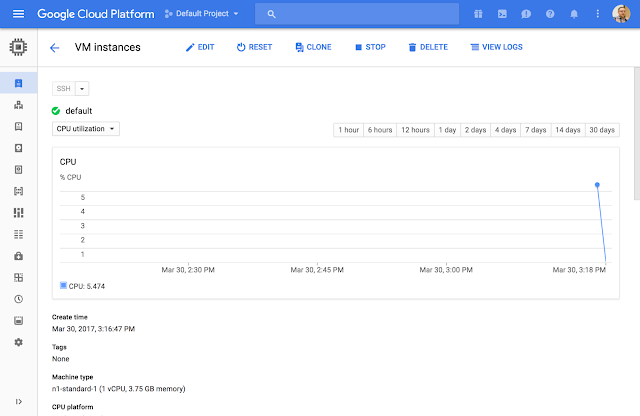

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.This instance is now running on Google Cloud:


Terraform can manage more than just compute instances. At Google Cloud Next, we announced support for GCP APIs to manage projects and folders as well as billing. With these new APIs, Terraform can manage entire projects and many of their resources.

By adding just a few lines of code to the sample configuration above, we create a project tied to our organization and billing account, enable a configurable number of APIs and services on that project and launch the instance inside this newly-created project.

    resource "google_project" "blog" {
      name            = "blog-demo"
      project_id      = "blog-demo-491834"
      billing_account = "${var.billing_id}"
      org_id          = "${var.org_id}"
    }

    resource "google_project_services" "blog" {
      project = "${google_project.blog.project_id}"

      services = [
        "iam.googleapis.com",
        "cloudresourcemanager.googleapis.com",
        "cloudapis.googleapis.com",
        "compute-component.googleapis.com",
      ]
    }

    resource "google_compute_instance" "blog" {
      # ...

      project = "${google_project.blog.project_id}" # <-- new option
    }

Terraform also detects changes to the configuration and only applies the difference of the changes.

    $ terraform apply
    google_compute_instance.default: Refreshing state... (ID: default)
    google_project.my_project: Creating...
      name: "" => "blog-demo"
      number: "" => ""
      org_id: "" => "1012963984278"
      policy_data: "" => ""
      policy_etag: "" => ""
      project_id: "" => "blog-demo-491834"
      skip_delete: "" => ""
    google_project.my_project: Still creating... (10s elapsed)
    google_project.my_project: Creation complete (ID: blog-demo-491835)

    Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

We can verify the project is created with the proper APIs:


And the instance exists inside this project.

This project + instance can be stamped out multiple times. Terraform can also create and export IAM credentials and service accounts for these projects.
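As a rough sketch of the service-account piece, the configuration below assumes the Google provider's `google_service_account` and `google_service_account_key` resources and reuses the `google_project.blog` resource from the example above. The account ID `blog-deployer`, the display name and the output name are hypothetical, chosen only for illustration.

```hcl
# Hypothetical service account created inside the stamped-out project.
resource "google_service_account" "deployer" {
  project      = "${google_project.blog.project_id}"
  account_id   = "blog-deployer" # illustrative name, not from the article
  display_name = "Blog demo deployer"
}

# A key for that account. The private key is stored in Terraform state,
# so the state file should be treated as a secret.
resource "google_service_account_key" "deployer" {
  service_account_id = "${google_service_account.deployer.name}"
}

# Export the base64-encoded key so other tooling can consume it.
output "deployer_private_key" {
  value     = "${google_service_account_key.deployer.private_key}"
  sensitive = true
}
```

Marking the output as sensitive keeps the key out of the normal apply output, but it does not remove it from state.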

By combining GCP’s new resource management and billing APIs and Terraform, you have more control over your organization's resources. With the isolation guaranteed by projects and the reproducibility provided by Terraform, it's possible to quickly stamp out entire environments. Terraform parallelizes as many operations as possible, so it's often possible to spin up a new environment in just a few minutes. And in larger organizations with rollup billing, IT teams can use Terraform to stamp out pre-configured environments tied to a single billing organization.

Use Cases

There are many challenges that can benefit from an infrastructure as code approach to managing resources. Here are a few that come to mind:

Ephemeral environments

Once you've codified an infrastructure in Terraform, it's easy to stamp out additional environments for development, QA, staging or testing. Many organizations pay thousands of dollars every month for a dedicated staging environment. Because Terraform parallelizes operations, you can create a copy of production infrastructure in just one trip to the water cooler. Terraform enables developers to deploy their changes into identical copies of production, letting them catch bugs early.
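One way to sketch this is with Terraform modules, assuming the project and instance configuration has been moved into a local module. The `./environment` path and the `name` input are hypothetical and do not appear in the original configuration.

```hcl
# Hypothetical layout: ./environment holds the project and instance
# resources and declares an input variable called "name".
module "staging" {
  source = "./environment"
  name   = "staging"
}

# A second copy of the same infrastructure, differing only in its name.
module "qa" {
  source = "./environment"
  name   = "qa"
}
```

Under these assumptions, deleting a module block and running terraform apply again should tear that environment down, which is what keeps it ephemeral.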

Rapid project stamping

The new Terraform google_project APIs enable quick project stamping. Organizations can easily create identical projects for training, field demos, new hires, coding interviews or disaster recovery. In larger organizations with rollup billing, IT teams can use Terraform to stamp out pre-configured environments tied to a single billing organization.
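A minimal sketch of project stamping, assuming Terraform's `count` meta-parameter is applied to the `google_project` resource shown earlier. The variables `billing_id` and `org_id` come from the article's example. `project_count` and `project_prefix` are hypothetical names introduced here.

```hcl
# Declared here because the article's example references them.
variable "billing_id" {}
variable "org_id" {}

# Hypothetical knobs for this sketch.
variable "project_count" {
  default = 3
}

variable "project_prefix" {
  default = "training-demo"
}

resource "google_project" "stamped" {
  count = "${var.project_count}"

  # count.index is 0, 1, 2, ... so each project gets a distinct name and ID.
  name            = "${var.project_prefix}-${count.index}"
  project_id      = "${var.project_prefix}-${count.index}"
  billing_account = "${var.billing_id}"
  org_id          = "${var.org_id}"
}
```

Project IDs are globally unique on GCP, so a real prefix would likely need an organization-specific or random suffix.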

On-demand continuous integration

You can use Terraform to create continuous integration or build environments on demand that are always in a clean state. These environments only run when needed, reducing costs and improving parity by using the same configurations each time.
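One possible shape for an on-demand build machine is sketched below. It reuses the instance arguments from the article's sample configuration and adds a hypothetical `ci_instance_count` variable. The `ci-builder` name is also an assumption, and newer provider versions may expect different disk arguments than the `disk` block used here.

```hcl
# Hypothetical switch: 0 means no build machine exists, 1 creates one.
variable "ci_instance_count" {
  default = 0
}

resource "google_compute_instance" "ci" {
  count = "${var.ci_instance_count}"

  name         = "ci-builder-${count.index}"
  machine_type = "n1-standard-1"
  zone         = "us-central1-a"

  # Same boot image as the article's sample instance.
  disk {
    image = "debian-cloud/debian-8"
  }

  network_interface {
    network = "default"
  }
}
```

With this in place, running terraform apply -var ci_instance_count=1 would bring a clean builder up, and applying again with the default of 0 would remove it.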

Whatever your use case, the combination of Terraform and GCP’s new resource management APIs represents a powerful new way to manage cloud-based environments. For more information, please visit the Terraform website or review the code on GitHub.