Posted by Matthew McCullough – VP of Product Management, Android Developer

Today we're releasing the second beta of Android 16, continuing our work to build a platform that enables creative expression. You can enroll any supported Pixel device to get this and future Android Beta updates over-the-air.

This build adds support for professional camera experiences and graphical effects, extends our performance framework, and continues the evolution of features related to privacy, security, and background tasks. We’re looking forward to hearing what you think, and thank you in advance for your continued help in making Android a platform that works for everyone.

Media and camera updates

Android 16 enhances support for professional camera users, allowing for hybrid auto exposure along with precise color temperature and tint adjustments. It's easier than ever to capture motion photos with new Intent actions, and we're continuing to improve UltraHDR images, with support for HEIC encoding and new parameters from the ISO 21496-1 draft standard.

Hybrid auto-exposure

Android 16 adds new hybrid auto-exposure modes to Camera2, allowing you to manually control specific aspects of exposure while letting the auto-exposure (AE) algorithm handle the rest. You can control ISO + AE, and exposure time + AE, providing greater flexibility compared to the current approach where you either have full manual control or rely entirely on auto-exposure.

```kotlin
fun setISOPriority() {
    // ...
    val availablePriorityModes = mStaticInfo.characteristics.get(
        CameraCharacteristics.CONTROL_AE_AVAILABLE_PRIORITY_MODES
    )
    // ...

    // Turn on AE mode to set priority mode
    reqBuilder[CaptureRequest.CONTROL_AE_MODE] = CameraMetadata.CONTROL_AE_MODE_ON
    reqBuilder[CaptureRequest.CONTROL_AE_PRIORITY_MODE] =
        CameraMetadata.CONTROL_AE_PRIORITY_MODE_SENSOR_SENSITIVITY_PRIORITY
    reqBuilder[CaptureRequest.SENSOR_SENSITIVITY] = TEST_SENSITIVITY_VALUE
    val request: CaptureRequest = reqBuilder.build()
    // ...
}
```
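
The exposure time + AE combination follows the same pattern. The sketch below assumes you already hold the `CameraCharacteristics` and a `CaptureRequest.Builder`; the helper name `applyExposureTimePriority` and its boolean return value are illustrative choices, not part of the platform API.

```kotlin
import android.hardware.camera2.CameraCharacteristics
import android.hardware.camera2.CameraMetadata
import android.hardware.camera2.CaptureRequest

// Hypothetical helper: returns true only if the device advertises the
// exposure-time priority mode and the request builder was updated.
fun applyExposureTimePriority(
    characteristics: CameraCharacteristics,
    reqBuilder: CaptureRequest.Builder,
    exposureTimeNs: Long
): Boolean {
    val mode = CameraMetadata.CONTROL_AE_PRIORITY_MODE_SENSOR_EXPOSURE_TIME_PRIORITY
    val available = characteristics.get(
        CameraCharacteristics.CONTROL_AE_AVAILABLE_PRIORITY_MODES
    ) ?: return false
    if (mode !in available) return false

    // AE stays on; only the exposure time is pinned by the app.
    reqBuilder.set(CaptureRequest.CONTROL_AE_MODE, CameraMetadata.CONTROL_AE_MODE_ON)
    reqBuilder.set(CaptureRequest.CONTROL_AE_PRIORITY_MODE, mode)
    reqBuilder.set(CaptureRequest.SENSOR_EXPOSURE_TIME, exposureTimeNs)
    return true
}
```

Checking the advertised modes first lets the caller fall back to full auto-exposure or full manual control on devices that do not support the hybrid mode.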

Precise color temperature and tint adjustments

Android 16 adds camera support for fine color temperature and tint adjustments to better support professional video recording applications. White balance settings are currently controlled through CONTROL_AWB_MODE, which contains options limited to a preset list, such as Incandescent, Cloudy, and Twilight. The COLOR_CORRECTION_MODE_CCT enables the use of COLOR_CORRECTION_COLOR_TEMPERATURE and COLOR_CORRECTION_COLOR_TINT for precise adjustments of white balance based on the correlated color temperature.

```kotlin
fun setCCT() {
    // ... (Your existing code before this point) ...
    val colorTemperatureRange: Range<Int> =
        mStaticInfo.characteristics[CameraCharacteristics.COLOR_CORRECTION_COLOR_TEMPERATURE_RANGE]

    // Set to manual mode to enable CCT mode
    reqBuilder[CaptureRequest.CONTROL_AWB_MODE] = CameraMetadata.CONTROL_AWB_MODE_OFF
    reqBuilder[CaptureRequest.COLOR_CORRECTION_MODE] = CameraMetadata.COLOR_CORRECTION_MODE_CCT
    reqBuilder[CaptureRequest.COLOR_CORRECTION_COLOR_TEMPERATURE] = 5000
    reqBuilder[CaptureRequest.COLOR_CORRECTION_COLOR_TINT] = 30
    val request: CaptureRequest = reqBuilder.build()
    // ... (Your existing code after this point) ...
}
```
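
The snippet above reads the supported range but then sets a fixed value. One possible refinement, sketched here, is to clamp a requested temperature to that range before applying it. The helper name `applyColorTemperature` and the choice to treat a missing range as "CCT unsupported" are assumptions for illustration.

```kotlin
import android.hardware.camera2.CameraCharacteristics
import android.hardware.camera2.CameraMetadata
import android.hardware.camera2.CaptureRequest

// Hypothetical helper: clamps the requested color temperature to the
// device-reported range, then configures the request for CCT mode.
fun applyColorTemperature(
    characteristics: CameraCharacteristics,
    reqBuilder: CaptureRequest.Builder,
    requestedKelvin: Int,
    tint: Int
): Boolean {
    // Assumption: a null range means the device does not support CCT mode.
    val range = characteristics.get(
        CameraCharacteristics.COLOR_CORRECTION_COLOR_TEMPERATURE_RANGE
    ) ?: return false

    reqBuilder.set(CaptureRequest.CONTROL_AWB_MODE, CameraMetadata.CONTROL_AWB_MODE_OFF)
    reqBuilder.set(CaptureRequest.COLOR_CORRECTION_MODE, CameraMetadata.COLOR_CORRECTION_MODE_CCT)
    reqBuilder.set(
        CaptureRequest.COLOR_CORRECTION_COLOR_TEMPERATURE,
        range.clamp(requestedKelvin)
    )
    reqBuilder.set(CaptureRequest.COLOR_CORRECTION_COLOR_TINT, tint)
    return true
}
```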

Motion photo capture intent actions

Android 16 adds two standard Intent actions, ACTION_MOTION_PHOTO_CAPTURE and ACTION_MOTION_PHOTO_CAPTURE_SECURE, which request that the camera application capture a motion photo and return it.

You must either pass an extra EXTRA_OUTPUT to control where the image will be written, or pass a Uri through Intent.setClipData. If you don't set a ClipData, the EXTRA_OUTPUT Uri will be copied there for you when calling Context.startActivity.
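
As a rough sketch of how an app might fire this intent: the function name, the request code, and the choice to return false when no camera app handles the action are all hypothetical. The output `Uri` is assumed to be one your app can share, for example from a `FileProvider` or `MediaStore`.

```kotlin
import android.app.Activity
import android.content.ActivityNotFoundException
import android.content.Intent
import android.net.Uri
import android.provider.MediaStore

// Hypothetical request code used to match the result in onActivityResult.
const val REQUEST_MOTION_PHOTO = 1001

// Asks a camera app to capture a motion photo and write it to outputUri.
fun launchMotionPhotoCapture(activity: Activity, outputUri: Uri): Boolean {
    val intent = Intent(MediaStore.ACTION_MOTION_PHOTO_CAPTURE).apply {
        putExtra(MediaStore.EXTRA_OUTPUT, outputUri)
        // Applies to the ClipData that the platform fills in from EXTRA_OUTPUT.
        addFlags(Intent.FLAG_GRANT_WRITE_URI_PERMISSION)
    }
    return try {
        activity.startActivityForResult(intent, REQUEST_MOTION_PHOTO)
        true
    } catch (e: ActivityNotFoundException) {
        // No installed camera app handles the motion photo action.
        false
    }
}
```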

UltraHDR image enhancements

Android 16 continues our work to deliver dazzling image quality with UltraHDR images. It adds support for UltraHDR images in the HEIC file format. These images will get ImageFormat type HEIC_ULTRAHDR and will contain an embedded gainmap similar to the existing UltraHDR JPEG format. We're working on AVIF support for UltraHDR as well, so stay tuned.

In addition, Android 16 implements additional parameters in UltraHDR from the ISO 21496-1 draft standard, including the ability to get and set the colorspace that gainmap math should be applied in, as well as support for HDR encoded base images with SDR gainmaps.
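
Before requesting the new format from a camera, an app would typically check that the device offers it. The sketch below assumes that HEIC_ULTRAHDR is advertised through the regular stream configuration map like other output formats; the helper name is illustrative.

```kotlin
import android.graphics.ImageFormat
import android.hardware.camera2.CameraCharacteristics

// Hypothetical helper: true if the camera lists HEIC_ULTRAHDR among its
// output formats (assumes the format appears in the stream configuration map).
fun supportsHeicUltraHdr(characteristics: CameraCharacteristics): Boolean {
    val configs = characteristics.get(
        CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP
    ) ?: return false
    return ImageFormat.HEIC_ULTRAHDR in configs.outputFormats
}
```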

Custom graphical effects with AGSL

Android 16 adds RuntimeColorFilter and RuntimeXfermode, allowing you to author complex effects like Threshold, Sepia, and Hue Saturation and apply them to draw calls. Since Android 13, you've been able to use AGSL to create custom RuntimeShaders that extend Shader. The new API mirrors this, adding an AGSL-powered RuntimeColorFilter that extends ColorFilter, and an Xfermode effect that allows you to implement AGSL-based custom compositing and blending between source and destination pixels.

```kotlin
private val thresholdEffectString = """
    uniform half threshold;

    half4 main(half4 c) {
        half luminosity = dot(c.rgb, half3(0.2126, 0.7152, 0.0722));
        half bw = step(threshold, luminosity);
        return bw.xxx1 * c.a;
    }"""

fun setCustomColorFilter(paint: Paint) {
    val filter = RuntimeColorFilter(thresholdEffectString)
    // The uniform must be addressed by the name declared in the AGSL source.
    filter.setFloatUniform("threshold", 0.5f)
    paint.colorFilter = filter
}
```
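
RuntimeXfermode can be used in a similar way. The following is a minimal sketch, assuming the AGSL entry point for a blend effect takes the source and destination colors as two `half4` parameters; the string name and helper function are illustrative. It multiplies the two premultiplied colors, similar to a modulate blend.

```kotlin
import android.graphics.Paint
import android.graphics.RuntimeXfermode

// Assumed entry point shape: main(src, dst) returning the blended color.
private val modulateBlendString = """
    half4 main(half4 src, half4 dst) {
        return src * dst;
    }"""

fun setCustomXfermode(paint: Paint) {
    // Draw calls made with this paint blend through the AGSL program above.
    paint.xfermode = RuntimeXfermode(modulateBlendString)
}
```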

Behavior changes

With every Android release, we seek to make the platform more efficient, privacy conscious, internationalization friendly, and robust, balancing the needs of apps against hardware support, system performance, user privacy, and battery life. This can result in behavior changes that impact compatibility.

Edge to edge opt-out going away

Android 15 enforced edge-to-edge for apps targeting Android 15 (SDK 35), but your app could opt out by setting R.attr#windowOptOutEdgeToEdgeEnforcement to true. Once your app targets Android 16 (Baklava), R.attr#windowOptOutEdgeToEdgeEnforcement is deprecated and disabled, and your app cannot opt out of going edge-to-edge. To be compatible with Android 16 Beta 2, ensure your app supports edge-to-edge and remove any use of R.attr#windowOptOutEdgeToEdgeEnforcement. To support edge-to-edge, see the Compose and Views guidance. Please let us know about concerns in our tracker on the feedback page.
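
For View-based UIs, one common approach is to pad the root content by the system bar insets. The sketch below uses the AndroidX core-ktx library, which is an assumption about your dependencies; the helper name is illustrative, and real layouts often apply insets to individual views rather than the whole root.

```kotlin
import android.view.View
import androidx.core.view.ViewCompat
import androidx.core.view.WindowInsetsCompat
import androidx.core.view.updatePadding

// Hypothetical helper: keeps content clear of the status bar, navigation
// bar, and display cutout while the window itself draws edge-to-edge.
fun applySystemBarInsets(root: View) {
    ViewCompat.setOnApplyWindowInsetsListener(root) { view, windowInsets ->
        val bars = windowInsets.getInsets(
            WindowInsetsCompat.Type.systemBars() or WindowInsetsCompat.Type.displayCutout()
        )
        view.updatePadding(
            left = bars.left,
            top = bars.top,
            right = bars.right,
            bottom = bars.bottom
        )
        // Return the insets so child views can still consume them.
        windowInsets
    }
}
```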

Health and fitness permissions

For apps targeting Android 16 or higher, BODY_SENSORS permissions are transitioning to the granular permissions under android.permissions.health also used by Health Connect. Any API previously requiring BODY_SENSORS or BODY_SENSORS_BACKGROUND will now require the corresponding android.permissions.health permission. This affects the following data types, APIs, and foreground service types:

If your app uses these APIs, it should now request the respective granular permissions:

These permissions are the same as those that guard access to reading data from Health Connect, the Android datastore for health, fitness, and wellness data.

Abandoned empty jobs stop reason

An abandoned job occurs when the JobParameters object associated with the job has been garbage collected, but jobFinished has not been called to signal job completion. This indicates that the job may be running and being rescheduled without the application's awareness.

Applications in Android 16 that rely on JobScheduler without maintaining a strong reference to the JobParameters object will now be granted the new job stop reason STOP_REASON_TIMEOUT_ABANDONED on timeout, instead of STOP_REASON_TIMEOUT.

If there are frequent occurrences of the new abandoned stop reason, the system will take mitigation steps to reduce job frequency. Please use the new stop reason to detect and reduce abandoned jobs.

Note: If you're using WorkManager, you're not expected to be impacted by this change — one nice side effect of using Android Jetpack to schedule your work.
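
If you use JobScheduler directly, a sketch along these lines may help avoid and detect abandoned jobs. The class name, the `doWork` placeholder, and the assumption that the new stop reason is observable through `JobParameters.getStopReason()` in `onStopJob` are illustrative and not confirmed by this post.

```kotlin
import android.app.job.JobParameters
import android.app.job.JobService
import android.util.Log
import java.util.concurrent.ConcurrentHashMap
import java.util.concurrent.Executors

class SyncJobService : JobService() {
    private val executor = Executors.newSingleThreadExecutor()

    // Strong references keep each JobParameters alive until jobFinished runs.
    private val running = ConcurrentHashMap<Int, JobParameters>()

    override fun onStartJob(params: JobParameters): Boolean {
        running[params.jobId] = params
        executor.execute {
            try {
                doWork()
            } finally {
                running.remove(params.jobId)
                // Always signal completion so the job is not treated as abandoned.
                jobFinished(params, false)
            }
        }
        return true // work continues on the background thread
    }

    override fun onStopJob(params: JobParameters): Boolean {
        if (params.stopReason == JobParameters.STOP_REASON_TIMEOUT_ABANDONED) {
            Log.w("SyncJobService", "Job ${params.jobId} timed out as abandoned")
        }
        running.remove(params.jobId)
        return false // do not reschedule
    }

    // Hypothetical placeholder for the real work.
    private fun doWork() {}
}
```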

Intent redirect changes

Android 16 introduces default security hardening against Intent redirection attacks, regardless of your app's targetSdkVersion. The removeLaunchSecurityProtection API allows you to opt out of this protection if your testing reveals issues.

Note: Opting out of security protections should be done with caution and only when absolutely necessary, as it can increase the risk of security vulnerabilities.

```kotlin
val iSublevel = intent.getParcelableExtra("sub_intent", Intent::class.java)
iSublevel?.let {
    it.removeLaunchSecurityProtection()
    startActivity(it)
}
```

Elegant font APIs deprecated and disabled

Apps targeting Android 15 (API level 35) have the elegantTextHeight TextView attribute set to true by default, replacing the compact font with one that is much more readable. You could override this by setting the elegantTextHeight attribute to false.

Android 16 deprecates the elegantTextHeight attribute, and the attribute will be ignored once your app targets Android 16. The “UI fonts” controlled by these APIs are being discontinued, so you should adapt any layouts to ensure consistent and future-proof text rendering in Arabic, Lao, Myanmar, Tamil, Gujarati, Kannada, Malayalam, Odia, Telugu, or Thai.

[Image: Default elegantTextHeight behavior for apps targeting Android 14 (API level 34) and lower]

[Image: Default elegantTextHeight behavior for apps targeting Android 15 (API level 35) and higher]

16 KB page size compatibility mode

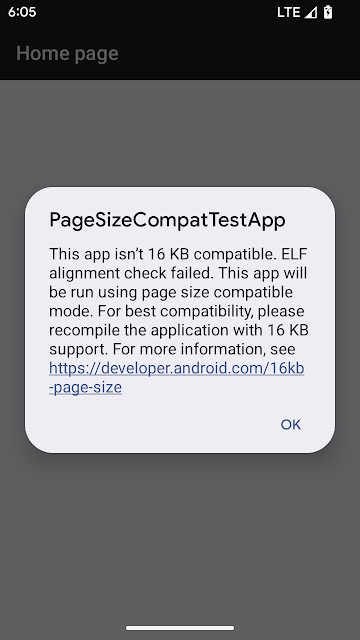

Android 15 introduced support for 16KB memory pages to optimize performance of the platform. Android 16 adds a compatibility mode, allowing some apps built for 4KB memory pages to run on a device configured for 16KB memory pages.

If Android detects that your app has 4KB aligned memory pages, it will automatically use compatibility mode and display a notification dialog to the user. Setting the android:pageSizeCompat property in the AndroidManifest.xml to enable the backwards compatibility mode will prevent the display of the dialog when your app launches. For best performance, reliability, and stability, your app should still be 16KB aligned. Read our recent blog post about updating your apps to support 16KB memory pages for more details.

Measurement system customization

Users can now customize their measurement system in regional preferences within Settings. The user preference is included as part of the locale code, so you can register a BroadcastReceiver on ACTION_LOCALE_CHANGED to handle locale configuration changes when regional preferences change.

Using formatters can help match the local experience. For example, "0.5 in" in English (United States) is "12,7 mm" for a user who has set their phone to English (Denmark), or who uses their phone in English (United States) with the metric system as the measurement system preference.

To find these settings in Android 16 Beta 2, open the Settings app and navigate to System > Languages & region.
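
The receiver registration mentioned above could look roughly like this. The helper name and callback are hypothetical, and the sketch assumes the AndroidX core library is available for `ContextCompat.registerReceiver`.

```kotlin
import android.content.BroadcastReceiver
import android.content.Context
import android.content.Intent
import android.content.IntentFilter
import androidx.core.content.ContextCompat

// Hypothetical helper: invokes onLocaleChanged whenever the system reports
// a locale change, which includes regional preference updates.
fun registerLocaleChangeReceiver(
    context: Context,
    onLocaleChanged: () -> Unit
): BroadcastReceiver {
    val receiver = object : BroadcastReceiver() {
        override fun onReceive(context: Context, intent: Intent) {
            if (intent.action == Intent.ACTION_LOCALE_CHANGED) onLocaleChanged()
        }
    }
    ContextCompat.registerReceiver(
        context,
        receiver,
        IntentFilter(Intent.ACTION_LOCALE_CHANGED),
        ContextCompat.RECEIVER_NOT_EXPORTED
    )
    // Keep the returned receiver so it can be passed to unregisterReceiver later.
    return receiver
}
```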

Content handling for live wallpapers

In Android 16, the live wallpaper framework is gaining a new content API to address the challenges of dynamic, user-driven wallpapers. Currently, live wallpapers incorporating user-provided content require complex, service-specific implementations. Android 16 introduces WallpaperDescription and WallpaperInstance. WallpaperDescription allows you to identify distinct instances of a live wallpaper from the same service. For example, a wallpaper that has instances on both the home screen and on the lock screen may have unique content in both places. The wallpaper picker and WallpaperManager use this metadata to better present wallpapers to users, streamlining the process for you to create diverse and personalized live wallpaper experiences.

Headroom APIs in ADPF

The SystemHealthManager introduces the getCpuHeadroom and getGpuHeadroom APIs, designed to provide games and resource-intensive apps with estimates of available CPU and GPU resources. These methods offer a way for you to gauge how your app or game can best improve system health, particularly when used in conjunction with other Android Dynamic Performance Framework (ADPF) APIs that detect thermal throttling. By using CpuHeadroomParams and GpuHeadroomParams on supported devices, you will be able to customize the time window used to compute the headroom and select between average or minimum resource availability. This can help you reduce your CPU or GPU resource usage accordingly, leading to better user experiences and improved battery life.

Key sharing API

Android 16 adds APIs that support sharing access to Android Keystore keys with other apps. The new KeyStoreManager class supports granting and revoking access to keys by app uid, and includes an API for apps to access shared keys.

Standardized picture and audio quality framework for TVs

The new MediaQuality package in Android 16 exposes a set of standardized APIs for access to audio and picture profiles and hardware-related settings. This allows streaming apps to query profiles and apply them to media dynamically:

- Movies mastered with a wider dynamic range require greater color accuracy to see subtle details in shadows and adjust to ambient light, so a profile that prefers color accuracy over brightness may be appropriate.

- Live sporting events are often mastered with a narrow dynamic range, but are often watched in daylight, so a profile that gives preference to brightness over color accuracy can give better results.

- Fully interactive content wants minimal processing to reduce latency, and wants higher frame rates, which is why many TVs ship with a game profile.

The API allows apps to switch between profiles and users to enjoy the benefits of tuning supported TVs to best suit their content.

Accessibility

Android 16 adds additional APIs to enhance UI semantics that help improve consistency for users that rely on accessibility services, such as TalkBack.

Duration added to TtsSpan

Android 16 extends TtsSpan with a TYPE_DURATION, consisting of ARG_HOURS, ARG_MINUTES, and ARG_SECONDS. This allows you to directly annotate time duration, ensuring accurate and consistent text-to-speech output with services like TalkBack.
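
A minimal sketch of annotating a duration string, assuming the argument values are passed as integers in a `PersistableBundle`; the helper name is illustrative.

```kotlin
import android.os.PersistableBundle
import android.text.SpannableString
import android.text.Spanned
import android.text.style.TtsSpan

// Hypothetical helper: wraps the whole string in a duration TtsSpan so a
// screen reader can speak it as a length of time instead of a clock time.
fun annotateDuration(text: String, hours: Int, minutes: Int, seconds: Int): SpannableString {
    val args = PersistableBundle().apply {
        putInt(TtsSpan.ARG_HOURS, hours)
        putInt(TtsSpan.ARG_MINUTES, minutes)
        putInt(TtsSpan.ARG_SECONDS, seconds)
    }
    return SpannableString(text).apply {
        setSpan(
            TtsSpan(TtsSpan.TYPE_DURATION, args),
            0,
            length,
            Spanned.SPAN_EXCLUSIVE_EXCLUSIVE
        )
    }
}
```

For example, `annotateDuration("1:30:15", 1, 30, 15)` could be set as the text of a TextView that shows elapsed time.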

Support elements with multiple labels

Android currently allows a UI element to derive its accessibility label from another element, and now offers the ability for multiple labels to be associated, a common scenario in web content. By introducing a list-based API within AccessibilityNodeInfo, Android can directly support these multi-label relationships. As part of this change, we've deprecated AccessibilityNodeInfo setLabeledBy and getLabeledBy in favor of addLabeledBy, removeLabeledBy, and getLabeledByList.
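
As a sketch of the list-based API in a custom view setup, assuming `addLabeledBy` accepts a `View` the way the deprecated `setLabeledBy` did; the helper name is hypothetical.

```kotlin
import android.view.View
import android.view.accessibility.AccessibilityNodeInfo

// Hypothetical helper: associates several label views with one target view.
fun labelWith(target: View, labels: List<View>) {
    target.setAccessibilityDelegate(object : View.AccessibilityDelegate() {
        override fun onInitializeAccessibilityNodeInfo(
            host: View,
            info: AccessibilityNodeInfo
        ) {
            super.onInitializeAccessibilityNodeInfo(host, info)
            // Assumed signature: addLabeledBy(View).
            labels.forEach { info.addLabeledBy(it) }
        }
    })
}
```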

Improved support for expandable elements

Android 16 adds accessibility APIs that allow you to convey the expanded or collapsed state of interactive elements, such as menus and expandable lists. By setting the expanded state using setExpandedState and dispatching TYPE_WINDOW_CONTENT_CHANGED AccessibilityEvents with a CONTENT_CHANGE_TYPE_EXPANDED content change type, you can ensure that screen readers like TalkBack announce state changes, providing a more intuitive and inclusive user experience.
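
One way this might be wired up for a custom expandable view is sketched below. The delegate class is hypothetical, and the constant names `EXPANDED_STATE_FULL` and `EXPANDED_STATE_COLLAPSED` are assumptions about the values accepted by `setExpandedState`.

```kotlin
import android.view.View
import android.view.accessibility.AccessibilityEvent
import android.view.accessibility.AccessibilityNodeInfo

// Hypothetical delegate: reports expanded/collapsed state and announces changes.
class ExpandableStateDelegate : View.AccessibilityDelegate() {
    private var expanded = false

    override fun onInitializeAccessibilityNodeInfo(host: View, info: AccessibilityNodeInfo) {
        super.onInitializeAccessibilityNodeInfo(host, info)
        // Assumed constant names for the expanded-state values.
        info.setExpandedState(
            if (expanded) AccessibilityNodeInfo.EXPANDED_STATE_FULL
            else AccessibilityNodeInfo.EXPANDED_STATE_COLLAPSED
        )
    }

    // Call this after the view has been expanded or collapsed.
    fun onToggled(host: View, nowExpanded: Boolean) {
        expanded = nowExpanded
        val event = AccessibilityEvent(AccessibilityEvent.TYPE_WINDOW_CONTENT_CHANGED).apply {
            contentChangeTypes = AccessibilityEvent.CONTENT_CHANGE_TYPE_EXPANDED
        }
        host.sendAccessibilityEventUnchecked(event)
    }
}
```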

Indeterminate ProgressBars

Android 16 adds RANGE_TYPE_INDETERMINATE, giving a way for you to expose RangeInfo for both determinate and indeterminate ProgressBar widgets, allowing services like TalkBack to more consistently provide feedback for progress indicators.
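
For a custom loading indicator, exposing the new range type might look like the sketch below. The delegate class is hypothetical, and passing zeros for the minimum, maximum, and current values is a placeholder assumption since they carry no meaning for an indeterminate range.

```kotlin
import android.view.View
import android.view.accessibility.AccessibilityNodeInfo
import android.view.accessibility.AccessibilityNodeInfo.RangeInfo

// Hypothetical delegate for a custom indeterminate progress view.
class LoadingIndicatorDelegate : View.AccessibilityDelegate() {
    override fun onInitializeAccessibilityNodeInfo(host: View, info: AccessibilityNodeInfo) {
        super.onInitializeAccessibilityNodeInfo(host, info)
        // Placeholder values: only the range type is meaningful here.
        info.setRangeInfo(RangeInfo(RangeInfo.RANGE_TYPE_INDETERMINATE, 0f, 0f, 0f))
    }
}
```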

Tri-state CheckBox

The new AccessibilityNodeInfo getChecked and setChecked(int) methods in Android 16 now support a "partially checked" state in addition to "checked" and "unchecked." This replaces the deprecated boolean isChecked and setChecked(boolean).
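
A custom "select all" style control could report the partial state roughly as follows. The delegate and its `State` enum are hypothetical, and the `CHECKED_STATE_*` constant names are assumptions about the values accepted by `setChecked(int)`.

```kotlin
import android.view.View
import android.view.accessibility.AccessibilityNodeInfo

// Hypothetical delegate for a custom tri-state checkbox.
class TriStateCheckDelegate : View.AccessibilityDelegate() {
    enum class State { UNCHECKED, PARTIAL, CHECKED }

    var state = State.UNCHECKED

    override fun onInitializeAccessibilityNodeInfo(host: View, info: AccessibilityNodeInfo) {
        super.onInitializeAccessibilityNodeInfo(host, info)
        info.isCheckable = true
        // Assumed constant names for the three checked states.
        val checkedState = when (state) {
            State.CHECKED -> AccessibilityNodeInfo.CHECKED_STATE_TRUE
            State.PARTIAL -> AccessibilityNodeInfo.CHECKED_STATE_PARTIAL
            State.UNCHECKED -> AccessibilityNodeInfo.CHECKED_STATE_FALSE
        }
        info.setChecked(checkedState)
    }
}
```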

Two Android API releases in 2025

This preview is for the next major release of Android, which has a planned launch in Q2 of 2025. We plan to have another release with new developer APIs in Q4. The Q2 major release will be the only release in 2025 to include behavior changes that could affect apps. The Q4 minor release will pick up feature updates, optimizations, and bug fixes; like our non-SDK quarterly releases, it will not include any intentional app-impacting behavior changes.

We'll continue to have quarterly Android releases. The Q1 and Q3 updates provide incremental updates to ensure continuous quality. We’re putting additional energy into working with our device partners to bring the Q2 release to as many devices as possible.

There’s no change to the target API level requirements and the associated dates for apps in Google Play; our plans are for one annual requirement each year, tied to the major API level.

How to get ready

In addition to performing compatibility testing on this next major release, make sure that you're compiling your apps against the new SDK, and use the compatibility framework to enable targetSdkVersion-gated behavior changes as they become available for early testing.

App compatibility

The Android 16 Preview program runs from November 2024 until the final public release in Q2 of 2025. At key development milestones, we'll deliver updates for your development and testing environments. Each update includes SDK tools, system images, emulators, API reference, and API diffs. We'll highlight critical APIs as they are ready to test in the preview program in blogs and on the Android 16 developer website.

We’re targeting March of 2025 for our Platform Stability milestone. At this milestone, we’ll deliver final SDK/NDK APIs and also final internal APIs and app-facing system behaviors. From that time you’ll have several months before the final release to complete your testing. Learn more by checking the release timeline details.

Get started with Android 16

You can enroll any supported Pixel device to get this and future Android Beta updates over-the-air. If you don’t have a Pixel device, you can use the 64-bit system images with the Android Emulator in Android Studio. If you are currently on Android 16 Beta 1 or are already in the Android Beta program, you will be offered an over-the-air update to Beta 2.

We're looking for your feedback so please report issues and submit feature requests on the feedback page. The earlier we get your feedback, the more we can include in our work on the final release.

For the best development experience with Android 16, we recommend that you use the latest preview of Android Studio (Meerkat). Once you’re set up, here are some of the things you should do:

- Compile against the new SDK, test in CI environments, and report any issues in our tracker on the feedback page.

- Test your current app for compatibility, learn whether your app is affected by changes in Android 16, and install your app onto a device or emulator running Android 16 and extensively test it.

We’ll update the beta system images and SDK regularly throughout the Android 16 release cycle. Once you’ve installed a beta build, you’ll automatically get future updates over-the-air for all later previews and Betas.

For complete information, visit the Android 16 developer site.
