The Linux kernel is responsible for enforcing much of Android’s security model, which is why we have put a lot of effort into hardening the Android Linux kernel against exploitation. In Android 9, we introduced support for Clang’s forward-edge Control-Flow Integrity (CFI) enforcement to protect the kernel from code reuse attacks that modify stored function pointers. This year, we have added backward-edge protection for return addresses using Clang’s Shadow Call Stack (SCS).

Google’s Pixel 3 and 3a phones have kernel SCS enabled in the Android 10 update, and Pixel 4 ships with this protection out of the box. We have made patches available to all supported versions of the Android kernel and also maintain a patch set against upstream Linux. This post explains how kernel SCS works, the benefits and trade-offs, how to enable the feature, and how to debug potential issues.

Return-oriented programming

As kernel memory protections increasingly make code injection more difficult, attackers commonly use control flow hijacking to exploit kernel bugs. Return-oriented programming (ROP) is a technique where the attacker gains control of the kernel stack to overwrite function return addresses and redirect execution to carefully selected parts of existing kernel code, known as ROP gadgets. While address space randomization and stack canaries can make this attack more challenging, return addresses stored on the stack remain vulnerable to many overwrite flaws. The general availability of tools for automatically generating this type of kernel exploit makes protecting against it increasingly important.Shadow Call Stack

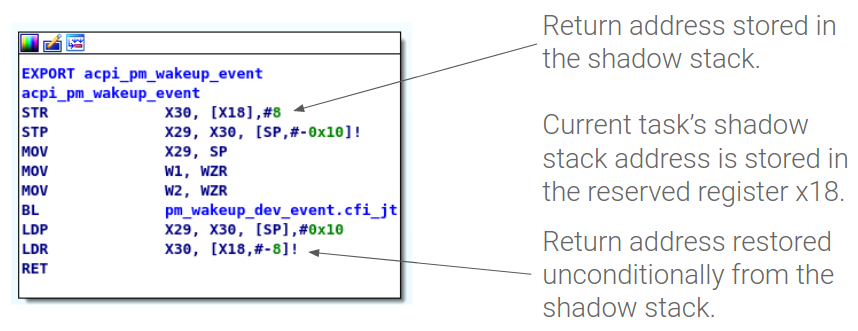

One method of protecting return addresses is to store them in a separately allocated shadow stack that’s not vulnerable to traditional buffer overflows. This can also help protect against arbitrary overwrite attacks.Clang added the Shadow Call Stack instrumentation pass for arm64 in version 7. When enabled, each non-leaf function that pushes the return address to the stack will be instrumented with code that also saves the address to a shadow stack. A pointer to the current task’s shadow stack is always kept in the x18 register, which is reserved for this purpose. Here’s what instrumentation looks like in a typical kernel function:

SCS doesn’t require error handling as it uses the return address from the shadow stack unconditionally. Compatibility with stack unwinding for debugging purposes is maintained by keeping a copy of the return address in the normal stack, but this value is never used for control flow decisions.

Despite requiring a dedicated register, SCS has minimal performance overhead. The instrumentation itself consists of one load and one store instruction per function, which results in a performance impact that’s within noise in our benchmarking. Allocating a shadow stack for each thread does increase the kernel’s memory usage but as only return addresses are stored, the stack size defaults to 1kB. Therefore, the overhead is a fraction of the memory used for the already small regular kernel stacks.

SCS patches are available for Android kernels 4.14 and 4.19, and for upstream Linux. It can be enabled using the following configuration options:

CONFIG_SHADOW_CALL_STACK=y

# CONFIG_SHADOW_CALL_STACK_VMAP is not set

# CONFIG_DEBUG_STACK_USAGE is not set

By default, shadow stacks are not virtually allocated to minimize memory overhead, but CONFIG_SHADOW_CALL_STACK_VMAP can be enabled for better stack exhaustion protection. With CONFIG_DEBUG_STACK_USAGE, the kernel will also print out shadow stack usage in addition to normal stack usage which can be helpful when debugging issues.