Posted by Łukasz Kaiser, Senior Research Scientist, Google Brain Team

Deep Learning (DL) has enabled the rapid advancement of many useful technologies, such as machine translation, speech recognition and object detection. In the research community, one can find code open-sourced by the authors to help in replicating their results and further advancing deep learning. However, most of these DL systems use unique setups that require significant engineering effort and may only work for a specific problem or architecture, making it hard to run new experiments and compare the results.

Today, we are happy to release Tensor2Tensor (T2T), an open-source system for training deep learning models in TensorFlow. T2T facilitates the creation of state-of-the-art models for a wide variety of ML applications, such as translation, parsing, image captioning and more, enabling the exploration of various ideas much faster than previously possible. This release also includes a library of datasets and models, including the best models from a few recent papers (Attention Is All You Need, Depthwise Separable Convolutions for Neural Machine Translation and One Model to Learn Them All) to help kick-start your own DL research.

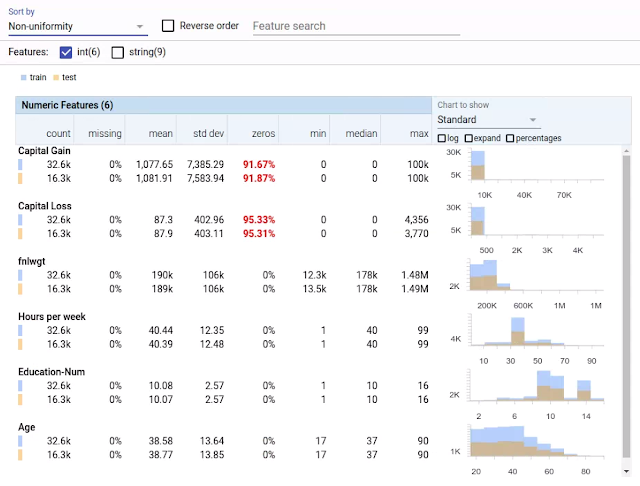

| Translation Model | Training time | BLEU (difference from baseline) |
| --- | --- | --- |
| Transformer (T2T) | 3 days on 8 GPUs | 28.4 (+7.8) |
| SliceNet (T2T) | 6 days on 32 GPUs | 26.1 (+5.5) |
| GNMT+MoE | 1 day on 64 GPUs | 26.0 (+5.4) |
| ConvS2S | 18 days on 1 GPU | 25.1 (+4.5) |
| GNMT | 1 day on 96 GPUs | 24.6 (+4.0) |
| ByteNet | 8 days on 32 GPUs | 23.8 (+3.2) |
| MOSES (phrase-based baseline) | N/A | 20.6 (+0.0) |

BLEU scores (higher is better) on the standard WMT English-German translation task.

As an example of the kind of improvements T2T can offer, we applied the library to machine translation. As you can see in the table above, two different T2T models, SliceNet and Transformer, outperform the previous state-of-the-art, GNMT+MoE. Our best T2T model, Transformer, is 3.8 points better than the standard GNMT model, which itself was 4 points above the baseline phrase-based translation system, MOSES. Notably, with T2T you can approach previous state-of-the-art results with a single GPU in one day: a small Transformer model (not shown above) gets 24.9 BLEU after 1 day of training on a single GPU. Now everyone with a GPU can tinker with great translation models on their own: our GitHub repo has instructions on how to do that.

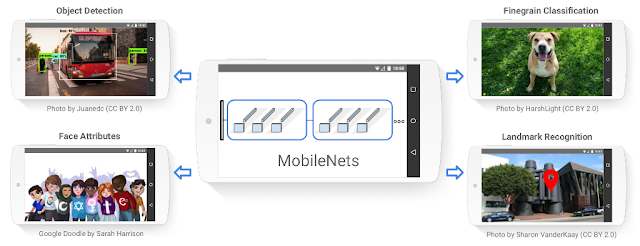

Modular Multi-Task Training

The T2T library is built with familiar TensorFlow tools and defines multiple pieces needed in a deep learning system: data-sets, model architectures, optimizers, learning rate decay schemes, hyperparameters, and so on. Crucially, it enforces a standard interface between all these parts and implements current ML best practices. So you can pick any data-set, model, optimizer and set of hyperparameters, and run the training to check how it performs. We made the architecture modular, so every piece between the input data and the predicted output is a tensor-to-tensor function. If you have a new idea for the model architecture, you don’t need to replace the whole setup. You can keep the embedding part, the loss and everything else, and replace only the model body with your own function that takes a tensor as input and returns a tensor.

This means that T2T is flexible, with training no longer pinned to a specific model or dataset. It is so easy that even architectures like the famous

LSTM sequence-to-sequence model can be defined in a

few dozen lines of code. One can also train a single model on multiple tasks from different domains. Taken to the limit, you can even train a single model on all data-sets concurrently, and we are happy to report that our

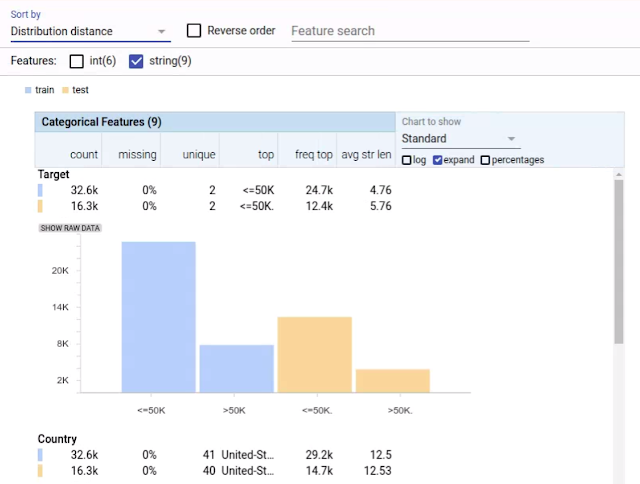

MultiModel, trained like this and included in T2T, yields good results on many tasks even when training jointly on ImageNet (image classification),

MS COCO (image captioning),

WSJ (speech recognition),

WMT (translation) and the

Penn Treebank parsing corpus. It is the first time a single model has been demonstrated to be able to perform all these tasks at once.
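The tensor-to-tensor idea described in this section can be illustrated with a minimal sketch. This is not the T2T API: the names `embed`, `identity_body`, `running_mean_body`, `squared_error` and `run_model` are hypothetical, and plain nested lists stand in for TensorFlow tensors. The point is only that the input stage and the loss stay fixed while the model body is swapped.

```python
from typing import Callable, List

# A [length, depth] "tensor" represented as nested lists (stand-in for a real tensor).
Tensor = List[List[float]]


def embed(token_ids: List[int], depth: int = 4) -> Tensor:
    """Toy input stage (hypothetical): map each token id to a fixed vector."""
    return [[float((tok + 1) * (d + 1)) / 10.0 for d in range(depth)]
            for tok in token_ids]


def identity_body(x: Tensor) -> Tensor:
    """Simplest possible body: return the input unchanged."""
    return x


def running_mean_body(x: Tensor) -> Tensor:
    """Alternative body: each position becomes the mean of all positions so far."""
    out: Tensor = []
    sums = [0.0] * len(x[0])
    for i, row in enumerate(x, start=1):
        sums = [s + v for s, v in zip(sums, row)]
        out.append([s / i for s in sums])
    return out


def squared_error(pred: Tensor, target: Tensor) -> float:
    """Toy loss: mean squared difference over all entries."""
    diffs = [(p - t) ** 2
             for p_row, t_row in zip(pred, target)
             for p, t in zip(p_row, t_row)]
    return sum(diffs) / len(diffs)


def run_model(token_ids: List[int], target: Tensor,
              body: Callable[[Tensor], Tensor]) -> float:
    """Fixed pipeline: embedding -> swappable body -> loss."""
    return squared_error(body(embed(token_ids)), target)


if __name__ == "__main__":
    ids = [0, 1, 2]
    target = embed(ids)
    # Only the body argument changes between the two runs.
    print(run_model(ids, target, identity_body))
    print(run_model(ids, target, running_mean_body))
```

Because both bodies take a tensor and return a tensor of the same shape, `run_model` does not need to know which one it was given.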

Built-in Best Practices

With this initial release, we also provide scripts to generate a number of data-sets widely used in the research community [1], a handful of models [2], a number of hyperparameter configurations, and a well-performing implementation of other important tricks of the trade. While it is hard to list them all, if you decide to run your model with T2T you’ll get for free the correct padding of sequences and the corresponding cross-entropy loss, well-tuned parameters for the Adam optimizer, adaptive batching, synchronous distributed training, well-tuned data augmentation for images, and label smoothing. You also get a number of hyperparameter configurations that worked very well for us, including the ones mentioned above that achieve the state-of-the-art results on translation, and that may help you get good results too.
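Two of the tricks listed above, a cross-entropy loss that respects sequence padding and label smoothing, can be sketched in a few lines. This is an illustrative pure-Python version, not the T2T implementation: the padding id `PAD_ID = 0`, the function names, and the choice to spread the smoothing mass evenly over the non-target classes are all assumptions made for the example.

```python
import math
from typing import List

PAD_ID = 0  # assumed id of the padding token (hypothetical convention)


def log_softmax(logits: List[float]) -> List[float]:
    """Numerically stable log-softmax over one position's logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def smoothed_targets(label: int, vocab_size: int, smoothing: float) -> List[float]:
    """Put 1 - smoothing on the true label, spread the rest over other classes."""
    off_value = smoothing / (vocab_size - 1)
    return [1.0 - smoothing if i == label else off_value
            for i in range(vocab_size)]


def padded_cross_entropy(logits: List[List[float]], labels: List[int],
                         smoothing: float = 0.1) -> float:
    """Mean label-smoothed cross-entropy over non-padding positions only."""
    total, count = 0.0, 0
    for position_logits, label in zip(logits, labels):
        if label == PAD_ID:
            continue  # padding positions contribute nothing to the loss
        log_probs = log_softmax(position_logits)
        target = smoothed_targets(label, len(position_logits), smoothing)
        total -= sum(t * lp for t, lp in zip(target, log_probs))
        count += 1
    return total / max(count, 1)


if __name__ == "__main__":
    logits = [[2.0, 0.5, -1.0], [0.1, 0.2, 0.3], [9.0, 9.0, 9.0]]
    labels = [1, 2, PAD_ID]  # the last position is padding
    print(padded_cross_entropy(logits, labels))
```

Averaging over non-padding positions only means that padding a batch to a longer length does not change the loss value.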

As an example, consider the task of parsing English sentences into their grammatical constituency trees. This problem has been studied for decades and competitive methods were developed with a lot of effort. It can be presented as a sequence-to-sequence problem and be solved with neural networks, but it used to require a lot of tuning. With T2T, it took us only a few days to add the parsing data-set generator and adjust our attention transformer model to train on this problem. To our pleasant surprise, we got very good results in only a week:

Parsing F1 scores on the standard test set, section 23 of the WSJ. We only compare here models trained discriminatively on the Penn Treebank WSJ training set; see the paper for more results.
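To make the sequence-to-sequence framing of parsing concrete, here is a small sketch of one common way to turn a constituency tree into a flat token sequence and back. It is not the T2T parsing data-set generator: the tree representation, the bracket token format, and the names `linearize` and `delinearize` are assumptions chosen for illustration, and the sketch assumes no word in the sentence starts with a bracket character.

```python
from typing import List, Tuple, Union

# A tree is either a word (str) or a (label, children) pair.
Tree = Union[str, Tuple[str, List["Tree"]]]


def linearize(tree: Tree) -> List[str]:
    """Depth-first traversal that emits labelled open/close bracket tokens."""
    if isinstance(tree, str):
        return [tree]  # leaf: the word itself
    label, children = tree
    tokens = ["(" + label]
    for child in children:
        tokens.extend(linearize(child))
    tokens.append(")" + label)
    return tokens


def delinearize(tokens: List[str]) -> Tree:
    """Rebuild the tree from a well-formed bracket token sequence."""
    stack: list = [("ROOT", [])]
    for tok in tokens:
        if tok.startswith("("):
            stack.append((tok[1:], []))       # open a new constituent
        elif tok.startswith(")"):
            label, children = stack.pop()     # close the current constituent
            stack[-1][1].append((label, children))
        else:
            stack[-1][1].append(tok)          # plain word
    return stack[0][1][0]


if __name__ == "__main__":
    tree = ("S", [("NP", ["John"]), ("VP", [("V", ["sleeps"])])])
    tokens = linearize(tree)
    print(" ".join(tokens))
    # (S (NP John )NP (VP (V sleeps )V )VP )S
    assert delinearize(tokens) == tree
```

With a reversible encoding like this, a model that maps a sentence to a token sequence is effectively predicting a tree, which is what lets a general sequence-to-sequence model be applied to parsing.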

Contribute to Tensor2Tensor

In addition to exploring existing models and data-sets, you can easily define your own model and add your own data-sets to Tensor2Tensor. We believe the already included models will perform very well for many NLP tasks, so just adding your data-set might lead to interesting results. By making T2T modular, we also make it very easy to contribute your own model and see how it performs on various tasks. In this way the whole community can benefit from a library of baselines and deep learning research can accelerate. So head to our GitHub repository, try the new models, and contribute your own!

Acknowledgements

The release of Tensor2Tensor was only possible thanks to the widespread collaboration of many engineers and researchers. We want to acknowledge here the core team who contributed (in alphabetical order): Samy Bengio, Eugene Brevdo, Francois Chollet, Aidan N. Gomez, Stephan Gouws, Llion Jones, Łukasz Kaiser, Nal Kalchbrenner, Niki Parmar, Ryan Sepassi, Noam Shazeer, Jakob Uszkoreit, Ashish Vaswani.

[1] We include a number of datasets for image classification (MNIST, CIFAR-10, CIFAR-100, ImageNet), image captioning (MS COCO), translation (WMT with multiple languages including English-German and English-French), language modelling (LM1B), parsing (Penn Treebank), natural language inference (SNLI), speech recognition (TIMIT), and algorithmic problems (over a dozen tasks from reversing through addition and multiplication to algebra). We will be adding more and welcome your data-sets too.

[2] Including LSTM sequence-to-sequence RNNs, convolutional networks also with separable convolutions (e.g., Xception), recently researched models like ByteNet or the Neural GPU, and our new state-of-the-art models mentioned in this post that we will be actively updating in the repository.