Today, we are announcing the launch of v0.1 of Graph for Understanding Artifact Composition (GUAC). Introduced at KubeCon 2022 in October, GUAC targets a critical need in the software industry to understand the software supply chain. In collaboration with Kusari, Purdue University, Citi, and community members, we have incorporated feedback from our early testers to improve GUAC and make it more useful for security professionals. This improved version is now available as an API for you to start developing on top of, and integrating into, your systems.

The need for GUAC

High-profile incidents such as SolarWinds, and the recent 3CX supply chain double-exposure, are evidence that supply chain attacks are getting more sophisticated. As highlighted by the U.S. Executive Order on Cybersecurity, there’s a critical need for security professionals, CISOs, and security engineers to be able to more deeply link information from different supply chain ecosystems to keep up with attackers and prevent exposure. Without linking different sources of information, it’s impossible to have a clear understanding of the potential risks posed by the software components in an organization.

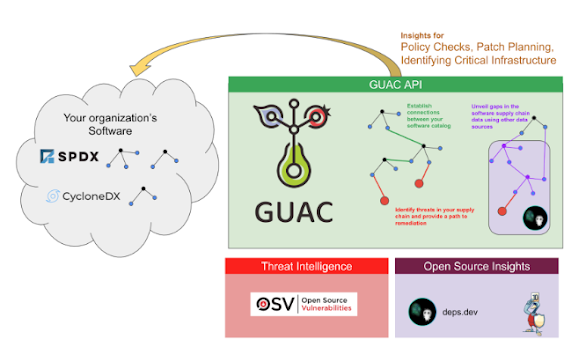

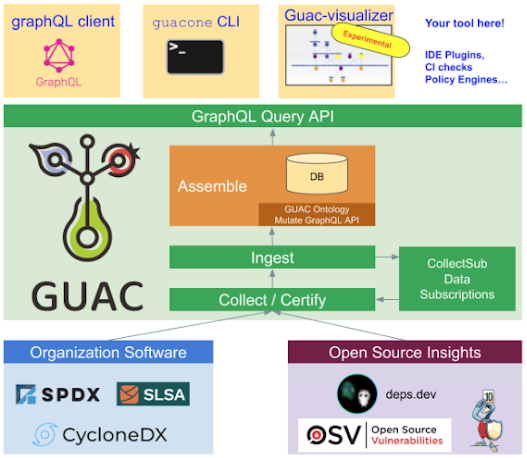

GUAC aggregates software security metadata and maps it to a standard vocabulary of concepts relevant to the software supply chain. This data can be accessed via a GraphQL interface, allowing development of a rich ecosystem of integrations, command-line tools, visualizations, and policy engines.

We hope that GUAC will help the wider software development community better evaluate the supply chain security posture of their organizations and projects. Feedback from early adopters has been overwhelmingly positive:

“At Yahoo, we have found immense value and significant efficiency by utilizing the open source project GUAC. GUAC has allowed us to streamline our processes and increase efficiency in a way that was not possible before,” said Hemil Kadakia, Sr. Mgr. Software Dev Engineering, Paranoids, Yahoo.

The power of GUAC

Dynamic aggregation

GUAC is not just a static database: it is the first application to continuously update a database of the software that an organization develops or uses. Supply chains change daily, and by aggregating your Software Bills of Materials (SBOMs) and Supply-chain Levels for Software Artifacts (SLSA) attestations with threat intelligence sources (e.g., OSV vulnerability feeds) and OSS insights (e.g., deps.dev), GUAC constantly incorporates the latest threat information and deeper analytics to help paint a more complete picture of your risk profile. And by merging external data with internal private metadata, GUAC brings the same level of reasoning to a company’s first-party software portfolio.

Seamless integration of incomplete metadata

Because of the complexity of the modern software stack—often spanning languages and toolchains—we discovered during GUAC development that it is difficult to produce high-quality SBOMs that are accurate, complete, and meet specifications and intents.

Following the U.S. Executive Order on Cybersecurity, a large number of SBOM documents are now generated during release and build workflows to explain to consumers what’s in their software. Because accurate SBOMs are difficult to produce, consumers often end up with incomplete, inaccurate, or conflicting ones. In these situations, GUAC can fill in the gaps in the various supply chain metadata: it links the documents and then uses heuristics to improve the quality of the data and infer the intended meaning. Additionally, the GUAC community is now working closely with SPDX to advance SBOM tooling and improve the quality of metadata.
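To make the idea of linking documents with heuristics more concrete, here is a minimal Python sketch. It is not GUAC's implementation: the record layout, the `normalize_key` and `merge_records` helpers, and the normalization rules (lowercasing names, treating `_` as `-`, dropping a leading `v` from versions) are all assumptions chosen for illustration. It shows how records from several SBOMs that describe the same package might be merged, with gaps filled from whichever document has the data and disagreements recorded rather than silently resolved.

```python
from __future__ import annotations


def normalize_key(name: str, version: str) -> tuple[str, str]:
    """Illustrative heuristic: case-insensitive names, '_' == '-', no 'v' prefix."""
    clean_name = name.strip().lower().replace("_", "-")
    clean_version = version.strip().lower().removeprefix("v")
    return clean_name, clean_version


def merge_records(records: list[dict]) -> dict[tuple[str, str], dict]:
    """Merge package records that normalize to the same (name, version) key."""
    merged: dict[tuple[str, str], dict] = {}
    for rec in records:
        key = normalize_key(rec["name"], rec.get("version", ""))
        entry = merged.setdefault(
            key,
            {"name": key[0], "version": key[1], "sources": [], "conflicts": {}},
        )
        entry["sources"].append(rec["source"])
        for field in ("license", "purl"):
            value = rec.get(field)
            if value is None:
                continue  # this document has a gap for this field
            if field not in entry:
                entry[field] = value  # fill the gap from this document
            elif entry[field] != value:
                # Keep the first value, but record every conflicting claim.
                entry["conflicts"].setdefault(field, {entry[field]}).add(value)
    return merged


# Hypothetical records extracted from three different SBOM files.
records = [
    {"source": "vendor.spdx.json", "name": "Lib_Foo", "version": "v2.3.0",
     "license": "MIT"},
    {"source": "build.cdx.json", "name": "lib-foo", "version": "2.3.0",
     "purl": "pkg:pypi/lib-foo@2.3.0"},
    {"source": "scan.spdx.json", "name": "lib-foo", "version": "2.3.0",
     "license": "Apache-2.0"},
]
merged = merge_records(records)
```

In this toy run, all three records collapse into a single entry that carries the package URL from one document and flags the license disagreement between the other two for a human or a policy to resolve.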

GUAC's process for incorporating and enriching metadata for organizational insight

Consistent interfaces

Alongside the boom in SBOM production, there’s been a rapid expansion of new standards, document types, and formats, making it hard to perform consistent queries. The multiple formats for software supply chain metadata often refer to similar concepts, but with different terms. To integrate these, GUAC defines a common vocabulary for talking about the software supply chain—for example, artifacts, packages, repositories, and the relationships between them.

This vocabulary is then exposed as a GraphQL API, empowering users to build powerful integrations on top of GUAC’s knowledge graph. For example, users are able to query seamlessly with the same commands across different SBOM formats like SPDX and CycloneDX.
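As a rough illustration of why a shared vocabulary matters, the Python sketch below maps tiny SPDX and CycloneDX JSON fragments onto one hypothetical `Package` type, so that a single query function works on both. The field names (`SPDXID`, `versionInfo`, `relationships`, `bom-ref`, `dependsOn`) come from the two formats' JSON representations; the `Package` class and the `from_spdx`, `from_cyclonedx`, and `direct_dependencies` helpers are assumptions made for this example and are not part of GUAC's API or ontology.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Package:
    """Hypothetical common vocabulary entry: one package at one version."""
    name: str
    version: str


def from_spdx(doc: dict) -> dict[Package, set[Package]]:
    """Map an SPDX JSON document to {package: direct dependencies}."""
    by_id = {
        p["SPDXID"]: Package(p["name"], p.get("versionInfo", ""))
        for p in doc.get("packages", [])
    }
    graph: dict[Package, set[Package]] = {pkg: set() for pkg in by_id.values()}
    for rel in doc.get("relationships", []):
        if rel["relationshipType"] != "DEPENDS_ON":
            continue
        src = by_id.get(rel["spdxElementId"])
        dst = by_id.get(rel["relatedSpdxElement"])
        if src is not None and dst is not None:
            graph[src].add(dst)
    return graph


def from_cyclonedx(doc: dict) -> dict[Package, set[Package]]:
    """Map a CycloneDX JSON document to the same {package: dependencies} shape."""
    by_ref = {
        c["bom-ref"]: Package(c["name"], c.get("version", ""))
        for c in doc.get("components", [])
        if "bom-ref" in c
    }
    graph: dict[Package, set[Package]] = {pkg: set() for pkg in by_ref.values()}
    for dep in doc.get("dependencies", []):
        src = by_ref.get(dep["ref"])
        if src is None:
            continue
        for ref in dep.get("dependsOn", []):
            dst = by_ref.get(ref)
            if dst is not None:
                graph[src].add(dst)
    return graph


def direct_dependencies(graph: dict[Package, set[Package]], name: str) -> list[str]:
    """One query, regardless of which SBOM format the graph came from."""
    return sorted(
        dep.name for pkg, deps in graph.items() if pkg.name == name for dep in deps
    )


spdx_doc = {
    "packages": [
        {"SPDXID": "SPDXRef-app", "name": "app", "versionInfo": "1.0"},
        {"SPDXID": "SPDXRef-lib", "name": "libfoo", "versionInfo": "2.3"},
    ],
    "relationships": [
        {"spdxElementId": "SPDXRef-app", "relationshipType": "DEPENDS_ON",
         "relatedSpdxElement": "SPDXRef-lib"},
    ],
}
cdx_doc = {
    "components": [
        {"bom-ref": "app", "name": "app", "version": "1.0"},
        {"bom-ref": "lib", "name": "libfoo", "version": "2.3"},
    ],
    "dependencies": [{"ref": "app", "dependsOn": ["lib"]}],
}

spdx_graph = from_spdx(spdx_doc)
cdx_graph = from_cyclonedx(cdx_doc)
```

Both documents end up as the same graph, so `direct_dependencies(spdx_graph, "app")` and `direct_dependencies(cdx_graph, "app")` give the same answer even though the source formats use different terms.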

According to Ed Warnicke, Distinguished Engineer at Cisco Systems, "Supply chain security is increasingly about making sense of many different kinds of metadata from many different sources. GUAC knits all of that information together into something understandable and actionable."

Potential integrations

Based on these features, we envision potential integrations that users can build on top of GUAC in order to:

Create policies based on trust

Quickly react to security compromises

Determine an upgrade plan in response to a security incident

Create visualizers for data explorations, CLI tools for large scale analysis and incident response, CI checks, IDE plugins to shift policy left, and more
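For a sense of what reacting to a compromise could look like once dependency relationships live in a graph, here is a small Python sketch. It assumes the dependency edges have already been fetched into a plain dictionary; the `affected_by` function and the package names are hypothetical and do not correspond to any GUAC command or query. It walks the graph in reverse to find everything that directly or transitively depends on a compromised package.

```python
from __future__ import annotations

from collections import defaultdict, deque


def affected_by(depends_on: dict[str, set[str]], compromised: str) -> set[str]:
    """Return every package that directly or transitively depends on `compromised`."""
    # Invert the edges: for each package, who depends on it?
    dependents: dict[str, set[str]] = defaultdict(set)
    for pkg, deps in depends_on.items():
        for dep in deps:
            dependents[dep].add(pkg)

    # Breadth-first walk over the reversed edges.
    seen = {compromised}
    queue = deque([compromised])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen - {compromised}


# Hypothetical dependency graph: package -> its direct dependencies.
graph = {
    "web-app": {"http-lib", "logger"},
    "batch-job": {"logger"},
    "http-lib": {"tls-lib"},
    "tls-lib": set(),
    "logger": set(),
}
impacted = affected_by(graph, "tls-lib")
```

Here a compromise of `tls-lib` reaches `http-lib` and, through it, `web-app`, while `batch-job` is unaffected. The same traversal could feed an upgrade plan or a CI check.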

Developers can also build data source integrations under GUAC to expand its coverage. The entire GUAC architecture is plug-and-play, so you can write data integrations to get:

Supply chain metadata from new sources like your preferred security vendors

Parsers to translate this metadata into the GUAC ontology

Database backends to store the GUAC data in either common databases or in organization-defined private data stores
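The three extension points above can be pictured as small interfaces wired together by an ingestion loop. The Python sketch below is only a mental model: GUAC's real interfaces are defined in the project itself, and every name here (`Collector`, `Parser`, `Backend`, `ingest`, and the toy implementations and document layout) is an assumption invented for this example.

```python
from __future__ import annotations

import json
from typing import Iterable, Protocol

# Hypothetical edge shape: (subject, relationship, object).
Edge = tuple[str, str, str]


class Collector(Protocol):
    """Fetches raw metadata documents from some source."""
    def collect(self) -> Iterable[bytes]: ...


class Parser(Protocol):
    """Translates one raw document into edges in a shared vocabulary."""
    def parse(self, raw: bytes) -> list[Edge]: ...


class Backend(Protocol):
    """Persists edges in whatever store the organization prefers."""
    def store(self, edges: list[Edge]) -> None: ...


class StaticCollector:
    """Toy collector that serves documents from memory."""
    def __init__(self, documents: list[bytes]) -> None:
        self.documents = documents

    def collect(self) -> Iterable[bytes]:
        return iter(self.documents)


class DependencyListParser:
    """Toy parser for a made-up JSON layout: {"name": ..., "deps": [...]}."""
    def parse(self, raw: bytes) -> list[Edge]:
        doc = json.loads(raw)
        return [(doc["name"], "depends_on", dep) for dep in doc["deps"]]


class InMemoryBackend:
    """Toy backend that keeps edges in a set."""
    def __init__(self) -> None:
        self.edges: set[Edge] = set()

    def store(self, edges: list[Edge]) -> None:
        self.edges.update(edges)


def ingest(collector: Collector, parser: Parser, backend: Backend) -> int:
    """Run every collected document through the parser and into the backend."""
    count = 0
    for raw in collector.collect():
        edges = parser.parse(raw)
        backend.store(edges)
        count += len(edges)
    return count


backend = InMemoryBackend()
count = ingest(
    StaticCollector([b'{"name": "app", "deps": ["libfoo", "libbar"]}']),
    DependencyListParser(),
    backend,
)
```

Because `ingest` depends only on the three small interfaces, swapping in a collector for a different vendor feed or a backend for a private data store would not require touching the other two pieces.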

GUAC's GraphQL query API enables a diverse ecosystem of tooling

Dejan Bosanac, an engineer at Red Hat and an active contributor to the GUAC project, further described GUAC’s ingestion abilities: “With mechanisms to ingest and certify data from various sources and GraphQL API to later query those data, we see it as a good foundation for our current and future SSCS efforts. Being a true open source initiative with a welcoming community is just a plus.”

Next steps

Google is committed to making GUAC the best metadata synthesis and aggregation tool for security professionals. GUAC contributors are excited to meet at our monthly community calls and look forward to seeing demos of new applications built with GUAC.

“At Kusari, we are proud to have joined forces with Google's Open Source Security Team and the community to create and build GUAC,” says Tim Miller, CEO of Kusari. “With GUAC, we believe in the critical role it plays in safeguarding the software supply chain and we are dedicated to ensuring its success in the ecosystem.”

Google is preparing SBOMs for consumption by the US Federal Government following EO 14028, and we are internally ingesting our SBOM catalog into GUAC to gather early insights. We encourage you to do the same with the GUAC release and submit your feedback. If the API is not flexible enough, please let us know how we can extend it. You can also submit suggestions and feedback on GUAC development or use cases, either by emailing [email protected] or filing an issue on our GitHub repository.

We hope you'll join us in this journey with GUAC!