Recently, OSS-Fuzz reported 26 new vulnerabilities to open source project maintainers, including one vulnerability in the critical OpenSSL library (CVE-2024-9143) that underpins much of internet infrastructure. The reports themselves aren’t unusual—we’ve reported and helped maintainers fix over 11,000 vulnerabilities in the 8 years of the project.

But these particular vulnerabilities represent a milestone for automated vulnerability finding: each was found with AI, using AI-generated and enhanced fuzz targets. The OpenSSL CVE is one of the first vulnerabilities in a critical piece of software that was discovered by LLMs, adding another real-world example to a recent Google discovery of an exploitable stack buffer underflow in the widely used database engine SQLite.

This blog post discusses the results and lessons from a year and a half of work to bring AI-powered fuzzing to this point, both in introducing AI into fuzz target generation and in expanding this to simulate a developer’s workflow. These efforts continue our explorations of how AI can transform vulnerability discovery and strengthen the arsenal of defenders everywhere.

The story so far

In August 2023, the OSS-Fuzz team announced AI-Powered Fuzzing, describing our effort to leverage large language models (LLMs) to improve fuzzing coverage and find more vulnerabilities automatically, before malicious attackers could exploit them. Our approach was to use the coding abilities of an LLM to generate more fuzz targets, which are similar to unit tests that exercise relevant functionality to search for vulnerabilities.

The ideal solution would be to completely automate the manual process of developing a fuzz target end to end:

1. Drafting an initial fuzz target.

2. Fixing any compilation issues that arise.

3. Running the fuzz target to see how it performs, and fixing any obvious mistakes causing runtime issues.

4. Running the corrected fuzz target for a longer period of time, and triaging any crashes to determine the root cause.

5. Fixing vulnerabilities.

In August 2023, we covered our efforts to use an LLM to handle the first two steps. We were able to use an iterative process to generate a fuzz target with a simple prompt including hardcoded examples and compilation errors.
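As a rough illustration of that iterative process, here is a minimal sketch of a draft-compile-fix loop. It is not the oss-fuzz-gen implementation: `ask_llm` and `try_compile` are hypothetical callables supplied by the caller, and the prompt wording and the retry limit are assumptions made for the example.

```python
from typing import Callable, Optional, Tuple

# Hypothetical callables; both signatures are assumptions for this sketch.
AskLLM = Callable[[str], str]                    # prompt -> fuzz target source
TryCompile = Callable[[str], Tuple[bool, str]]   # source -> (ok, compiler errors)


def generate_fuzz_target(
    function_signature: str,
    examples: str,
    ask_llm: AskLLM,
    try_compile: TryCompile,
    max_attempts: int = 5,
) -> Optional[str]:
    """Draft a target, then feed compiler errors back until it builds."""
    # Step 1: initial prompt with the function and hardcoded examples.
    prompt = (
        "Write a libFuzzer fuzz target for this function:\n"
        f"{function_signature}\n\n"
        f"Example fuzz targets:\n{examples}\n"
    )
    for _ in range(max_attempts):
        source = ask_llm(prompt)
        ok, errors = try_compile(source)
        if ok:
            return source
        # Step 2: show the model its own code plus the compiler output.
        prompt = (
            "This fuzz target failed to compile.\n\n"
            f"Source:\n{source}\n\n"
            f"Compiler errors:\n{errors}\n\n"
            "Return a corrected version of the full source."
        )
    return None  # no compiling target within max_attempts
```

Passing the model and the compiler in as parameters keeps the loop easy to exercise with stand-ins before wiring it to a real LLM or build system.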

In January 2024, we open sourced the framework that we were building to enable an LLM to generate fuzz targets. By that point, LLMs were reliably generating targets that exercised more interesting code coverage across 160 projects. But there was still a long tail of projects where we couldn’t get a single working AI-generated fuzz target.

To address this, we’ve been improving the first two steps, as well as implementing steps 3 and 4.

New results: More code coverage and discovered vulnerabilities

We’re now able to automatically gain more coverage in 272 C/C++ projects on OSS-Fuzz (up from 160), adding 370k+ lines of new code coverage. The top coverage improvement in a single project was an increase from 77 lines to 5,434 lines (an increase of roughly 7000%).

This led to the discovery of 26 new vulnerabilities in projects on OSS-Fuzz that already had hundreds of thousands of hours of fuzzing. The highlight is CVE-2024-9143 in the critical and well-tested OpenSSL library. We reported this vulnerability on September 16 and a fix was published on October 16. As far as we can tell, this vulnerability has likely been present for two decades and wouldn’t have been discoverable with existing fuzz targets written by humans.

Another example was a bug in the project cJSON, where, even though a human-written harness already existed to fuzz a specific function, we still discovered a new vulnerability in that same function with an AI-generated target.

One reason that such bugs could remain undiscovered for so long is that line coverage is not a guarantee that a function is free of bugs. Code coverage as a metric isn’t able to measure all possible code paths and states—different flags and configurations may trigger different behaviors, unearthing different bugs. These examples underscore the need to continue to generate new varieties of fuzz targets even for code that is already fuzzed, as has also been shown by Project Zero in the past (1, 2).
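A toy example may make the gap between line coverage and state coverage concrete. The `lookup` function below is hypothetical and unrelated to OpenSSL or cJSON. Two inputs together execute every line without failing, yet a third input that enables both flags at once reads past the end of the table. In C this would be an out-of-bounds read, while Python raises `IndexError`.

```python
def lookup(table, index, wrap, shift):
    """Toy function with two independent flags (hypothetical example)."""
    if wrap:
        index = index % len(table)  # keep index inside the table
    if shift:
        index = index + 1           # move to the next slot
    return table[index]


table = [10, 20, 30]

# These two calls together execute every line of lookup() without failing.
assert lookup(table, 5, wrap=True, shift=False) == 30
assert lookup(table, 0, wrap=False, shift=True) == 20

# Yet enabling both flags at once walks off the end of the table.
try:
    lookup(table, 2, wrap=True, shift=True)
    crashed = False
except IndexError:
    crashed = True

print(crashed)  # prints True
```

A fuzz target that only ever sets one flag at a time would report full line coverage here and still miss the bug, which is why a second target with different options can be worthwhile.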

New improvements

To achieve these results, we’ve been focusing on two major improvements:

Automatically generate more relevant context in our prompts. The more complete and relevant information we can provide the LLM about a project, the less likely it is to hallucinate the missing details in its response. This meant providing more accurate, project-specific context in prompts, such as function and type definitions, cross references, and existing unit tests for each project. To generate this information automatically, we built new infrastructure to index projects across OSS-Fuzz.

LLMs turned out to be highly effective at emulating a typical developer’s entire workflow of writing, testing, and iterating on the fuzz target, as well as triaging the crashes found. Thanks to this, it was possible to automate more parts of the fuzzing workflow. This additional iterative feedback in turn resulted in higher-quality fuzz targets and a greater number of correct ones.
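To make the first improvement more tangible, the sketch below shows one way project-specific context could be assembled into a prompt. The `FunctionContext` fields mirror the kinds of information listed above, but the class, the section titles, and the prompt wording are all assumptions for illustration and do not reflect how the OSS-Fuzz indexing infrastructure exposes this data.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FunctionContext:
    """Project facts gathered for one target function (illustrative fields)."""
    signature: str
    type_definitions: List[str] = field(default_factory=list)
    cross_references: List[str] = field(default_factory=list)
    unit_tests: List[str] = field(default_factory=list)


def _section(title: str, items: List[str]) -> str:
    # Skip empty sections so the prompt does not invite guesses about them.
    if not items:
        return ""
    return f"## {title}\n" + "\n\n".join(items) + "\n\n"


def build_prompt(ctx: FunctionContext) -> str:
    """Combine the function under test with whatever context is available."""
    return (
        "Write a libFuzzer fuzz target for the function below.\n"
        "Use only the types and call patterns shown here.\n\n"
        f"## Function under test\n{ctx.signature}\n\n"
        + _section("Type definitions", ctx.type_definitions)
        + _section("Existing call sites", ctx.cross_references)
        + _section("Existing unit tests", ctx.unit_tests)
    )
```

Keeping each kind of context in its own labeled section makes it straightforward to add or drop a source of information and compare the resulting fuzz targets.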

The workflow in action

Our LLM can now execute the first four steps of the developer’s process (with the fifth soon to come).

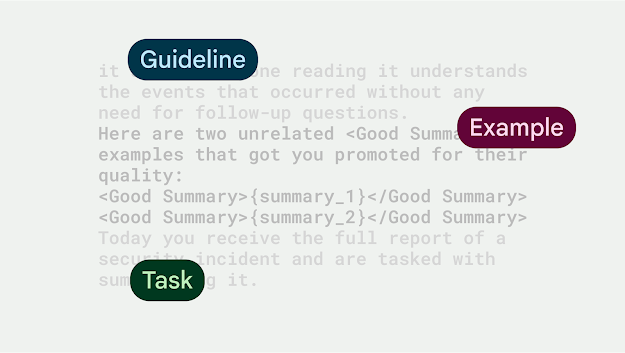

1. Drafting an initial fuzz target.

A developer might check the source code, existing documentation and unit tests, as well as usages of the target function when drafting an initial fuzz target. An LLM can fulfill this role if we provide a prompt with this information and ask it to come up with a fuzz target.

2. Fixing any compilation issues that arise.

Once a developer has a candidate target, they would try to compile it and look at any compilation issues that arise. Again, we can prompt an LLM with details of the compilation errors so it can provide fixes.

Example of compilation errors that an LLM was able to fix

3. Running the fuzz target to see how it performs, and fixing any obvious mistakes causing runtime issues.

Once all compilation errors are fixed, a developer would try running the fuzz target for a short period of time to see if there were any mistakes that led it to instantly crash, suggesting an error with the target rather than a bug discovered in the project.

The following is an example of an LLM fixing a semantic issue with the fuzzing setup:

4. Running the corrected fuzz target for a longer period of time, and triaging any crashes.

At this point, the fuzz target is ready to run for an extended period of time on a suitable fuzzing infrastructure, such as ClusterFuzz.

Any discovered crashes would then need to be triaged, to determine the root causes and whether they represented legitimate vulnerabilities (or bugs in the fuzz target). An LLM can be prompted with the relevant context (stacktraces, fuzz target source code, relevant project source code) to perform this triage.

In this example, the LLM correctly determines this is a bug in the fuzz target, rather than a bug in the project being fuzzed.
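For readers who want to experiment with this triage step, the following sketch shows one possible shape for it: a prompt built from the three pieces of context named above, plus a parser for the model's answer. The `Verdict` enum, the one-word answer convention, and the function names are assumptions invented for this example, not part of OSS-Fuzz.

```python
from enum import Enum


class Verdict(Enum):
    PROJECT_BUG = "project"
    TARGET_BUG = "fuzz_target"
    UNKNOWN = "unknown"


def build_triage_prompt(stacktrace: str, target_source: str, project_source: str) -> str:
    """Assemble stack trace, fuzz target, and project code into one prompt."""
    return (
        "A fuzz target crashed. Decide whether the root cause is in the "
        "project or in the fuzz target itself.\n"
        "Answer on the first line with exactly PROJECT or FUZZ_TARGET, "
        "then explain.\n\n"
        f"Stack trace:\n{stacktrace}\n\n"
        f"Fuzz target source:\n{target_source}\n\n"
        f"Relevant project source:\n{project_source}\n"
    )


def parse_verdict(reply: str) -> Verdict:
    """Read the first line of the reply; anything unexpected is UNKNOWN."""
    lines = reply.strip().splitlines()
    first = lines[0].strip().upper() if lines else ""
    if first == "PROJECT":
        return Verdict.PROJECT_BUG
    if first == "FUZZ_TARGET":
        return Verdict.TARGET_BUG
    return Verdict.UNKNOWN  # candidates for human review
```

Treating every unrecognized reply as `UNKNOWN` keeps ambiguous cases in front of a human reviewer instead of silently filing or discarding them.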

5. Fixing vulnerabilities.

The goal is to fully automate this entire workflow by having the LLM generate a suggested patch for the vulnerability. We don’t have anything we can share here today, but we’re collaborating with various researchers to make this a reality and look forward to sharing results soon.

Up next

Improving automated triaging: to get to a point where we’re confident about not requiring human review. This will help automatically report new vulnerabilities to project maintainers. There are likely more than the 26 vulnerabilities we’ve already reported upstream hiding in our results.

Agent-based architecture: letting the LLM autonomously plan out the steps to solve a particular problem by providing it with access to tools that enable it to get more information, as well as to check and validate results. By providing the LLM with interactive access to real tools such as debuggers, we’ve found that the LLM is more likely to arrive at a correct result.

Integrating our research into OSS-Fuzz as a feature: to achieve a more fully automated end-to-end solution for vulnerability discovery and patching. We hope OSS-Fuzz will be useful for other researchers to evaluate AI-powered vulnerability discovery ideas and ultimately become a tool that will enable defenders to find more vulnerabilities before they get exploited.
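The agent-based architecture in the list above can be pictured as a loop in which the model picks a tool, sees the result, and decides what to do next. The sketch below is a generic illustration of that pattern under stated assumptions: `run_agent`, the `(action, argument)` convention, and the `"finish"` action are hypothetical and are not taken from oss-fuzz-gen.

```python
from typing import Callable, Dict, List, Tuple

# A tool takes one string argument and returns its output as text.
Tool = Callable[[str], str]
# The model sees the transcript so far and returns (action, argument).
# The action is a tool name, or "finish" with the final answer as argument.
Model = Callable[[List[str]], Tuple[str, str]]


def run_agent(task: str, model: Model, tools: Dict[str, Tool], max_steps: int = 10) -> str:
    """Let the model call tools until it finishes or the step budget runs out."""
    transcript = [f"TASK: {task}"]
    for _ in range(max_steps):
        action, argument = model(transcript)
        if action == "finish":
            return argument
        tool = tools.get(action)
        if tool is None:
            observation = f"unknown tool: {action}"
        else:
            observation = tool(argument)
        # Feed the result back so the model can plan its next step.
        transcript.append(f"CALL {action}({argument!r})")
        transcript.append(f"RESULT: {observation}")
    return "no answer within step budget"
```

In a real setup the `tools` dictionary might wrap a debugger session or a build command, and the step budget bounds how long a single investigation can run.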

For more information, check out our open source framework at oss-fuzz-gen. We’re hoping to continue to collaborate in this area with other researchers. Also, be sure to check out the OSS-Fuzz blog for more technical updates.
